Chapter 7 More than Words: Text Analytics

7.1 Introduction

Text expands the universe of data many-fold. See my monograph on text mining in finance at: http://srdas.github.io/Das_TextAnalyticsInFinance.pdf

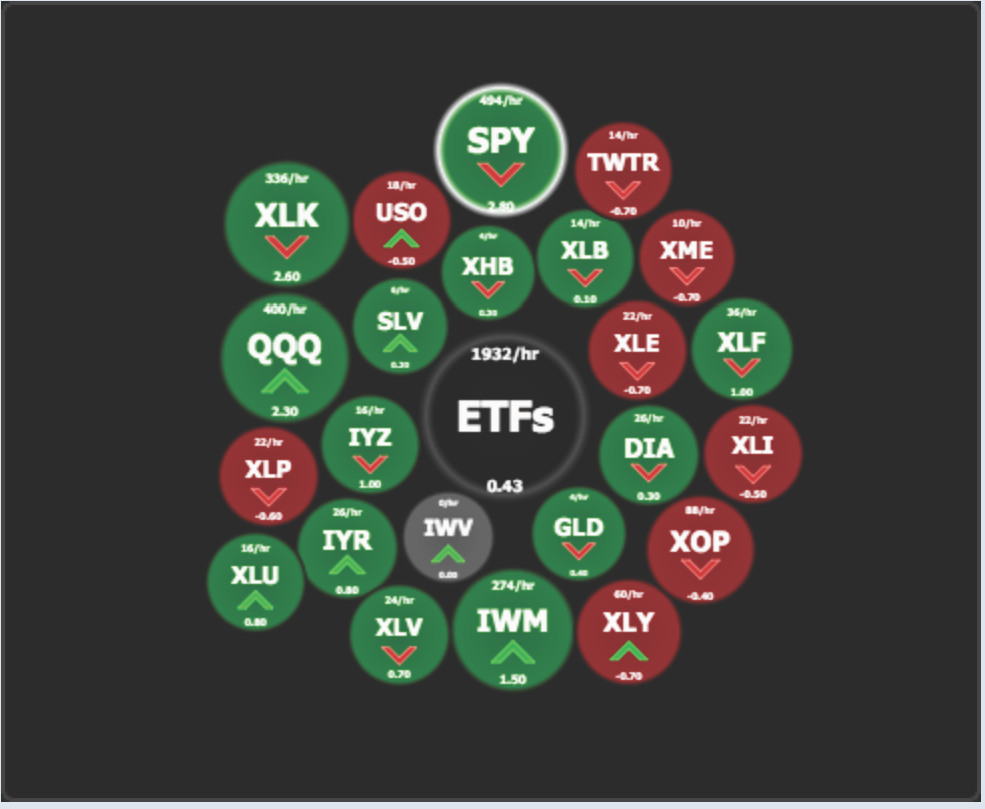

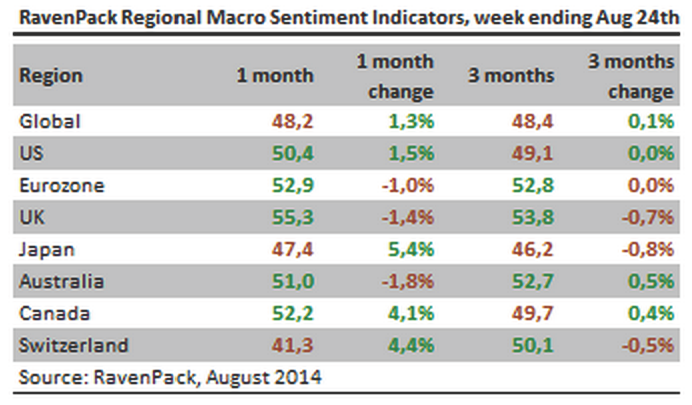

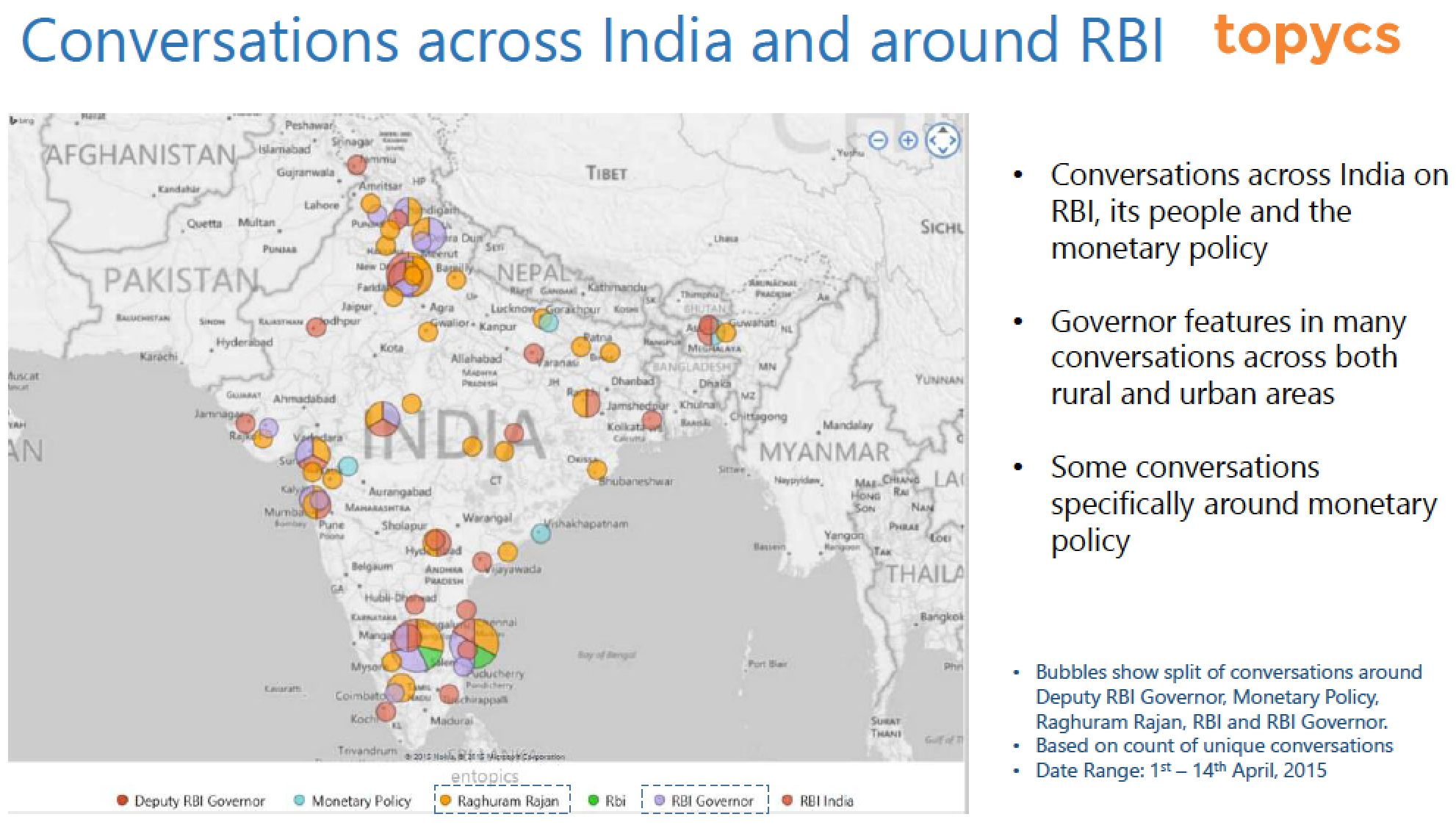

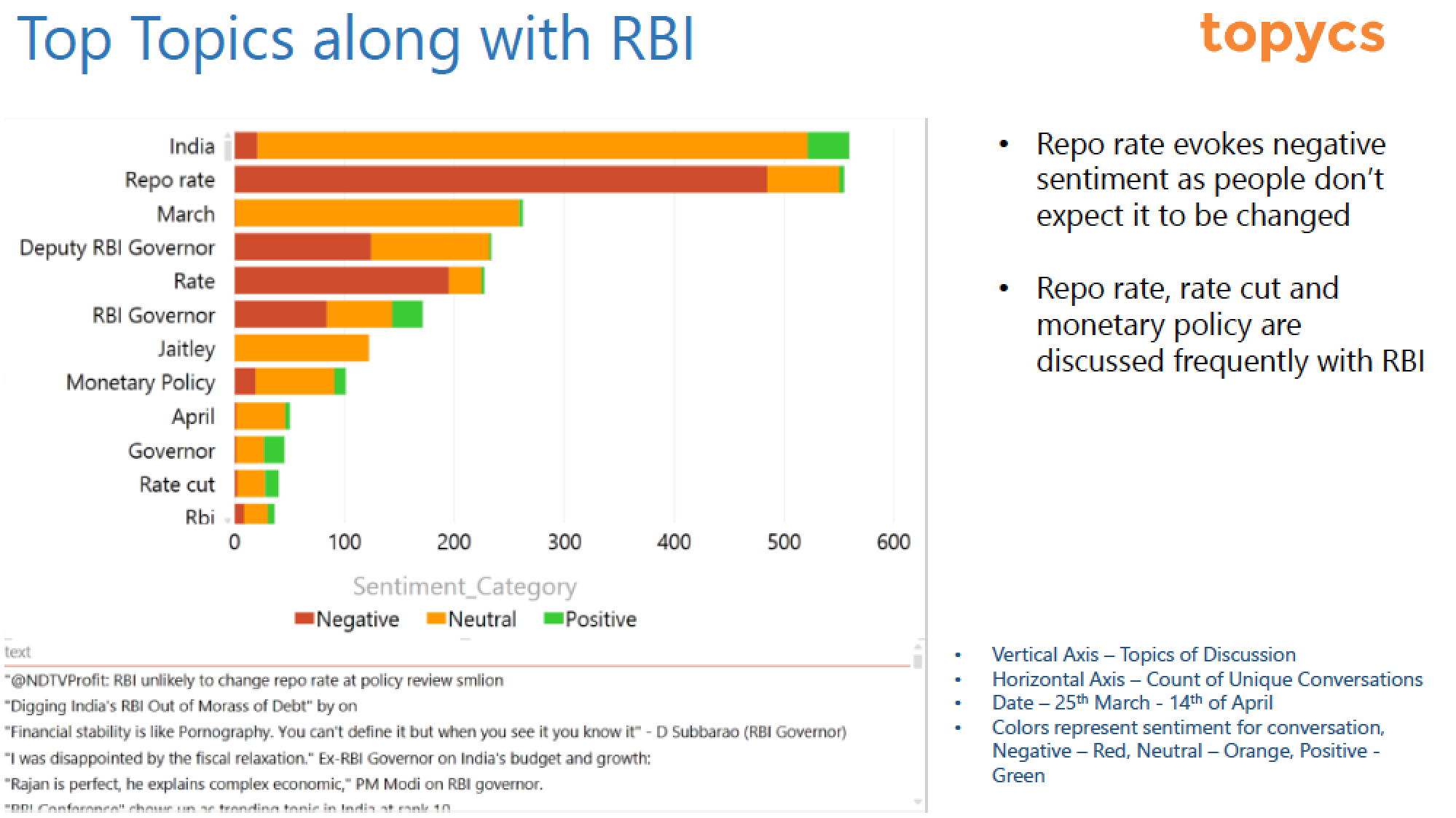

In Finance, for example, text has become a major source of trading information, leading to a new field known as News Metrics.

News analysis is defined as “the measurement of the various qualitative and quantitative attributes of textual news stories. Some of these attributes are: sentiment, relevance, and novelty. Expressing news stories as numbers permits the manipulation of everyday information in a mathematical and statistical way.” (Wikipedia). In this chapter, I provide a framework for text analytics techniques that are in widespread use. I will discuss various text analytic methods and software, and then provide a set of metrics that may be used to assess the performance of analytics. Various directions for this field are discussed through the exposition. The techniques herein can aid in the valuation and trading of securities, facilitate investment decision making, meet regulatory requirements, provide marketing insights, or manage risk.

“News analytics are used in financial modeling, particularly in quantitative and algorithmic trading. Further, news analytics can be used to plot and characterize firm behaviors over time and thus yield important strategic insights about rival firms. News analytics are usually derived through automated text analysis and applied to digital texts using elements from natural language processing and machine learning such as latent semantic analysis, support vector machines, `bag of words’, among other techniques.” (Wikipedia)

7.2 Text as Data

There are many reasons why text has business value. But this is a narrow view. Textual data provides a means of understanding all human behavior through a data-driven, analytical approach. Let’s enumerate some reasons for this.

- Big Text: there is more textual data than numerical data.

- Text is versatile. Nuances and behavioral expressions are not conveyed with numbers, so analyzing text allows us to explore these aspects of human interaction.

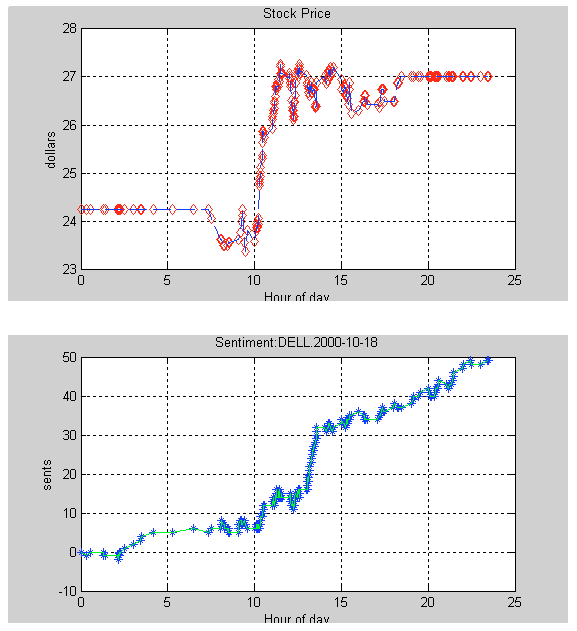

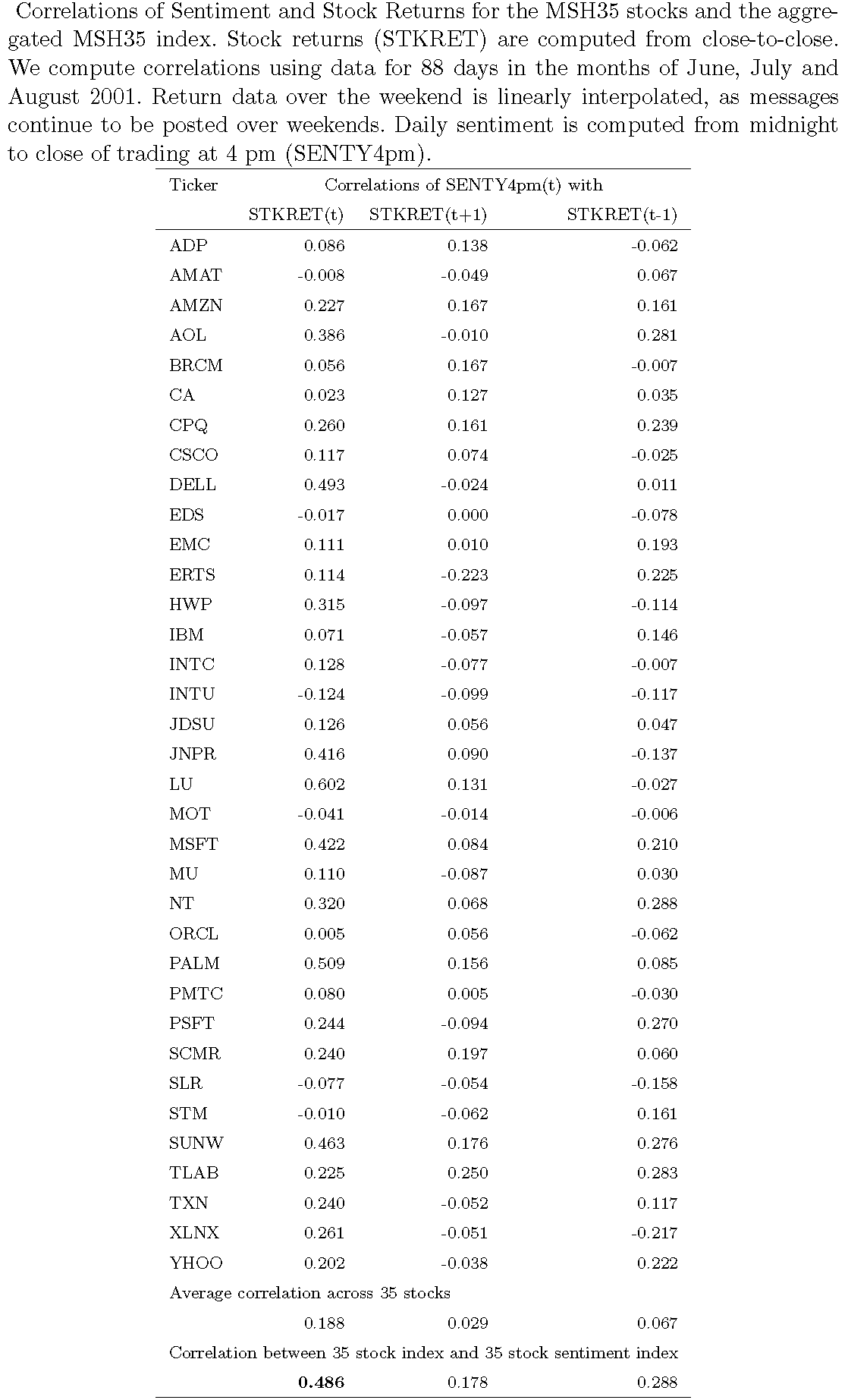

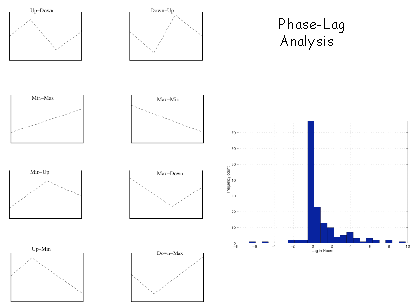

- Text contains emotive content. This has led to the ubiquity of “Sentiment analysis”. See for example: Admati-Pfleiderer 2001; DeMarzo et al 2003; Antweiler-Frank 2004, 2005; Das-Chen 2007; Tetlock 2007; Tetlock et al 2008; Mitra et al 2008; Leinweber-Sisk 2010.

- Text contains opinions and connections. See: Das et al 2005; Das and Sisk 2005; Godes et al 2005; Li 2006; Hochberg et al 2007.

- Numbers aggregate; text disaggregates. Text allows us to drill down into underlying behavior when understanding human interaction.

In a talk at the 17th ACM Conference on Information Knowledge and Management (CIKM ’08), Google’s director of research Peter Norvig stated his unequivocal preference for data over algorithms—“data is more agile than code.” Yet, it is well-understood that too much data can lead to overfitting so that an algorithm becomes mostly useless out-of-sample. 2. Chris Anderson: “Data is the New Theory.” 3. These issues are relevant to text mining, but let’s put them on hold till the end of the session.

7.3 Definition: Text-Mining

I will make an attempt to provide a comprehensive definition of “Text Mining”. As definitions go, it is often easier to enumerate various versions and nuances of an activity than to describe something in one single statement. So here goes:

- Text mining is the large-scale, automated processing of plain text language in digital form to extract data that is converted into useful quantitative or qualitative information.

- Text mining is automated on big data that is not amenable to human processing within reasonable time frames. It entails extracting data that is converted into information of many types.

- Simple: Text mining may be simple as key word searches and counts.

- Complicated: It may require language parsing and complex rules for information extraction.

- Involves structured text, such as the information in forms and some kinds of web pages.

- May be applied to unstructured text is a much harder endeavor.

- Text mining is also aimed at unearthing unseen relationships in unstructured text as in meta analyses of research papers, see Van Noorden 2012.

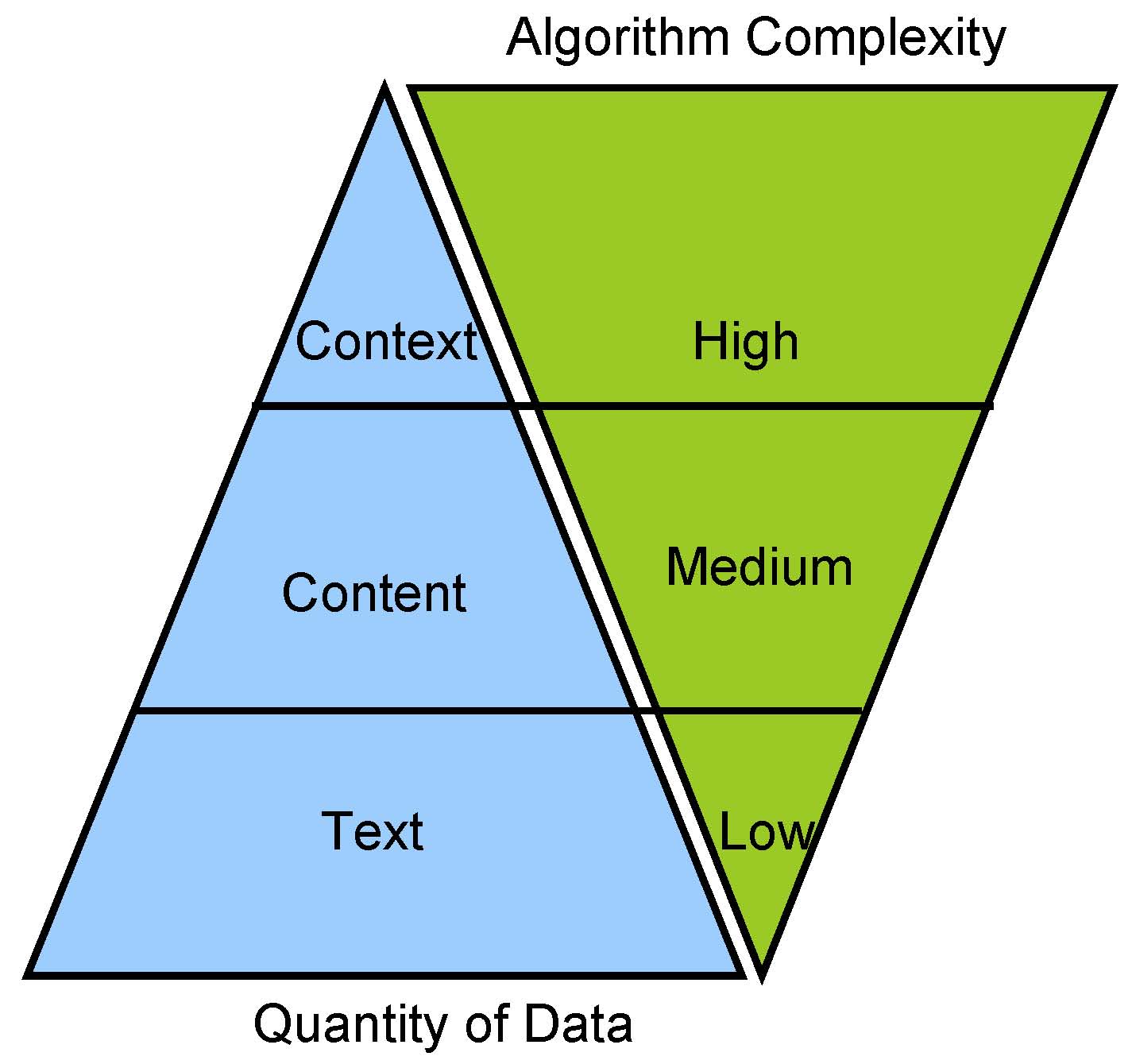

7.4 Data and Algorithms

7.5 Text Extraction

The R programming language is increasingly being used to download text from the web and then analyze it. The ease with which R may be used to scrape text from web site may be seen from the following simple command in R:

text = readLines("http://srdas.github.io/bio-candid.html")

text[15:20]## [1] "journals. Prior to being an academic, he worked in the derivatives"

## [2] "business in the Asia-Pacific region as a Vice-President at"

## [3] "Citibank. His current research interests include: machine learning,"

## [4] "social networks, derivatives pricing models, portfolio theory, the"

## [5] "modeling of default risk, and venture capital. He has published over"

## [6] "ninety articles in academic journals, and has won numerous awards for"Here, we downloaded the my bio page from my university’s web site. It’s a simple HTML file.

length(text)## [1] 807.6 String Parsing

Suppose we just want the 17th line, we do:

text[17]## [1] "Citibank. His current research interests include: machine learning,"And, to find out the character length of the this line we use the function:

library(stringr)

str_length(text[17])## [1] 67We have first invoked the library stringr that contains many string handling functions. In fact, we may also get the length of each line in the text vector by applying the function length() to the entire text vector.

text_len = str_length(text)

print(text_len)## [1] 6 69 0 66 70 70 70 63 69 65 59 59 70 67 66 58 67 66 69 69 67 62 63

## [24] 19 0 0 56 0 65 67 66 65 64 66 69 63 69 65 27 0 3 0 71 71 69 68

## [47] 71 12 0 3 0 71 70 68 71 69 63 67 69 64 67 7 0 3 0 67 71 65 63

## [70] 72 69 68 66 69 70 70 43 0 0 0print(text_len[55])## [1] 71text_len[17]## [1] 677.7 Sort by Length

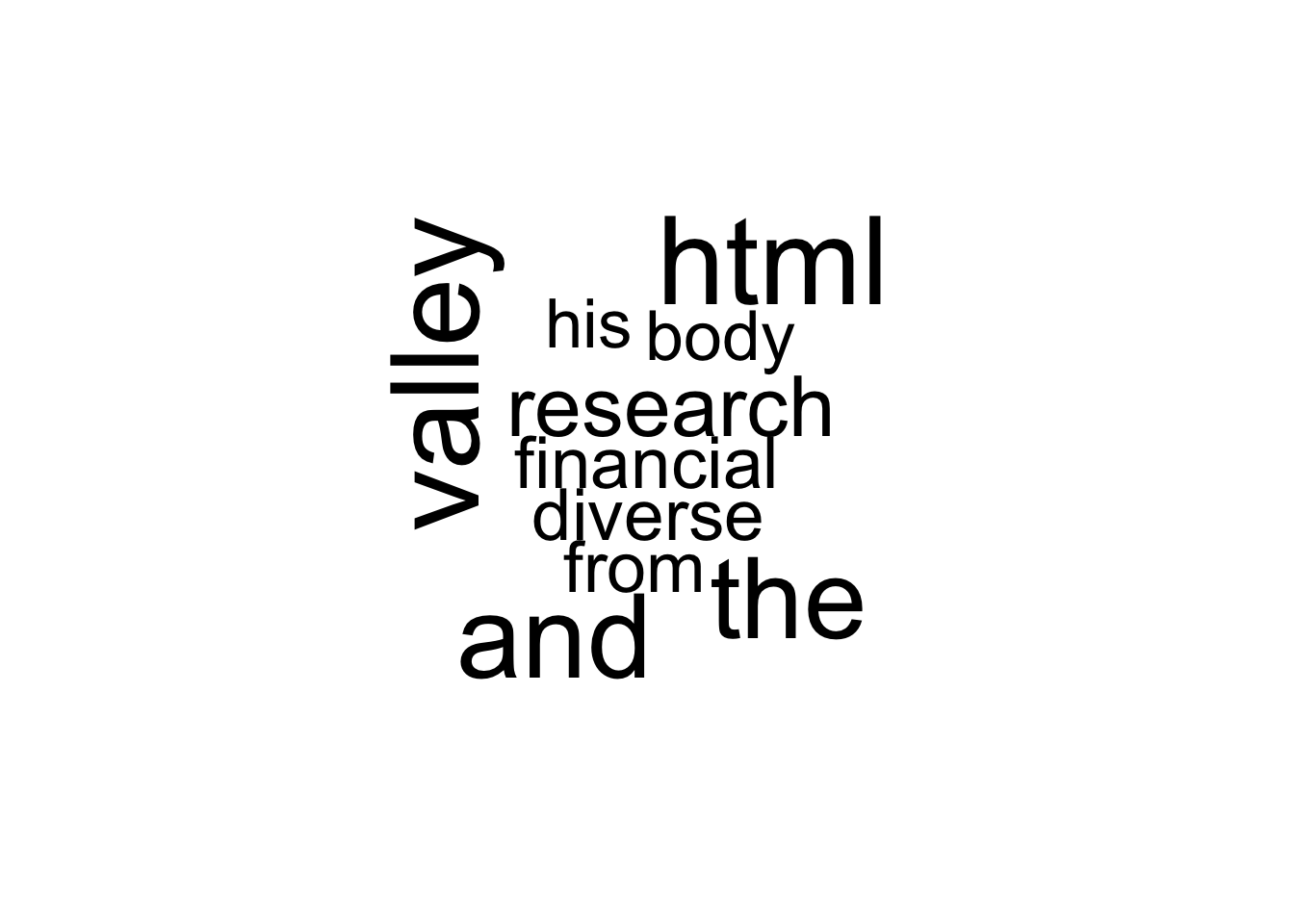

Some lines are very long and are the ones we are mainly interested in as they contain the bulk of the story, whereas many of the remaining lines that are shorter contain html formatting instructions. Thus, we may extract the top three lengthy lines with the following set of commands.

res = sort(text_len,decreasing=TRUE,index.return=TRUE)

idx = res$ix

text2 = text[idx]

text2## [1] "important to open the academic door to the ivory tower and let the world"

## [2] "Sanjiv is now a Professor of Finance at Santa Clara University. He came"

## [3] "to SCU from Harvard Business School and spent a year at UC Berkeley. In"

## [4] "previous lives into his current existence, which is incredibly confused"

## [5] "Sanjiv's research style is instilled with a distinct \"New York state of"

## [6] "funds, the internet, portfolio choice, banking models, credit risk, and"

## [7] "ocean. The many walks in Greenwich village convinced him that there is"

## [8] "Santa Clara University's Leavey School of Business. He previously held"

## [9] "faculty appointments as Associate Professor at Harvard Business School"

## [10] "and UC Berkeley. He holds post-graduate degrees in Finance (M.Phil and"

## [11] "Management, co-editor of The Journal of Derivatives and The Journal of"

## [12] "mind\" - it is chaotic, diverse, with minimal method to the madness. He"

## [13] "any time you like, but you can never leave.\" Which is why he is doomed"

## [14] "to a lifetime in Hotel California. And he believes that, if this is as"

## [15] "<BODY background=\"http://algo.scu.edu/~sanjivdas/graphics/back2.gif\">"

## [16] "Berkeley), an MBA from the Indian Institute of Management, Ahmedabad,"

## [17] "modeling of default risk, and venture capital. He has published over"

## [18] "ninety articles in academic journals, and has won numerous awards for"

## [19] "science fiction movies, and writing cool software code. When there is"

## [20] "academic papers, which helps him relax. Always the contrarian, Sanjiv"

## [21] "his past life in the unreal world, Sanjiv worked at Citibank, N.A. in"

## [22] "has unpublished articles in many other areas. Some years ago, he took"

## [23] "There he learnt about the fascinating field of Randomized Algorithms,"

## [24] "in. Academia is a real challenge, given that he has to reconcile many"

## [25] "explains, you never really finish your education - \"you can check out"

## [26] "the Asia-Pacific region. He takes great pleasure in merging his many"

## [27] "has published articles on derivatives, term-structure models, mutual"

## [28] "more opinions than ideas. He has been known to have turned down many"

## [29] "Financial Services Research, and Associate Editor of other academic"

## [30] "Citibank. His current research interests include: machine learning,"

## [31] "research and teaching. His recent book \"Derivatives: Principles and"

## [32] "growing up, Sanjiv moved to New York to change the world, hopefully"

## [33] "confirming that an unchecked hobby can quickly become an obsession."

## [34] "pursuits, many of which stem from being in the epicenter of Silicon"

## [35] "Coastal living did a lot to mold Sanjiv, who needs to live near the"

## [36] "Sanjiv Das is the William and Janice Terry Professor of Finance at"

## [37] "journals. Prior to being an academic, he worked in the derivatives"

## [38] "social networks, derivatives pricing models, portfolio theory, the"

## [39] "through research. He graduated in 1994 with a Ph.D. from NYU, and"

## [40] "mountains meet the sea, riding sport motorbikes, reading, gadgets,"

## [41] "offers from Mad magazine to publish his academic work. As he often"

## [42] "B.Com in Accounting and Economics (University of Bombay, Sydenham"

## [43] "After loafing and working in many parts of Asia, but never really"

## [44] "since then spent five years in Boston, and now lives in San Jose,"

## [45] "thinks that New York City is the most calming place in the world,"

## [46] "no such thing as a representative investor, yet added many unique"

## [47] "California. Sanjiv loves animals, places in the world where the"

## [48] "skills he now applies earnestly to his editorial work, and other"

## [49] "Ph.D. from New York University), Computer Science (M.S. from UC"

## [50] "currently also serves as a Senior Fellow at the FDIC Center for"

## [51] "time available from the excitement of daily life, Sanjiv writes"

## [52] "time off to get another degree in computer science at Berkeley,"

## [53] "features to his personal utility function. He learnt that it is"

## [54] "Practice\" was published in May 2010 (second edition 2016). He"

## [55] "College), and is also a qualified Cost and Works Accountant"

## [56] "(AICWA). He is a senior editor of The Journal of Investment"

## [57] "business in the Asia-Pacific region as a Vice-President at"

## [58] "<p> <B>Sanjiv Das: A Short Academic Life History</B> <p>"

## [59] "bad as it gets, life is really pretty good."

## [60] "after California of course."

## [61] "Financial Research."

## [62] "and diverse."

## [63] "Valley."

## [64] "<HTML>"

## [65] "<p>"

## [66] "<p>"

## [67] "<p>"

## [68] ""

## [69] ""

## [70] ""

## [71] ""

## [72] ""

## [73] ""

## [74] ""

## [75] ""

## [76] ""

## [77] ""

## [78] ""

## [79] ""

## [80] ""7.8 Text cleanup

In short, text extraction can be exceedingly simple, though getting clean text is not as easy an operation. Removing html tags and other unnecessary elements in the file is also a fairly simple operation. We undertake the following steps that use generalized regular expressions (i.e., grep) to eliminate html formatting characters.

This will generate one single paragraph of text, relatively clean of formatting characters. Such a text collection is also known as a “bag of words”.

text = paste(text,collapse="\n")

print(text)## [1] "<HTML>\n<BODY background=\"http://algo.scu.edu/~sanjivdas/graphics/back2.gif\">\n\nSanjiv Das is the William and Janice Terry Professor of Finance at\nSanta Clara University's Leavey School of Business. He previously held\nfaculty appointments as Associate Professor at Harvard Business School\nand UC Berkeley. He holds post-graduate degrees in Finance (M.Phil and\nPh.D. from New York University), Computer Science (M.S. from UC\nBerkeley), an MBA from the Indian Institute of Management, Ahmedabad,\nB.Com in Accounting and Economics (University of Bombay, Sydenham\nCollege), and is also a qualified Cost and Works Accountant\n(AICWA). He is a senior editor of The Journal of Investment\nManagement, co-editor of The Journal of Derivatives and The Journal of\nFinancial Services Research, and Associate Editor of other academic\njournals. Prior to being an academic, he worked in the derivatives\nbusiness in the Asia-Pacific region as a Vice-President at\nCitibank. His current research interests include: machine learning,\nsocial networks, derivatives pricing models, portfolio theory, the\nmodeling of default risk, and venture capital. He has published over\nninety articles in academic journals, and has won numerous awards for\nresearch and teaching. His recent book \"Derivatives: Principles and\nPractice\" was published in May 2010 (second edition 2016). He\ncurrently also serves as a Senior Fellow at the FDIC Center for\nFinancial Research.\n\n\n<p> <B>Sanjiv Das: A Short Academic Life History</B> <p>\n\nAfter loafing and working in many parts of Asia, but never really\ngrowing up, Sanjiv moved to New York to change the world, hopefully\nthrough research. He graduated in 1994 with a Ph.D. from NYU, and\nsince then spent five years in Boston, and now lives in San Jose,\nCalifornia. Sanjiv loves animals, places in the world where the\nmountains meet the sea, riding sport motorbikes, reading, gadgets,\nscience fiction movies, and writing cool software code. When there is\ntime available from the excitement of daily life, Sanjiv writes\nacademic papers, which helps him relax. Always the contrarian, Sanjiv\nthinks that New York City is the most calming place in the world,\nafter California of course.\n\n<p>\n\nSanjiv is now a Professor of Finance at Santa Clara University. He came\nto SCU from Harvard Business School and spent a year at UC Berkeley. In\nhis past life in the unreal world, Sanjiv worked at Citibank, N.A. in\nthe Asia-Pacific region. He takes great pleasure in merging his many\nprevious lives into his current existence, which is incredibly confused\nand diverse.\n\n<p>\n\nSanjiv's research style is instilled with a distinct \"New York state of\nmind\" - it is chaotic, diverse, with minimal method to the madness. He\nhas published articles on derivatives, term-structure models, mutual\nfunds, the internet, portfolio choice, banking models, credit risk, and\nhas unpublished articles in many other areas. Some years ago, he took\ntime off to get another degree in computer science at Berkeley,\nconfirming that an unchecked hobby can quickly become an obsession.\nThere he learnt about the fascinating field of Randomized Algorithms,\nskills he now applies earnestly to his editorial work, and other\npursuits, many of which stem from being in the epicenter of Silicon\nValley.\n\n<p>\n\nCoastal living did a lot to mold Sanjiv, who needs to live near the\nocean. The many walks in Greenwich village convinced him that there is\nno such thing as a representative investor, yet added many unique\nfeatures to his personal utility function. He learnt that it is\nimportant to open the academic door to the ivory tower and let the world\nin. Academia is a real challenge, given that he has to reconcile many\nmore opinions than ideas. He has been known to have turned down many\noffers from Mad magazine to publish his academic work. As he often\nexplains, you never really finish your education - \"you can check out\nany time you like, but you can never leave.\" Which is why he is doomed\nto a lifetime in Hotel California. And he believes that, if this is as\nbad as it gets, life is really pretty good.\n\n\n"text = str_replace_all(text,"[<>{}()&;,.\n]"," ")

print(text)## [1] " HTML BODY background=\"http://algo scu edu/~sanjivdas/graphics/back2 gif\" Sanjiv Das is the William and Janice Terry Professor of Finance at Santa Clara University's Leavey School of Business He previously held faculty appointments as Associate Professor at Harvard Business School and UC Berkeley He holds post-graduate degrees in Finance M Phil and Ph D from New York University Computer Science M S from UC Berkeley an MBA from the Indian Institute of Management Ahmedabad B Com in Accounting and Economics University of Bombay Sydenham College and is also a qualified Cost and Works Accountant AICWA He is a senior editor of The Journal of Investment Management co-editor of The Journal of Derivatives and The Journal of Financial Services Research and Associate Editor of other academic journals Prior to being an academic he worked in the derivatives business in the Asia-Pacific region as a Vice-President at Citibank His current research interests include: machine learning social networks derivatives pricing models portfolio theory the modeling of default risk and venture capital He has published over ninety articles in academic journals and has won numerous awards for research and teaching His recent book \"Derivatives: Principles and Practice\" was published in May 2010 second edition 2016 He currently also serves as a Senior Fellow at the FDIC Center for Financial Research p B Sanjiv Das: A Short Academic Life History /B p After loafing and working in many parts of Asia but never really growing up Sanjiv moved to New York to change the world hopefully through research He graduated in 1994 with a Ph D from NYU and since then spent five years in Boston and now lives in San Jose California Sanjiv loves animals places in the world where the mountains meet the sea riding sport motorbikes reading gadgets science fiction movies and writing cool software code When there is time available from the excitement of daily life Sanjiv writes academic papers which helps him relax Always the contrarian Sanjiv thinks that New York City is the most calming place in the world after California of course p Sanjiv is now a Professor of Finance at Santa Clara University He came to SCU from Harvard Business School and spent a year at UC Berkeley In his past life in the unreal world Sanjiv worked at Citibank N A in the Asia-Pacific region He takes great pleasure in merging his many previous lives into his current existence which is incredibly confused and diverse p Sanjiv's research style is instilled with a distinct \"New York state of mind\" - it is chaotic diverse with minimal method to the madness He has published articles on derivatives term-structure models mutual funds the internet portfolio choice banking models credit risk and has unpublished articles in many other areas Some years ago he took time off to get another degree in computer science at Berkeley confirming that an unchecked hobby can quickly become an obsession There he learnt about the fascinating field of Randomized Algorithms skills he now applies earnestly to his editorial work and other pursuits many of which stem from being in the epicenter of Silicon Valley p Coastal living did a lot to mold Sanjiv who needs to live near the ocean The many walks in Greenwich village convinced him that there is no such thing as a representative investor yet added many unique features to his personal utility function He learnt that it is important to open the academic door to the ivory tower and let the world in Academia is a real challenge given that he has to reconcile many more opinions than ideas He has been known to have turned down many offers from Mad magazine to publish his academic work As he often explains you never really finish your education - \"you can check out any time you like but you can never leave \" Which is why he is doomed to a lifetime in Hotel California And he believes that if this is as bad as it gets life is really pretty good "7.9 The XML Package

The XML package in R also comes with many functions that aid in cleaning up text and dropping it (mostly unformatted) into a flat file or data frame. This may then be further processed. Here is some example code for this.

7.9.1 Processing XML files in R into a data frame

The following example has been adapted from r-bloggers.com. It uses the following URL:

http://www.w3schools.com/xml/plant_catalog.xml

library(XML)

#Part1: Reading an xml and creating a data frame with it.

xml.url <- "http://www.w3schools.com/xml/plant_catalog.xml"

xmlfile <- xmlTreeParse(xml.url)

xmltop <- xmlRoot(xmlfile)

plantcat <- xmlSApply(xmltop, function(x) xmlSApply(x, xmlValue))

plantcat_df <- data.frame(t(plantcat),row.names=NULL)

plantcat_df[1:5,1:4]7.9.2 Creating a XML file from a data frame

library(XML)## Warning: package 'XML' was built under R version 3.3.2## Loading required package: methods#Example adapted from https://stat.ethz.ch/pipermail/r-help/2008-September/175364.html

#Load the iris data set and create a data frame

data("iris")

data <- as.data.frame(iris)

xml <- xmlTree()

xml$addTag("document", close=FALSE)## Warning in xmlRoot.XMLInternalDocument(currentNodes[[1]]): empty XML

## documentfor (i in 1:nrow(data)) {

xml$addTag("row", close=FALSE)

for (j in names(data)) {

xml$addTag(j, data[i, j])

}

xml$closeTag()

}

xml$closeTag()

#view the xml (uncomment line below to see XML, long output)

cat(saveXML(xml))## <?xml version="1.0"?>

##

## <document>

## <row>

## <Sepal.Length>5.1</Sepal.Length>

## <Sepal.Width>3.5</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.9</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.7</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>1.3</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.6</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>3.6</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.4</Sepal.Length>

## <Sepal.Width>3.9</Sepal.Width>

## <Petal.Length>1.7</Petal.Length>

## <Petal.Width>0.4</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.6</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.3</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.4</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.9</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.1</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.4</Sepal.Length>

## <Sepal.Width>3.7</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.8</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>1.6</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.8</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.1</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.3</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>1.1</Petal.Length>

## <Petal.Width>0.1</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.8</Sepal.Length>

## <Sepal.Width>4</Sepal.Width>

## <Petal.Length>1.2</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.7</Sepal.Length>

## <Sepal.Width>4.4</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.4</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.4</Sepal.Length>

## <Sepal.Width>3.9</Sepal.Width>

## <Petal.Length>1.3</Petal.Length>

## <Petal.Width>0.4</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.1</Sepal.Length>

## <Sepal.Width>3.5</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.3</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.7</Sepal.Length>

## <Sepal.Width>3.8</Sepal.Width>

## <Petal.Length>1.7</Petal.Length>

## <Petal.Width>0.3</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.1</Sepal.Length>

## <Sepal.Width>3.8</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.3</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.4</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>1.7</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.1</Sepal.Length>

## <Sepal.Width>3.7</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.4</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.6</Sepal.Length>

## <Sepal.Width>3.6</Sepal.Width>

## <Petal.Length>1</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.1</Sepal.Length>

## <Sepal.Width>3.3</Sepal.Width>

## <Petal.Length>1.7</Petal.Length>

## <Petal.Width>0.5</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.8</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>1.9</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>1.6</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>1.6</Petal.Length>

## <Petal.Width>0.4</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.2</Sepal.Length>

## <Sepal.Width>3.5</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.2</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.7</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>1.6</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.8</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>1.6</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.4</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.4</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.2</Sepal.Length>

## <Sepal.Width>4.1</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.1</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.5</Sepal.Length>

## <Sepal.Width>4.2</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.9</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>1.2</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.5</Sepal.Length>

## <Sepal.Width>3.5</Sepal.Width>

## <Petal.Length>1.3</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.9</Sepal.Length>

## <Sepal.Width>3.6</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.1</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.4</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>1.3</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.1</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>3.5</Sepal.Width>

## <Petal.Length>1.3</Petal.Length>

## <Petal.Width>0.3</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.5</Sepal.Length>

## <Sepal.Width>2.3</Sepal.Width>

## <Petal.Length>1.3</Petal.Length>

## <Petal.Width>0.3</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.4</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>1.3</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>3.5</Sepal.Width>

## <Petal.Length>1.6</Petal.Length>

## <Petal.Width>0.6</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.1</Sepal.Length>

## <Sepal.Width>3.8</Sepal.Width>

## <Petal.Length>1.9</Petal.Length>

## <Petal.Width>0.4</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.8</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.3</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.1</Sepal.Length>

## <Sepal.Width>3.8</Sepal.Width>

## <Petal.Length>1.6</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>4.6</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5.3</Sepal.Length>

## <Sepal.Width>3.7</Sepal.Width>

## <Petal.Length>1.5</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>3.3</Sepal.Width>

## <Petal.Length>1.4</Petal.Length>

## <Petal.Width>0.2</Petal.Width>

## <Species>setosa</Species>

## </row>

## <row>

## <Sepal.Length>7</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>4.7</Petal.Length>

## <Petal.Width>1.4</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.4</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>4.5</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.9</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>4.9</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.5</Sepal.Length>

## <Sepal.Width>2.3</Sepal.Width>

## <Petal.Length>4</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.5</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>4.6</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.7</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>4.5</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.3</Sepal.Length>

## <Sepal.Width>3.3</Sepal.Width>

## <Petal.Length>4.7</Petal.Length>

## <Petal.Width>1.6</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>4.9</Sepal.Length>

## <Sepal.Width>2.4</Sepal.Width>

## <Petal.Length>3.3</Petal.Length>

## <Petal.Width>1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.6</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>4.6</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.2</Sepal.Length>

## <Sepal.Width>2.7</Sepal.Width>

## <Petal.Length>3.9</Petal.Length>

## <Petal.Width>1.4</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>2</Sepal.Width>

## <Petal.Length>3.5</Petal.Length>

## <Petal.Width>1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.9</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>4.2</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6</Sepal.Length>

## <Sepal.Width>2.2</Sepal.Width>

## <Petal.Length>4</Petal.Length>

## <Petal.Width>1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.1</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>4.7</Petal.Length>

## <Petal.Width>1.4</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.6</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>3.6</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.7</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>4.4</Petal.Length>

## <Petal.Width>1.4</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.6</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>4.5</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.8</Sepal.Length>

## <Sepal.Width>2.7</Sepal.Width>

## <Petal.Length>4.1</Petal.Length>

## <Petal.Width>1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.2</Sepal.Length>

## <Sepal.Width>2.2</Sepal.Width>

## <Petal.Length>4.5</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.6</Sepal.Length>

## <Sepal.Width>2.5</Sepal.Width>

## <Petal.Length>3.9</Petal.Length>

## <Petal.Width>1.1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.9</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>4.8</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.1</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>4</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.3</Sepal.Length>

## <Sepal.Width>2.5</Sepal.Width>

## <Petal.Length>4.9</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.1</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>4.7</Petal.Length>

## <Petal.Width>1.2</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.4</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>4.3</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.6</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>4.4</Petal.Length>

## <Petal.Width>1.4</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.8</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>4.8</Petal.Length>

## <Petal.Width>1.4</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.7</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>5</Petal.Length>

## <Petal.Width>1.7</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>4.5</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.7</Sepal.Length>

## <Sepal.Width>2.6</Sepal.Width>

## <Petal.Length>3.5</Petal.Length>

## <Petal.Width>1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.5</Sepal.Length>

## <Sepal.Width>2.4</Sepal.Width>

## <Petal.Length>3.8</Petal.Length>

## <Petal.Width>1.1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.5</Sepal.Length>

## <Sepal.Width>2.4</Sepal.Width>

## <Petal.Length>3.7</Petal.Length>

## <Petal.Width>1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.8</Sepal.Length>

## <Sepal.Width>2.7</Sepal.Width>

## <Petal.Length>3.9</Petal.Length>

## <Petal.Width>1.2</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6</Sepal.Length>

## <Sepal.Width>2.7</Sepal.Width>

## <Petal.Length>5.1</Petal.Length>

## <Petal.Width>1.6</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.4</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>4.5</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>4.5</Petal.Length>

## <Petal.Width>1.6</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.7</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>4.7</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.3</Sepal.Length>

## <Sepal.Width>2.3</Sepal.Width>

## <Petal.Length>4.4</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.6</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>4.1</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.5</Sepal.Length>

## <Sepal.Width>2.5</Sepal.Width>

## <Petal.Length>4</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.5</Sepal.Length>

## <Sepal.Width>2.6</Sepal.Width>

## <Petal.Length>4.4</Petal.Length>

## <Petal.Width>1.2</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.1</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>4.6</Petal.Length>

## <Petal.Width>1.4</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.8</Sepal.Length>

## <Sepal.Width>2.6</Sepal.Width>

## <Petal.Length>4</Petal.Length>

## <Petal.Width>1.2</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5</Sepal.Length>

## <Sepal.Width>2.3</Sepal.Width>

## <Petal.Length>3.3</Petal.Length>

## <Petal.Width>1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.6</Sepal.Length>

## <Sepal.Width>2.7</Sepal.Width>

## <Petal.Length>4.2</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.7</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>4.2</Petal.Length>

## <Petal.Width>1.2</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.7</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>4.2</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.2</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>4.3</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.1</Sepal.Length>

## <Sepal.Width>2.5</Sepal.Width>

## <Petal.Length>3</Petal.Length>

## <Petal.Width>1.1</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>5.7</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>4.1</Petal.Length>

## <Petal.Width>1.3</Petal.Width>

## <Species>versicolor</Species>

## </row>

## <row>

## <Sepal.Length>6.3</Sepal.Length>

## <Sepal.Width>3.3</Sepal.Width>

## <Petal.Length>6</Petal.Length>

## <Petal.Width>2.5</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>5.8</Sepal.Length>

## <Sepal.Width>2.7</Sepal.Width>

## <Petal.Length>5.1</Petal.Length>

## <Petal.Width>1.9</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.1</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>5.9</Petal.Length>

## <Petal.Width>2.1</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.3</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>5.6</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.5</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>5.8</Petal.Length>

## <Petal.Width>2.2</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.6</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>6.6</Petal.Length>

## <Petal.Width>2.1</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>4.9</Sepal.Length>

## <Sepal.Width>2.5</Sepal.Width>

## <Petal.Length>4.5</Petal.Length>

## <Petal.Width>1.7</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.3</Sepal.Length>

## <Sepal.Width>2.9</Sepal.Width>

## <Petal.Length>6.3</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.7</Sepal.Length>

## <Sepal.Width>2.5</Sepal.Width>

## <Petal.Length>5.8</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.2</Sepal.Length>

## <Sepal.Width>3.6</Sepal.Width>

## <Petal.Length>6.1</Petal.Length>

## <Petal.Width>2.5</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.5</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>5.1</Petal.Length>

## <Petal.Width>2</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.4</Sepal.Length>

## <Sepal.Width>2.7</Sepal.Width>

## <Petal.Length>5.3</Petal.Length>

## <Petal.Width>1.9</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.8</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>5.5</Petal.Length>

## <Petal.Width>2.1</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>5.7</Sepal.Length>

## <Sepal.Width>2.5</Sepal.Width>

## <Petal.Length>5</Petal.Length>

## <Petal.Width>2</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>5.8</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>5.1</Petal.Length>

## <Petal.Width>2.4</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.4</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>5.3</Petal.Length>

## <Petal.Width>2.3</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.5</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>5.5</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.7</Sepal.Length>

## <Sepal.Width>3.8</Sepal.Width>

## <Petal.Length>6.7</Petal.Length>

## <Petal.Width>2.2</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.7</Sepal.Length>

## <Sepal.Width>2.6</Sepal.Width>

## <Petal.Length>6.9</Petal.Length>

## <Petal.Width>2.3</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6</Sepal.Length>

## <Sepal.Width>2.2</Sepal.Width>

## <Petal.Length>5</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.9</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>5.7</Petal.Length>

## <Petal.Width>2.3</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>5.6</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>4.9</Petal.Length>

## <Petal.Width>2</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.7</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>6.7</Petal.Length>

## <Petal.Width>2</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.3</Sepal.Length>

## <Sepal.Width>2.7</Sepal.Width>

## <Petal.Length>4.9</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.7</Sepal.Length>

## <Sepal.Width>3.3</Sepal.Width>

## <Petal.Length>5.7</Petal.Length>

## <Petal.Width>2.1</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.2</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>6</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.2</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>4.8</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.1</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>4.9</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.4</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>5.6</Petal.Length>

## <Petal.Width>2.1</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.2</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>5.8</Petal.Length>

## <Petal.Width>1.6</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.4</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>6.1</Petal.Length>

## <Petal.Width>1.9</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.9</Sepal.Length>

## <Sepal.Width>3.8</Sepal.Width>

## <Petal.Length>6.4</Petal.Length>

## <Petal.Width>2</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.4</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>5.6</Petal.Length>

## <Petal.Width>2.2</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.3</Sepal.Length>

## <Sepal.Width>2.8</Sepal.Width>

## <Petal.Length>5.1</Petal.Length>

## <Petal.Width>1.5</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.1</Sepal.Length>

## <Sepal.Width>2.6</Sepal.Width>

## <Petal.Length>5.6</Petal.Length>

## <Petal.Width>1.4</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>7.7</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>6.1</Petal.Length>

## <Petal.Width>2.3</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.3</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>5.6</Petal.Length>

## <Petal.Width>2.4</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.4</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>5.5</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>4.8</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.9</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>5.4</Petal.Length>

## <Petal.Width>2.1</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.7</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>5.6</Petal.Length>

## <Petal.Width>2.4</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.9</Sepal.Length>

## <Sepal.Width>3.1</Sepal.Width>

## <Petal.Length>5.1</Petal.Length>

## <Petal.Width>2.3</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>5.8</Sepal.Length>

## <Sepal.Width>2.7</Sepal.Width>

## <Petal.Length>5.1</Petal.Length>

## <Petal.Width>1.9</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.8</Sepal.Length>

## <Sepal.Width>3.2</Sepal.Width>

## <Petal.Length>5.9</Petal.Length>

## <Petal.Width>2.3</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.7</Sepal.Length>

## <Sepal.Width>3.3</Sepal.Width>

## <Petal.Length>5.7</Petal.Length>

## <Petal.Width>2.5</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.7</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>5.2</Petal.Length>

## <Petal.Width>2.3</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.3</Sepal.Length>

## <Sepal.Width>2.5</Sepal.Width>

## <Petal.Length>5</Petal.Length>

## <Petal.Width>1.9</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.5</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>5.2</Petal.Length>

## <Petal.Width>2</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>6.2</Sepal.Length>

## <Sepal.Width>3.4</Sepal.Width>

## <Petal.Length>5.4</Petal.Length>

## <Petal.Width>2.3</Petal.Width>

## <Species>virginica</Species>

## </row>

## <row>

## <Sepal.Length>5.9</Sepal.Length>

## <Sepal.Width>3</Sepal.Width>

## <Petal.Length>5.1</Petal.Length>

## <Petal.Width>1.8</Petal.Width>

## <Species>virginica</Species>

## </row>

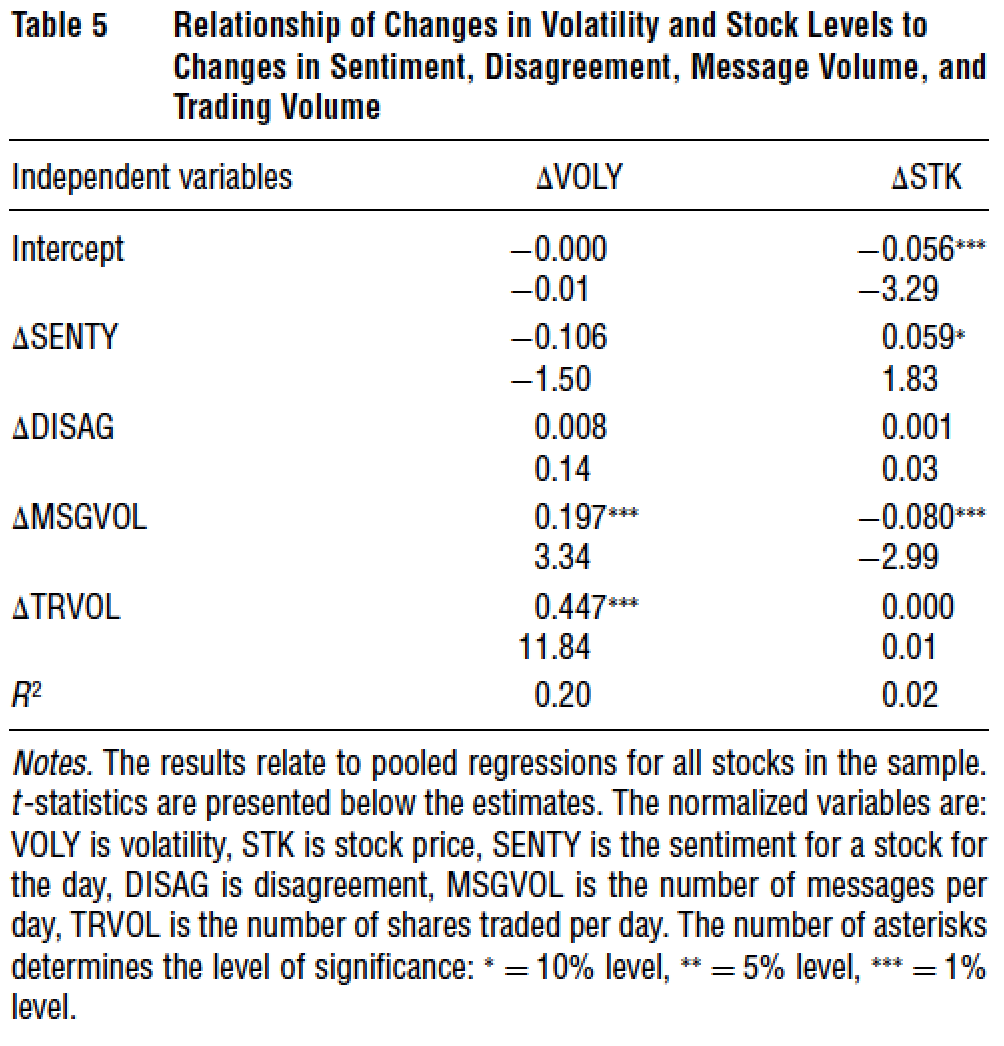

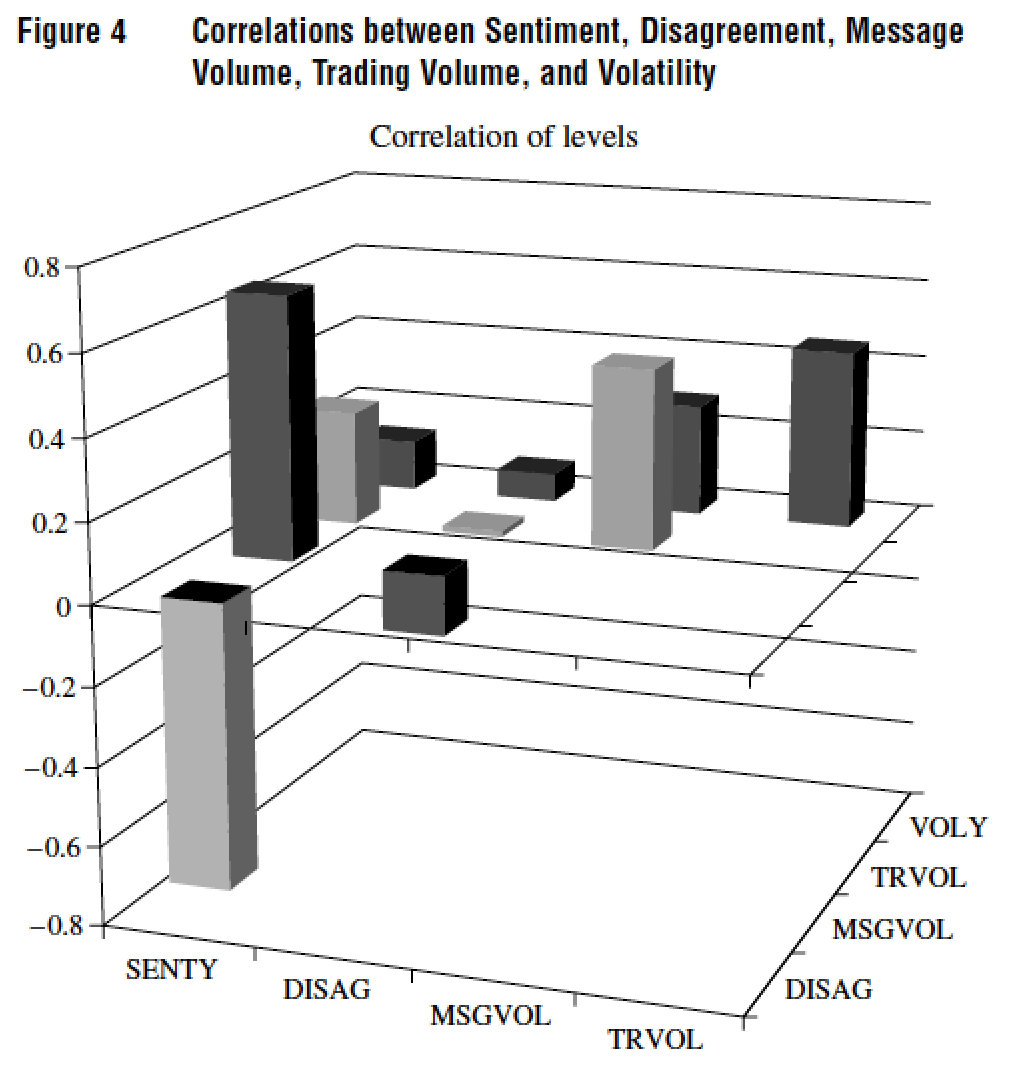

## </document>7.10 The Response to News

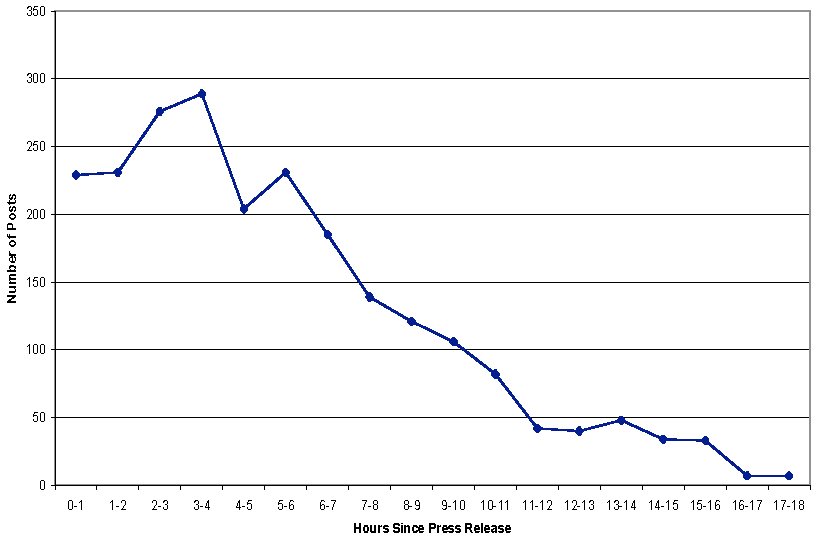

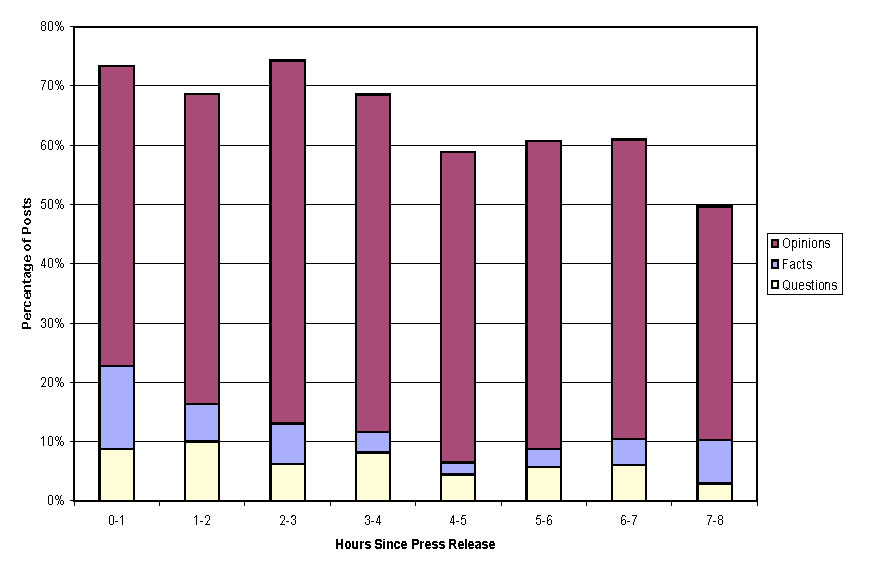

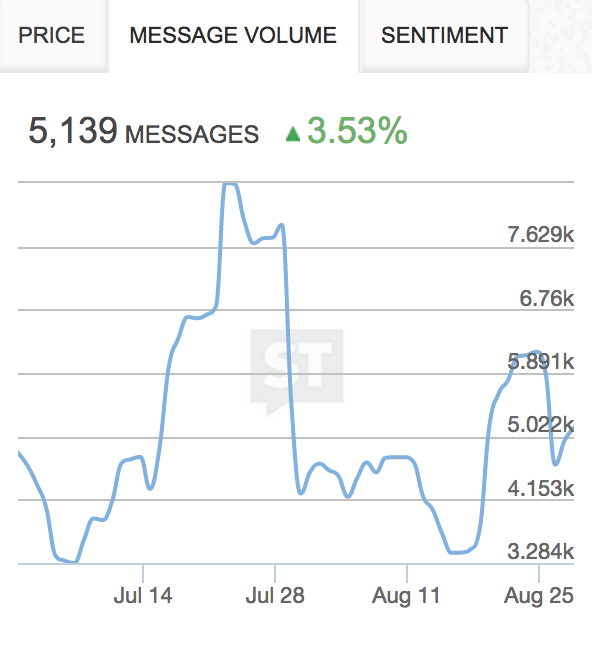

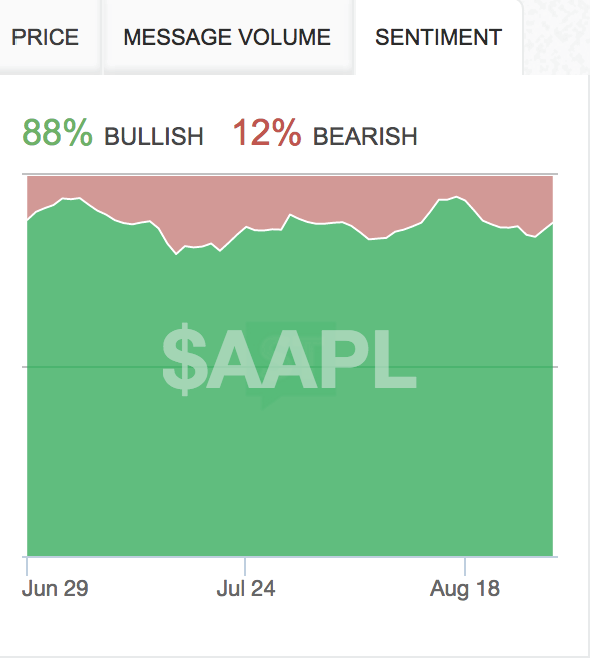

7.10.1 Das, Martinez-Jerez, and Tufano (FM 2005)

7.10.2 Breakdown of News Flow

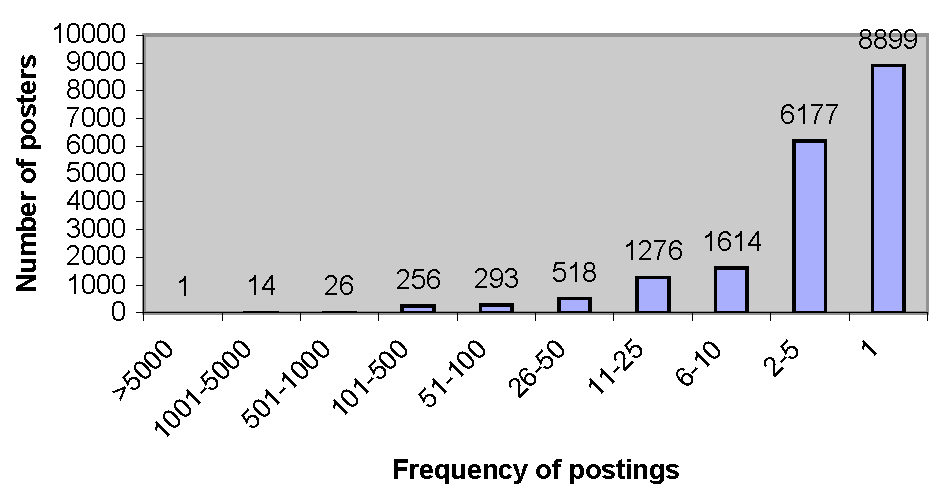

7.10.3 Frequency of Postings

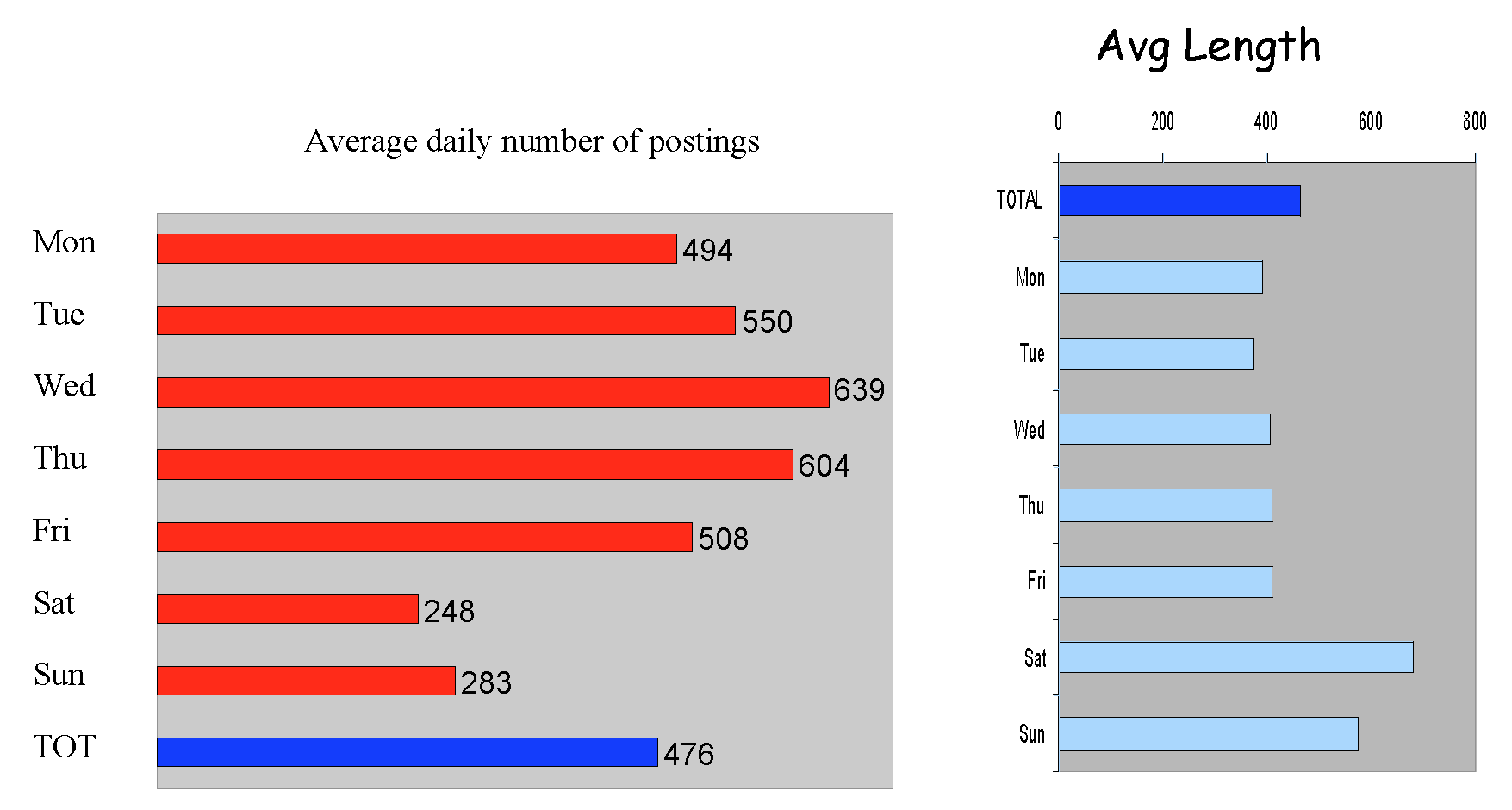

7.10.4 Weekly Posting

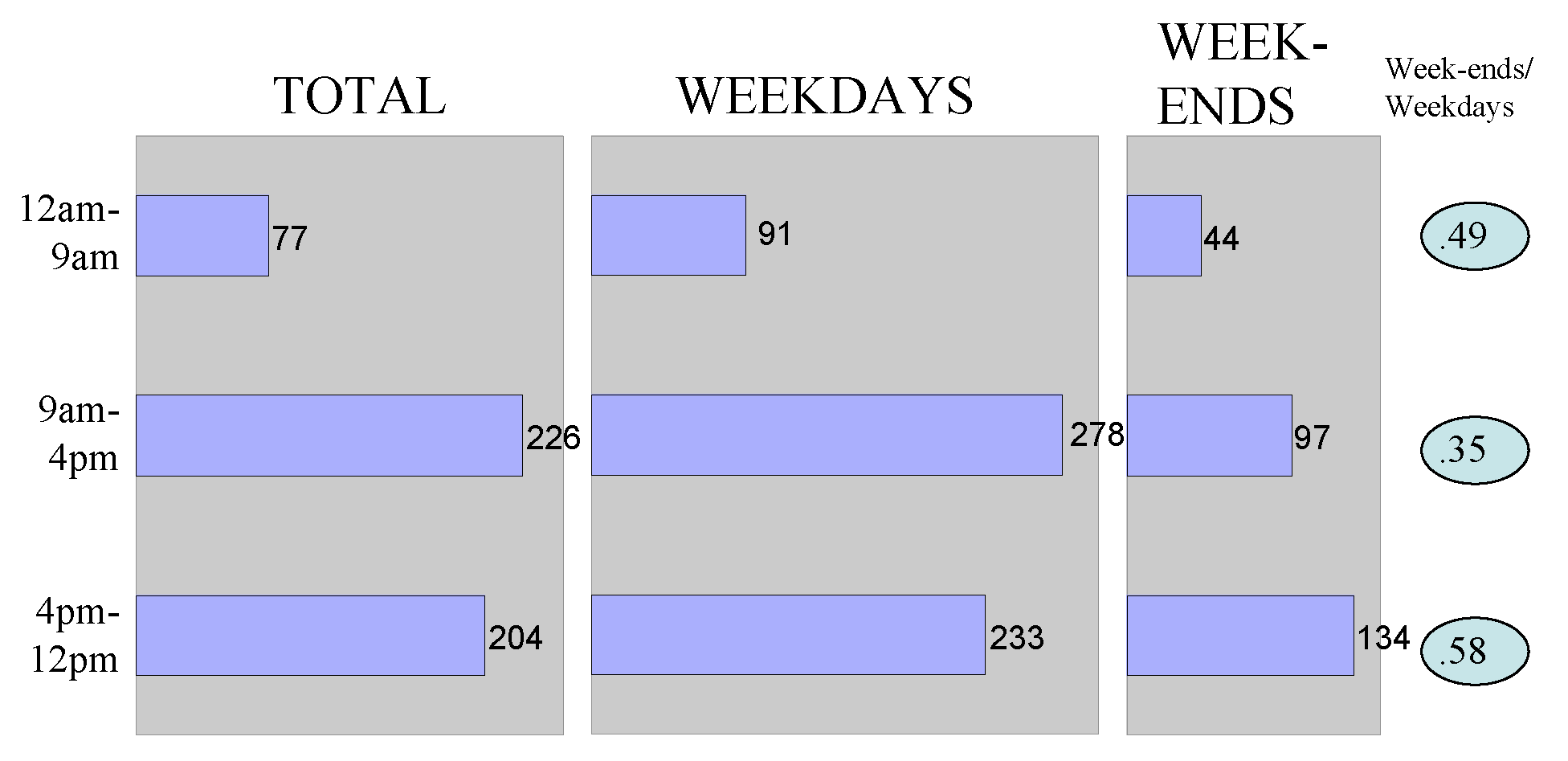

7.10.5 Intraday Posting

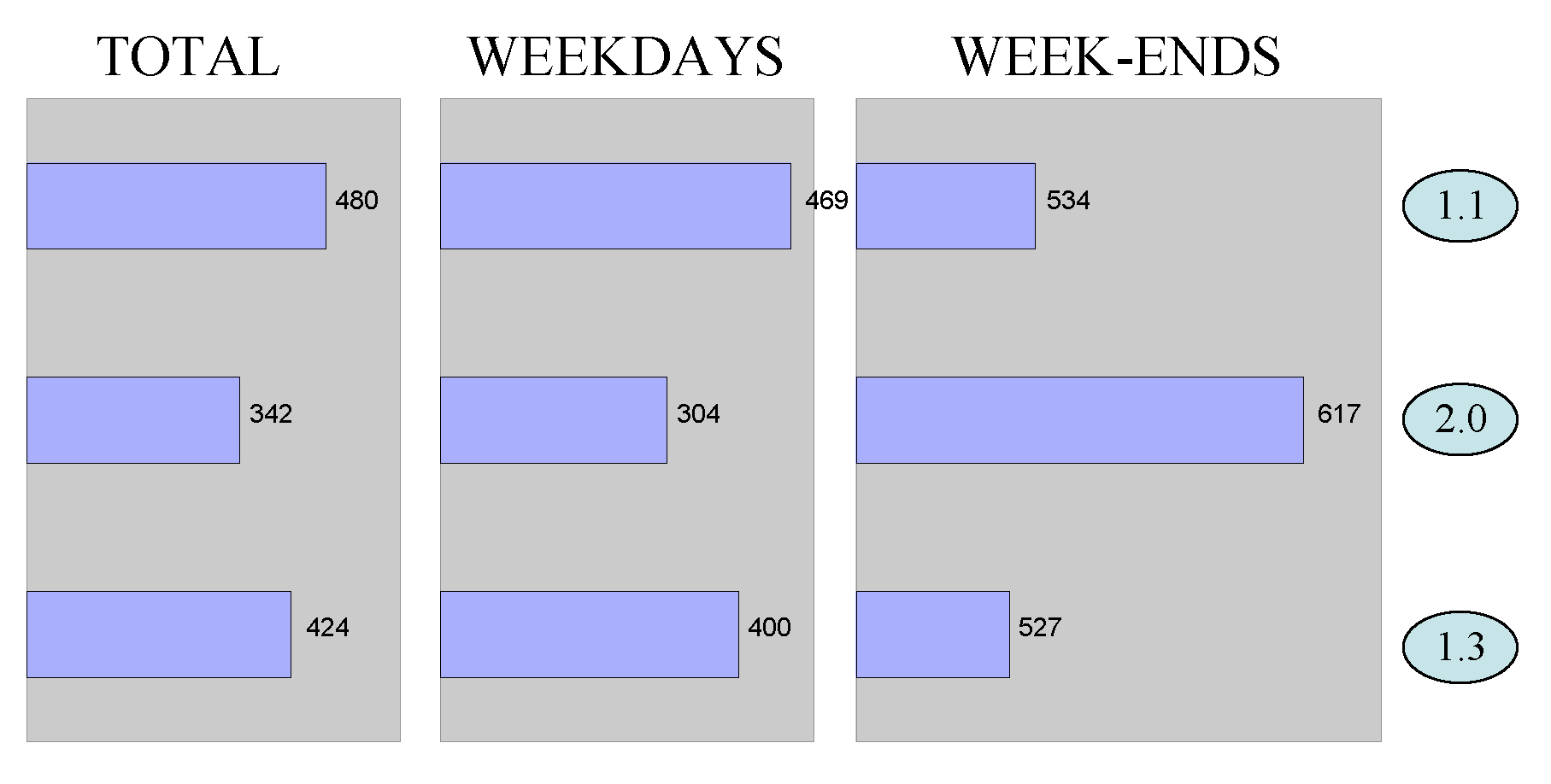

7.10.6 Number of Characters per Posting

7.11 Text Handling

First, let’s read in a simple web page (my landing page)

text = readLines("http://srdas.github.io/")

print(text[1:4])## [1] "<html>"

## [2] ""

## [3] "<head>"

## [4] "<title>SCU Web Page of Sanjiv Ranjan Das</title>"print(length(text))## [1] 367.11.1 String Detection

String handling is a basic need, so we use the stringr package.

#EXTRACTING SUBSTRINGS (take some time to look at

#the "stringr" package also)

library(stringr)

substr(text[4],24,29)## [1] "Sanjiv"#IF YOU WANT TO LOCATE A STRING

res = regexpr("Sanjiv",text[4])

print(res)## [1] 24

## attr(,"match.length")

## [1] 6

## attr(,"useBytes")

## [1] TRUEprint(substr(text[4],res[1],res[1]+nchar("Sanjiv")-1))## [1] "Sanjiv"#ANOTHER WAY

res = str_locate(text[4],"Sanjiv")

print(res)## start end

## [1,] 24 29print(substr(text[4],res[1],res[2]))## [1] "Sanjiv"7.11.2 Cleaning Text

Now we look at using regular expressions with the grep command to clean out text. I will read in my research page to process this. Here we are undertaking a “ruthless” cleanup.

#SIMPLE TEXT HANDLING

text = readLines("http://srdas.github.io/research.htm")

print(length(text))## [1] 845#print(text)

text = text[setdiff(seq(1,length(text)),grep("<",text))]

text = text[setdiff(seq(1,length(text)),grep(">",text))]

text = text[setdiff(seq(1,length(text)),grep("]",text))]

text = text[setdiff(seq(1,length(text)),grep("}",text))]

text = text[setdiff(seq(1,length(text)),grep("_",text))]

text = text[setdiff(seq(1,length(text)),grep("\\/",text))]

print(length(text))## [1] 350#print(text)

text = str_replace_all(text,"[\"]","")

idx = which(nchar(text)==0)

research = text[setdiff(seq(1,length(text)),idx)]

print(research)## [1] "Data Science: Theories, Models, Algorithms, and Analytics (web book -- work in progress)"

## [2] "Derivatives: Principles and Practice (2010),"

## [3] "(Rangarajan Sundaram and Sanjiv Das), McGraw Hill."

## [4] "An Index-Based Measure of Liquidity,'' (with George Chacko and Rong Fan), (2016)."

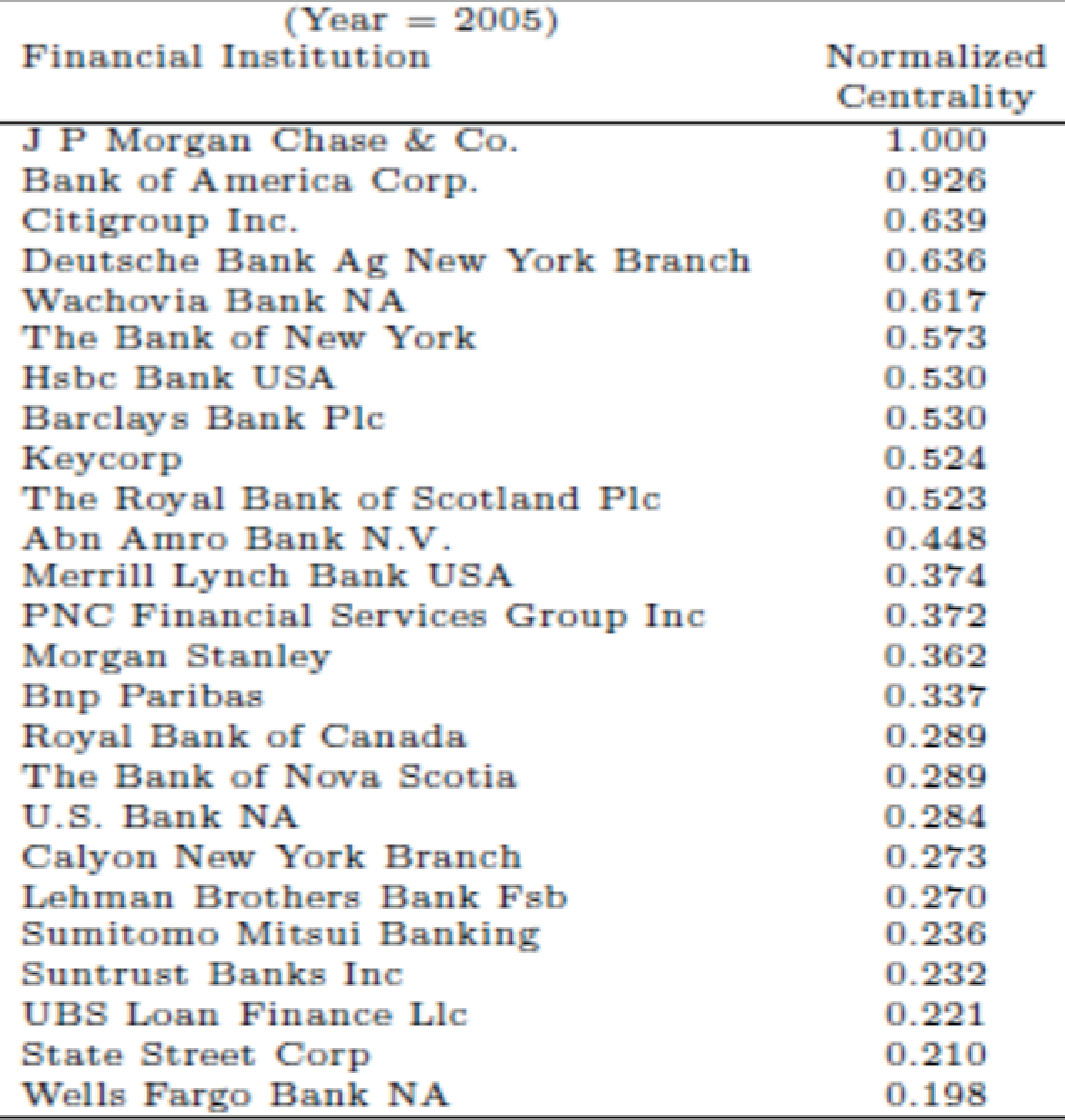

## [5] "Matrix Metrics: Network-Based Systemic Risk Scoring, (2016)."

## [6] "of systemic risk. This paper won the First Prize in the MIT-CFP competition 2016 for "

## [7] "the best paper on SIFIs (systemically important financial institutions). "

## [8] "It also won the best paper award at "

## [9] "Credit Spreads with Dynamic Debt (with Seoyoung Kim), (2015), "

## [10] "Text and Context: Language Analytics for Finance, (2014),"

## [11] "Strategic Loan Modification: An Options-Based Response to Strategic Default,"

## [12] "Options and Structured Products in Behavioral Portfolios, (with Meir Statman), (2013), "

## [13] "and barrier range notes, in the presence of fat-tailed outcomes using copulas."

## [14] "Polishing Diamonds in the Rough: The Sources of Syndicated Venture Performance, (2011), (with Hoje Jo and Yongtae Kim), "

## [15] "Optimization with Mental Accounts, (2010), (with Harry Markowitz, Jonathan"

## [16] "Accounting-based versus market-based cross-sectional models of CDS spreads, "

## [17] "(with Paul Hanouna and Atulya Sarin), (2009), "

## [18] "Hedging Credit: Equity Liquidity Matters, (with Paul Hanouna), (2009),"

## [19] "An Integrated Model for Hybrid Securities,"

## [20] "Yahoo for Amazon! Sentiment Extraction from Small Talk on the Web,"

## [21] "Common Failings: How Corporate Defaults are Correlated "

## [22] "(with Darrell Duffie, Nikunj Kapadia and Leandro Saita)."

## [23] "A Clinical Study of Investor Discussion and Sentiment, "

## [24] "(with Asis Martinez-Jerez and Peter Tufano), 2005, "

## [25] "International Portfolio Choice with Systemic Risk,"

## [26] "The loss resulting from diminished diversification is small, while"

## [27] "Speech: Signaling, Risk-sharing and the Impact of Fee Structures on"

## [28] "investor welfare. Contrary to regulatory intuition, incentive structures"

## [29] "A Discrete-Time Approach to No-arbitrage Pricing of Credit derivatives"

## [30] "with Rating Transitions, (with Viral Acharya and Rangarajan Sundaram),"

## [31] "Pricing Interest Rate Derivatives: A General Approach,''(with George Chacko),"

## [32] "A Discrete-Time Approach to Arbitrage-Free Pricing of Credit Derivatives,'' "

## [33] "The Psychology of Financial Decision Making: A Case"

## [34] "for Theory-Driven Experimental Enquiry,''"

## [35] "1999, (with Priya Raghubir),"

## [36] "Of Smiles and Smirks: A Term Structure Perspective,''"

## [37] "A Theory of Banking Structure, 1999, (with Ashish Nanda),"

## [38] "by function based upon two dimensions: the degree of information asymmetry "

## [39] "A Theory of Optimal Timing and Selectivity,'' "

## [40] "A Direct Discrete-Time Approach to"

## [41] "Poisson-Gaussian Bond Option Pricing in the Heath-Jarrow-Morton "

## [42] "The Central Tendency: A Second Factor in"

## [43] "Bond Yields, 1998, (with Silverio Foresi and Pierluigi Balduzzi), "

## [44] "Efficiency with Costly Information: A Reinterpretation of"

## [45] "Evidence from Managed Portfolios, (with Edwin Elton, Martin Gruber and Matt "

## [46] "Presented and Reprinted in the Proceedings of The "

## [47] "Seminar on the Analysis of Security Prices at the Center "

## [48] "for Research in Security Prices at the University of "

## [49] "Managing Rollover Risk with Capital Structure Covenants"

## [50] "in Structured Finance Vehicles (2016),"

## [51] "The Design and Risk Management of Structured Finance Vehicles (2016),"

## [52] "Post the recent subprime financial crisis, we inform the creation of safer SIVs "

## [53] "in structured finance, and propose avenues of mitigating risks faced by senior debt through "

## [54] "Coming up Short: Managing Underfunded Portfolios in an LDI-ES Framework (2014), "

## [55] "(with Seoyoung Kim and Meir Statman), "

## [56] "Going for Broke: Restructuring Distressed Debt Portfolios (2014),"

## [57] "Digital Portfolios. (2013), "

## [58] "Options on Portfolios with Higher-Order Moments, (2009),"

## [59] "options on a multivariate system of assets, calibrated to the return "

## [60] "Dealing with Dimension: Option Pricing on Factor Trees, (2009),"

## [61] "you to price options on multiple assets in a unified fraamework. Computational"

## [62] "Modeling"

## [63] "Correlated Default with a Forest of Binomial Trees, (2007), (with"

## [64] "Basel II: Correlation Related Issues (2007), "

## [65] "Correlated Default Risk, (2006),"

## [66] "(with Laurence Freed, Gary Geng, and Nikunj Kapadia),"

## [67] "increase as markets worsen. Regime switching models are needed to explain dynamic"

## [68] "A Simple Model for Pricing Equity Options with Markov"

## [69] "Switching State Variables (2006),"

## [70] "(with Donald Aingworth and Rajeev Motwani),"

## [71] "The Firm's Management of Social Interactions, (2005)"

## [72] "(with D. Godes, D. Mayzlin, Y. Chen, S. Das, C. Dellarocas, "

## [73] "B. Pfeieffer, B. Libai, S. Sen, M. Shi, and P. Verlegh). "

## [74] "Financial Communities (with Jacob Sisk), 2005, "

## [75] "Summer, 112-123."

## [76] "Monte Carlo Markov Chain Methods for Derivative Pricing"

## [77] "and Risk Assessment,(with Alistair Sinclair), 2005, "

## [78] "where incomplete information about the value of an asset may be exploited to "

## [79] "undertake fast and accurate pricing. Proof that a fully polynomial randomized "

## [80] "Correlated Default Processes: A Criterion-Based Copula Approach,"

## [81] "Special Issue on Default Risk. "

## [82] "Private Equity Returns: An Empirical Examination of the Exit of"

## [83] "Venture-Backed Companies, (with Murali Jagannathan and Atulya Sarin),"

## [84] "firm being financed, the valuation at the time of financing, and the prevailing market"

## [85] "sentiment. Helps understand the risk premium required for the"

## [86] "Issue on Computational Methods in Economics and Finance), "

## [87] "December, 55-69."

## [88] "Bayesian Migration in Credit Ratings Based on Probabilities of"

## [89] "The Impact of Correlated Default Risk on Credit Portfolios,"

## [90] "(with Gifford Fong, and Gary Geng),"

## [91] "How Diversified are Internationally Diversified Portfolios:"

## [92] "Time-Variation in the Covariances between International Returns,"

## [93] "Discrete-Time Bond and Option Pricing for Jump-Diffusion"

## [94] "Macroeconomic Implications of Search Theory for the Labor Market,"

## [95] "Auction Theory: A Summary with Applications and Evidence"

## [96] "from the Treasury Markets, 1996, (with Rangarajan Sundaram),"

## [97] "A Simple Approach to Three Factor Affine Models of the"

## [98] "Term Structure, (with Pierluigi Balduzzi, Silverio Foresi and Rangarajan"

## [99] "Analytical Approximations of the Term Structure"

## [100] "for Jump-diffusion Processes: A Numerical Analysis, 1996, "

## [101] "Markov Chain Term Structure Models: Extensions and Applications,"

## [102] "Exact Solutions for Bond and Options Prices"

## [103] "with Systematic Jump Risk, 1996, (with Silverio Foresi),"

## [104] "Pricing Credit Sensitive Debt when Interest Rates, Credit Ratings"

## [105] "and Credit Spreads are Stochastic, 1996, "

## [106] "v5(2), 161-198."

## [107] "Did CDS Trading Improve the Market for Corporate Bonds, (2016), "

## [108] "(with Madhu Kalimipalli and Subhankar Nayak), "

## [109] "Big Data's Big Muscle, (2016), "

## [110] "Portfolios for Investors Who Want to Reach Their Goals While Staying on the Mean-Variance Efficient Frontier, (2011), "

## [111] "(with Harry Markowitz, Jonathan Scheid, and Meir Statman), "

## [112] "News Analytics: Framework, Techniques and Metrics, The Handbook of News Analytics in Finance, May 2011, John Wiley & Sons, U.K. "

## [113] "Random Lattices for Option Pricing Problems in Finance, (2011),"

## [114] "Implementing Option Pricing Models using Python and Cython, (2010),"

## [115] "The Finance Web: Internet Information and Markets, (2010), "

## [116] "Financial Applications with Parallel R, (2009), "

## [117] "Recovery Swaps, (2009), (with Paul Hanouna), "

## [118] "Recovery Rates, (2009),(with Paul Hanouna), "

## [119] "``A Simple Model for Pricing Securities with a Debt-Equity Linkage,'' 2008, in "

## [120] "Credit Default Swap Spreads, 2006, (with Paul Hanouna), "

## [121] "Multiple-Core Processors for Finance Applications, 2006, "

## [122] "Power Laws, 2005, (with Jacob Sisk), "

## [123] "Genetic Algorithms, 2005,"

## [124] "Recovery Risk, 2005,"

## [125] "Venture Capital Syndication, (with Hoje Jo and Yongtae Kim), 2004"

## [126] "Technical Analysis, (with David Tien), 2004"

## [127] "Liquidity and the Bond Markets, (with Jan Ericsson and "

## [128] "Madhu Kalimipalli), 2003,"

## [129] "Modern Pricing of Interest Rate Derivatives - Book Review, "

## [130] "Contagion, 2003,"

## [131] "Hedge Funds, 2003,"

## [132] "Reprinted in "

## [133] "Working Papers on Hedge Funds, in The World of Hedge Funds: "

## [134] "Characteristics and "

## [135] "Analysis, 2005, World Scientific."

## [136] "The Internet and Investors, 2003,"

## [137] " Useful things to know about Correlated Default Risk,"

## [138] "(with Gifford Fong, Laurence Freed, Gary Geng, and Nikunj Kapadia),"

## [139] "The Regulation of Fee Structures in Mutual Funds: A Theoretical Analysis,'' "

## [140] "(with Rangarajan Sundaram), 1998, NBER WP No 6639, in the"

## [141] "Courant Institute of Mathematical Sciences, special volume on"

## [142] "A Discrete-Time Approach to Arbitrage-Free Pricing of Credit Derivatives,'' "

## [143] "(with Rangarajan Sundaram), reprinted in "

## [144] "the Courant Institute of Mathematical Sciences, special volume on"

## [145] "Stochastic Mean Models of the Term Structure,''"

## [146] "(with Pierluigi Balduzzi, Silverio Foresi and Rangarajan Sundaram), "

## [147] "John Wiley & Sons, Inc., 128-161."

## [148] "Interest Rate Modeling with Jump-Diffusion Processes,'' "

## [149] "John Wiley & Sons, Inc., 162-189."

## [150] "Comments on 'Pricing Excess-of-Loss Reinsurance Contracts against"

## [151] "Catastrophic Loss,' by J. David Cummins, C. Lewis, and Richard Phillips,"

## [152] "Froot (Ed.), University of Chicago Press, 1999, 141-145."

## [153] " Pricing Credit Derivatives,'' "

## [154] "J. Frost and J.G. Whittaker, 101-138."

## [155] "On the Recursive Implementation of Term Structure Models,'' "

## [156] "Zero-Revelation RegTech: Detecting Risk through"

## [157] "Linguistic Analysis of Corporate Emails and News "

## [158] "(with Seoyoung Kim and Bhushan Kothari)."

## [159] "Summary for the Columbia Law School blog: "

## [160] " "

## [161] "Dynamic Risk Networks: A Note "

## [162] "(with Seoyoung Kim and Dan Ostrov)."

## [163] "Research Challenges in Financial Data Modeling and Analysis "

## [164] "(with Lewis Alexander, Zachary Ives, H.V. Jagadish, and Claire Monteleoni)."

## [165] "Local Volatility and the Recovery Rate of Credit Default Swaps "

## [166] "(with Jeroen Jansen and Frank Fabozzi)."

## [167] "Efficient Rebalancing of Taxable Portfolios (with Dan Ostrov, Dennis Ding, Vincent Newell), "

## [168] "The Fast and the Curious: VC Drift "

## [169] "(with Amit Bubna and Paul Hanouna), "

## [170] "Venture Capital Communities (with Amit Bubna and Nagpurnanand Prabhala), "

## [171] " "Take a look at the text now to see how cleaned up it is. But there is a better way, i.e., use the text-mining package tm.

7.12 Package tm

The R programming language supports a text-mining package, succinctly named {tm}. Using functions such as {readDOC()}, {readPDF()}, etc., for reading DOC and PDF files, the package makes accessing various file formats easy.

Text mining involves applying functions to many text documents. A library of text documents (irrespective of format) is called a corpus. The essential and highly useful feature of text mining packages is the ability to operate on the entire set of documents at one go.

library(tm)## Loading required package: NLPtext = c("INTL is expected to announce good earnings report", "AAPL first quarter disappoints","GOOG announces new wallet", "YHOO ascends from old ways")

text_corpus = Corpus(VectorSource(text))

print(text_corpus)## <<VCorpus>>

## Metadata: corpus specific: 0, document level (indexed): 0

## Content: documents: 4writeCorpus(text_corpus)The writeCorpus() function in tm creates separate text files on the hard drive, and by default are names 1.txt, 2.txt, etc. The simple program code above shows how text scraped off a web page and collapsed into a single character string for each document, may then be converted into a corpus of documents using the Corpus() function.

It is easy to inspect the corpus as follows:

inspect(text_corpus)## <<VCorpus>>

## Metadata: corpus specific: 0, document level (indexed): 0

## Content: documents: 4

##

## [[1]]

## <<PlainTextDocument>>

## Metadata: 7

## Content: chars: 49

##

## [[2]]

## <<PlainTextDocument>>

## Metadata: 7

## Content: chars: 30

##

## [[3]]

## <<PlainTextDocument>>

## Metadata: 7

## Content: chars: 25

##

## [[4]]

## <<PlainTextDocument>>

## Metadata: 7

## Content: chars: 267.12.1 A second example

Here we use lapply to inspect the contents of the corpus.

#USING THE tm PACKAGE

library(tm)

text = c("Doc1;","This is doc2 --", "And, then Doc3.")

ctext = Corpus(VectorSource(text))

ctext## <<VCorpus>>

## Metadata: corpus specific: 0, document level (indexed): 0

## Content: documents: 3#writeCorpus(ctext)

#THE CORPUS IS A LIST OBJECT in R of type VCorpus or Corpus

inspect(ctext)## <<VCorpus>>

## Metadata: corpus specific: 0, document level (indexed): 0

## Content: documents: 3

##

## [[1]]

## <<PlainTextDocument>>

## Metadata: 7

## Content: chars: 5

##

## [[2]]

## <<PlainTextDocument>>

## Metadata: 7

## Content: chars: 15

##

## [[3]]

## <<PlainTextDocument>>

## Metadata: 7

## Content: chars: 15print(as.character(ctext[[1]]))## [1] "Doc1;"print(lapply(ctext[1:2],as.character))## $`1`

## [1] "Doc1;"

##

## $`2`

## [1] "This is doc2 --"ctext = tm_map(ctext,tolower) #Lower case all text in all docs

inspect(ctext)## <<VCorpus>>

## Metadata: corpus specific: 0, document level (indexed): 0

## Content: documents: 3

##

## [[1]]

## [1] doc1;

##

## [[2]]

## [1] this is doc2 --

##

## [[3]]

## [1] and, then doc3.ctext2 = tm_map(ctext,toupper)

inspect(ctext2)## <<VCorpus>>

## Metadata: corpus specific: 0, document level (indexed): 0

## Content: documents: 3

##

## [[1]]

## [1] DOC1;

##

## [[2]]

## [1] THIS IS DOC2 --

##

## [[3]]

## [1] AND, THEN DOC3.7.12.2 Function tm_map

- The tm_map function is very useful for cleaning up the documents. We may want to remove some words.

- We may also remove stopwords, punctuation, numbers, etc.

#FIRST CURATE TO UPPER CASE

dropWords = c("IS","AND","THEN")

ctext2 = tm_map(ctext2,removeWords,dropWords)

inspect(ctext2)## <<VCorpus>>

## Metadata: corpus specific: 0, document level (indexed): 0

## Content: documents: 3

##

## [[1]]

## [1] DOC1;

##

## [[2]]

## [1] THIS DOC2 --

##

## [[3]]

## [1] , DOC3.ctext = Corpus(VectorSource(text))

temp = ctext

print(lapply(temp,as.character))## $`1`

## [1] "Doc1;"

##

## $`2`

## [1] "This is doc2 --"

##

## $`3`

## [1] "And, then Doc3."temp = tm_map(temp,removeWords,stopwords("english"))

print(lapply(temp,as.character))## $`1`

## [1] "Doc1;"

##

## $`2`

## [1] "This doc2 --"

##

## $`3`

## [1] "And, Doc3."temp = tm_map(temp,removePunctuation)

print(lapply(temp,as.character))## $`1`

## [1] "Doc1"

##

## $`2`

## [1] "This doc2 "

##

## $`3`

## [1] "And Doc3"temp = tm_map(temp,removeNumbers)

print(lapply(temp,as.character))## $`1`

## [1] "Doc"

##

## $`2`

## [1] "This doc "

##

## $`3`

## [1] "And Doc"7.12.3 Bag of Words

We can create a bag of words by collapsing all the text into one bundle.

#CONVERT CORPUS INTO ARRAY OF STRINGS AND FLATTEN

txt = NULL

for (j in 1:length(temp)) {

txt = c(txt,temp[[j]]$content)

}

txt = paste(txt,collapse=" ")

txt = tolower(txt)

print(txt)## [1] "doc this doc and doc"7.12.4 Example (on my bio page)

Now we will do a full pass through of this on my bio.

text = readLines("http://srdas.github.io/bio-candid.html")

ctext = Corpus(VectorSource(text))

ctext## <<VCorpus>>

## Metadata: corpus specific: 0, document level (indexed): 0

## Content: documents: 80#Print a few lines

print(lapply(ctext, as.character)[10:15])## $`10`

## [1] "B.Com in Accounting and Economics (University of Bombay, Sydenham"

##

## $`11`

## [1] "College), and is also a qualified Cost and Works Accountant"

##

## $`12`

## [1] "(AICWA). He is a senior editor of The Journal of Investment"

##

## $`13`

## [1] "Management, co-editor of The Journal of Derivatives and The Journal of"

##

## $`14`

## [1] "Financial Services Research, and Associate Editor of other academic"

##

## $`15`

## [1] "journals. Prior to being an academic, he worked in the derivatives"ctext = tm_map(ctext,removePunctuation)

print(lapply(ctext, as.character)[10:15])## $`10`

## [1] "BCom in Accounting and Economics University of Bombay Sydenham"

##

## $`11`

## [1] "College and is also a qualified Cost and Works Accountant"

##

## $`12`

## [1] "AICWA He is a senior editor of The Journal of Investment"

##

## $`13`

## [1] "Management coeditor of The Journal of Derivatives and The Journal of"

##

## $`14`

## [1] "Financial Services Research and Associate Editor of other academic"

##

## $`15`

## [1] "journals Prior to being an academic he worked in the derivatives"txt = NULL

for (j in 1:length(ctext)) {

txt = c(txt,ctext[[j]]$content)

}

txt = paste(txt,collapse=" ")

txt = tolower(txt)