27. Text Classification with AutoGluon

https://auto.gluon.ai/stable/index.html

AutoGluon is an open-source library from AWS that automates multimodal machine learning. It relies on stack ensembling, and its authors report that it outperformed most participants in the Kaggle competitions they benchmarked. See the papers listed in the GitHub repo: awslabs/autogluon
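To make the idea of stack ensembling concrete, here is a toy sketch in plain Python. It is not AutoGluon's implementation: the `ThresholdModel` base learner, the `PatternVoteMeta` meta-learner, and the synthetic data are all hypothetical names and choices made for illustration. It shows only the general pattern, in which base models produce out-of-fold predictions and a second-level model is trained on those predictions.

```python
import random
from collections import Counter, defaultdict
from statistics import mean


class ThresholdModel:
    """Toy base learner: splits one feature at the midpoint of the class means."""

    def __init__(self, feature):
        self.feature = feature

    def fit(self, X, y):
        pos = mean(row[self.feature] for row, label in zip(X, y) if label == 1)
        neg = mean(row[self.feature] for row, label in zip(X, y) if label == 0)
        self.threshold = (pos + neg) / 2
        self.direction = 1 if pos >= neg else -1
        return self

    def predict(self, X):
        return [int(self.direction * (row[self.feature] - self.threshold) > 0)
                for row in X]


class PatternVoteMeta:
    """Toy meta-learner: majority label for each pattern of base predictions."""

    def fit(self, Z, y):
        votes = defaultdict(Counter)
        for pattern, label in zip(Z, y):
            votes[tuple(pattern)][label] += 1
        self.table = {p: c.most_common(1)[0][0] for p, c in votes.items()}
        self.default = Counter(y).most_common(1)[0][0]
        return self

    def predict(self, Z):
        return [self.table.get(tuple(pattern), self.default) for pattern in Z]


def make_base_models():
    # One toy model per feature (an illustrative choice).
    return [ThresholdModel(0), ThresholdModel(1)]


def fit_stack(X, y, n_folds=5):
    """Fit base models, then a meta-learner on their out-of-fold predictions."""
    n = len(X)
    oof = [[None] * len(make_base_models()) for _ in range(n)]
    for fold in range(n_folds):
        train_idx = [i for i in range(n) if i % n_folds != fold]
        held_idx = [i for i in range(n) if i % n_folds == fold]
        X_train = [X[i] for i in train_idx]
        y_train = [y[i] for i in train_idx]
        for j, model in enumerate(make_base_models()):
            preds = model.fit(X_train, y_train).predict([X[i] for i in held_idx])
            for i, p in zip(held_idx, preds):
                oof[i][j] = p  # prediction from a model that never saw row i
    meta = PatternVoteMeta().fit(oof, y)
    base_models = [m.fit(X, y) for m in make_base_models()]  # refit on all rows
    return base_models, meta


def predict_stack(base_models, meta, X):
    base_preds = [model.predict(X) for model in base_models]
    return meta.predict(list(zip(*base_preds)))


# Synthetic two-class data with alternating labels, so every fold has both classes.
rng = random.Random(0)
y = [i % 2 for i in range(200)]
X = [[label + rng.gauss(0, 0.7), label + rng.gauss(0, 0.7)] for label in y]

base_models, meta = fit_stack(X, y)
stacked = predict_stack(base_models, meta, X)
accuracy = sum(p == t for p, t in zip(stacked, y)) / len(y)
print(f"training accuracy of the stacked toy model: {accuracy:.2f}")
```

The out-of-fold step matters because the meta-learner should see base predictions of the kind it will receive at inference time, that is, predictions on rows the base models were not trained on.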

from google.colab import drive

drive.mount('/content/drive') # Add My Drive/<>

import os

os.chdir('drive/My Drive')

os.chdir('Books_Writings/NLPBook/')

Mounted at /content/drive
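The cell above only runs inside Google Colab. If you want the same notebook to run elsewhere, one option is to guard the Colab-specific steps, as in the sketch below. The `in_colab` helper and the fallback to the current working directory are assumptions added for illustration; the absolute path simply combines the two `os.chdir` calls above, assuming the notebook starts in `/content`.

```python
import os


def in_colab():
    """Return True when the google.colab package can be imported."""
    try:
        import google.colab  # noqa: F401  (only available inside Colab)
    except ImportError:
        return False
    return True


if in_colab():
    from google.colab import drive

    drive.mount('/content/drive')
    # Assumed absolute form of the two relative chdir calls in the cell above.
    project_dir = '/content/drive/My Drive/Books_Writings/NLPBook/'
    os.chdir(project_dir)
else:
    # Hypothetical fallback: outside Colab, stay in the current directory.
    project_dir = os.getcwd()

print(f"Working in: {project_dir}")
```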

%%capture

# %pylab inline

import pandas as pd

import os

import matplotlib

import matplotlib.pyplot as plt

import seaborn as sns

27.1. Use AutoGluon Tabular on News Dataset

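AutoGluon runs on top of Meta’s PyTorch framework, so PyTorch has to be installed along with it. The cell below installs AutoGluon and lets pip pull the CPU build of PyTorch from the PyTorch wheel index; the commented-out lines show a two-step alternative that installs PyTorch first using uv. This is an extensive installation and will take some time.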

AutoGluon installation instructions: https://auto.gluon.ai/stable/install.html
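Once the installation cell that follows has finished, it can be useful to confirm which versions were actually installed. The sketch below uses only the standard library's `importlib.metadata`; the `installed_versions` helper and the list of package names checked are illustrative choices, not part of AutoGluon.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Package names chosen for illustration; adjust to what you need to verify.
report = installed_versions(["autogluon", "torch", "torchvision"])
for name, version in report.items():
    print(f"{name}: {version or 'not installed'}")
```

If a package shows as not installed, or a fresh install is not picked up on import, restarting the runtime and re-running the check is usually the first thing to try.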

%%time

!pip install -U pip

!pip install -U setuptools wheel

# !pip install -U uv

# CPU version of pytorch has smaller footprint - see installation instructions in

# pytorch documentation - https://pytorch.org/get-started/locally/

# !uv pip install torch==2.3.1 torchvision==0.18.1 --index-url https://download.pytorch.org/whl/cpu --system

# !uv pip install autogluon --system

# !pip install autogluon

!pip install autogluon --extra-index-url https://download.pytorch.org/whl/cpu

Requirement already satisfied: pip in /usr/local/lib/python3.12/dist-packages (24.1.2)

Collecting pip

Downloading pip-25.2-py3-none-any.whl.metadata (4.7 kB)

Downloading pip-25.2-py3-none-any.whl (1.8 MB)

Installing collected packages: pip

Attempting uninstall: pip

Found existing installation: pip 24.1.2

Uninstalling pip-24.1.2:

Successfully uninstalled pip-24.1.2

Successfully installed pip-25.2

Requirement already satisfied: setuptools in /usr/local/lib/python3.12/dist-packages (75.2.0)

Collecting setuptools

Downloading setuptools-80.9.0-py3-none-any.whl.metadata (6.6 kB)

Requirement already satisfied: wheel in /usr/local/lib/python3.12/dist-packages (0.45.1)

Downloading setuptools-80.9.0-py3-none-any.whl (1.2 MB)

Installing collected packages: setuptools

Attempting uninstall: setuptools

Found existing installation: setuptools 75.2.0

Uninstalling setuptools-75.2.0:

Successfully uninstalled setuptools-75.2.0

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

ipython 7.34.0 requires jedi>=0.16, which is not installed.

Successfully installed setuptools-80.9.0

Looking in indexes: https://pypi.org/simple, https://download.pytorch.org/whl/cpu

Collecting autogluon

Downloading autogluon-1.4.0-py3-none-any.whl.metadata (11 kB)

Collecting autogluon.core==1.4.0 (from autogluon.core[all]==1.4.0->autogluon)

Downloading autogluon.core-1.4.0-py3-none-any.whl.metadata (12 kB)

Collecting autogluon.features==1.4.0 (from autogluon)

Downloading autogluon.features-1.4.0-py3-none-any.whl.metadata (11 kB)

Collecting autogluon.tabular==1.4.0 (from autogluon.tabular[all]==1.4.0->autogluon)

Downloading autogluon.tabular-1.4.0-py3-none-any.whl.metadata (16 kB)

Collecting autogluon.multimodal==1.4.0 (from autogluon)

Downloading autogluon.multimodal-1.4.0-py3-none-any.whl.metadata (13 kB)

Collecting autogluon.timeseries==1.4.0 (from autogluon.timeseries[all]==1.4.0->autogluon)

Downloading autogluon.timeseries-1.4.0-py3-none-any.whl.metadata (12 kB)

Requirement already satisfied: numpy<2.4.0,>=1.25.0 in /usr/local/lib/python3.12/dist-packages (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (2.0.2)

Requirement already satisfied: scipy<1.17,>=1.5.4 in /usr/local/lib/python3.12/dist-packages (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (1.16.2)

Requirement already satisfied: scikit-learn<1.8.0,>=1.4.0 in /usr/local/lib/python3.12/dist-packages (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (1.6.1)

Requirement already satisfied: networkx<4,>=3.0 in /usr/local/lib/python3.12/dist-packages (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (3.5)

Requirement already satisfied: pandas<2.4.0,>=2.0.0 in /usr/local/lib/python3.12/dist-packages (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (2.2.2)

Requirement already satisfied: tqdm<5,>=4.38 in /usr/local/lib/python3.12/dist-packages (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (4.67.1)

Requirement already satisfied: requests in /usr/local/lib/python3.12/dist-packages (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (2.32.4)

Requirement already satisfied: matplotlib<3.11,>=3.7.0 in /usr/local/lib/python3.12/dist-packages (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (3.10.0)

Collecting boto3<2,>=1.10 (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon)

Downloading boto3-1.40.44-py3-none-any.whl.metadata (6.7 kB)

Collecting autogluon.common==1.4.0 (from autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon)

Downloading autogluon.common-1.4.0-py3-none-any.whl.metadata (11 kB)

Requirement already satisfied: pyarrow<21.0.0,>=7.0.0 in /usr/local/lib/python3.12/dist-packages (from autogluon.common==1.4.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (18.1.0)

Requirement already satisfied: psutil<7.1.0,>=5.7.3 in /usr/local/lib/python3.12/dist-packages (from autogluon.common==1.4.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (5.9.5)

Requirement already satisfied: joblib<1.7,>=1.2 in /usr/local/lib/python3.12/dist-packages (from autogluon.common==1.4.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (1.5.2)

Requirement already satisfied: hyperopt<0.2.8,>=0.2.7 in /usr/local/lib/python3.12/dist-packages (from autogluon.core[all]==1.4.0->autogluon) (0.2.7)

Collecting ray<2.45,>=2.10.0 (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading ray-2.44.1-cp312-cp312-manylinux2014_x86_64.whl.metadata (19 kB)

Requirement already satisfied: Pillow<12,>=10.0.1 in /usr/local/lib/python3.12/dist-packages (from autogluon.multimodal==1.4.0->autogluon) (11.3.0)

Collecting torch<2.8,>=2.2 (from autogluon.multimodal==1.4.0->autogluon)

Downloading https://download.pytorch.org/whl/cpu/torch-2.7.1%2Bcpu-cp312-cp312-manylinux_2_28_x86_64.whl.metadata (27 kB)

Collecting lightning<2.8,>=2.2 (from autogluon.multimodal==1.4.0->autogluon)

Downloading lightning-2.5.5-py3-none-any.whl.metadata (39 kB)

Collecting transformers<4.50,>=4.38.0 (from transformers[sentencepiece]<4.50,>=4.38.0->autogluon.multimodal==1.4.0->autogluon)

Downloading transformers-4.49.0-py3-none-any.whl.metadata (44 kB)

Requirement already satisfied: accelerate<2.0,>=0.34.0 in /usr/local/lib/python3.12/dist-packages (from autogluon.multimodal==1.4.0->autogluon) (1.10.1)

Requirement already satisfied: fsspec<=2025.3 in /usr/local/lib/python3.12/dist-packages (from fsspec[http]<=2025.3->autogluon.multimodal==1.4.0->autogluon) (2025.3.0)

Collecting jsonschema<4.24,>=4.18 (from autogluon.multimodal==1.4.0->autogluon)

Downloading jsonschema-4.23.0-py3-none-any.whl.metadata (7.9 kB)

Collecting seqeval<1.3.0,>=1.2.2 (from autogluon.multimodal==1.4.0->autogluon)

Downloading seqeval-1.2.2.tar.gz (43 kB)

Preparing metadata (setup.py) ... done

Collecting evaluate<0.5.0,>=0.4.0 (from autogluon.multimodal==1.4.0->autogluon)

Downloading evaluate-0.4.6-py3-none-any.whl.metadata (9.5 kB)

Collecting timm<1.0.7,>=0.9.5 (from autogluon.multimodal==1.4.0->autogluon)

Downloading timm-1.0.3-py3-none-any.whl.metadata (43 kB)

Collecting torchvision<0.23.0,>=0.16.0 (from autogluon.multimodal==1.4.0->autogluon)

Downloading https://download.pytorch.org/whl/cpu/torchvision-0.22.1%2Bcpu-cp312-cp312-manylinux_2_28_x86_64.whl.metadata (6.1 kB)

Requirement already satisfied: scikit-image<0.26.0,>=0.19.1 in /usr/local/lib/python3.12/dist-packages (from autogluon.multimodal==1.4.0->autogluon) (0.25.2)

Requirement already satisfied: text-unidecode<1.4,>=1.3 in /usr/local/lib/python3.12/dist-packages (from autogluon.multimodal==1.4.0->autogluon) (1.3)

Collecting torchmetrics<1.8,>=1.2.0 (from autogluon.multimodal==1.4.0->autogluon)

Downloading torchmetrics-1.7.4-py3-none-any.whl.metadata (21 kB)

Requirement already satisfied: omegaconf<2.4.0,>=2.1.1 in /usr/local/lib/python3.12/dist-packages (from autogluon.multimodal==1.4.0->autogluon) (2.3.0)

Collecting pytorch-metric-learning<2.9,>=1.3.0 (from autogluon.multimodal==1.4.0->autogluon)

Downloading pytorch_metric_learning-2.8.1-py3-none-any.whl.metadata (18 kB)

Collecting nlpaug<1.2.0,>=1.1.10 (from autogluon.multimodal==1.4.0->autogluon)

Downloading nlpaug-1.1.11-py3-none-any.whl.metadata (14 kB)

Requirement already satisfied: nltk<3.10,>=3.4.5 in /usr/local/lib/python3.12/dist-packages (from autogluon.multimodal==1.4.0->autogluon) (3.9.1)

Collecting openmim<0.4.0,>=0.3.7 (from autogluon.multimodal==1.4.0->autogluon)

Downloading openmim-0.3.9-py2.py3-none-any.whl.metadata (16 kB)

Requirement already satisfied: defusedxml<0.7.2,>=0.7.1 in /usr/local/lib/python3.12/dist-packages (from autogluon.multimodal==1.4.0->autogluon) (0.7.1)

Requirement already satisfied: jinja2<3.2,>=3.0.3 in /usr/local/lib/python3.12/dist-packages (from autogluon.multimodal==1.4.0->autogluon) (3.1.6)

Requirement already satisfied: tensorboard<3,>=2.9 in /usr/local/lib/python3.12/dist-packages (from autogluon.multimodal==1.4.0->autogluon) (2.19.0)

Collecting pytesseract<0.4,>=0.3.9 (from autogluon.multimodal==1.4.0->autogluon)

Downloading pytesseract-0.3.13-py3-none-any.whl.metadata (11 kB)

Collecting nvidia-ml-py3<8.0,>=7.352.0 (from autogluon.multimodal==1.4.0->autogluon)

Downloading nvidia-ml-py3-7.352.0.tar.gz (19 kB)

Preparing metadata (setup.py) ... done

Collecting pdf2image<1.19,>=1.17.0 (from autogluon.multimodal==1.4.0->autogluon)

Downloading pdf2image-1.17.0-py3-none-any.whl.metadata (6.2 kB)

Collecting catboost<1.3,>=1.2 (from autogluon.tabular[all]==1.4.0->autogluon)

Downloading catboost-1.2.8-cp312-cp312-manylinux2014_x86_64.whl.metadata (1.2 kB)

Requirement already satisfied: fastai<2.9,>=2.3.1 in /usr/local/lib/python3.12/dist-packages (from autogluon.tabular[all]==1.4.0->autogluon) (2.8.4)

Collecting loguru (from autogluon.tabular[all]==1.4.0->autogluon)

Downloading loguru-0.7.3-py3-none-any.whl.metadata (22 kB)

Requirement already satisfied: lightgbm<4.7,>=4.0 in /usr/local/lib/python3.12/dist-packages (from autogluon.tabular[all]==1.4.0->autogluon) (4.6.0)

Collecting einx (from autogluon.tabular[all]==1.4.0->autogluon)

Downloading einx-0.3.0-py3-none-any.whl.metadata (6.9 kB)

Requirement already satisfied: xgboost<3.1,>=2.0 in /usr/local/lib/python3.12/dist-packages (from autogluon.tabular[all]==1.4.0->autogluon) (3.0.5)

Requirement already satisfied: spacy<3.9 in /usr/local/lib/python3.12/dist-packages (from autogluon.tabular[all]==1.4.0->autogluon) (3.8.7)

Requirement already satisfied: huggingface-hub[torch] in /usr/local/lib/python3.12/dist-packages (from autogluon.tabular[all]==1.4.0->autogluon) (0.35.0)

Collecting pytorch-lightning (from autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading pytorch_lightning-2.5.5-py3-none-any.whl.metadata (20 kB)

Collecting gluonts<0.17,>=0.15.0 (from autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading gluonts-0.16.2-py3-none-any.whl.metadata (9.8 kB)

Collecting statsforecast<2.0.2,>=1.7.0 (from autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading statsforecast-2.0.1-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (29 kB)

Collecting mlforecast<0.15.0,>=0.14.0 (from autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading mlforecast-0.14.0-py3-none-any.whl.metadata (12 kB)

Collecting utilsforecast<0.2.12,>=0.2.3 (from autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading utilsforecast-0.2.11-py3-none-any.whl.metadata (7.7 kB)

Collecting coreforecast<0.0.17,>=0.0.12 (from autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading coreforecast-0.0.16-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (3.7 kB)

Collecting fugue>=0.9.0 (from autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading fugue-0.9.1-py3-none-any.whl.metadata (18 kB)

Requirement already satisfied: orjson~=3.9 in /usr/local/lib/python3.12/dist-packages (from autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (3.11.3)

Requirement already satisfied: packaging>=20.0 in /usr/local/lib/python3.12/dist-packages (from accelerate<2.0,>=0.34.0->autogluon.multimodal==1.4.0->autogluon) (25.0)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.12/dist-packages (from accelerate<2.0,>=0.34.0->autogluon.multimodal==1.4.0->autogluon) (6.0.2)

Requirement already satisfied: safetensors>=0.4.3 in /usr/local/lib/python3.12/dist-packages (from accelerate<2.0,>=0.34.0->autogluon.multimodal==1.4.0->autogluon) (0.6.2)

Collecting botocore<1.41.0,>=1.40.44 (from boto3<2,>=1.10->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon)

Downloading botocore-1.40.44-py3-none-any.whl.metadata (5.7 kB)

Collecting jmespath<2.0.0,>=0.7.1 (from boto3<2,>=1.10->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon)

Downloading jmespath-1.0.1-py3-none-any.whl.metadata (7.6 kB)

Collecting s3transfer<0.15.0,>=0.14.0 (from boto3<2,>=1.10->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon)

Downloading s3transfer-0.14.0-py3-none-any.whl.metadata (1.7 kB)

Requirement already satisfied: python-dateutil<3.0.0,>=2.1 in /usr/local/lib/python3.12/dist-packages (from botocore<1.41.0,>=1.40.44->boto3<2,>=1.10->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (2.9.0.post0)

Requirement already satisfied: urllib3!=2.2.0,<3,>=1.25.4 in /usr/local/lib/python3.12/dist-packages (from botocore<1.41.0,>=1.40.44->boto3<2,>=1.10->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (2.5.0)

Requirement already satisfied: graphviz in /usr/local/lib/python3.12/dist-packages (from catboost<1.3,>=1.2->autogluon.tabular[all]==1.4.0->autogluon) (0.21)

Requirement already satisfied: plotly in /usr/local/lib/python3.12/dist-packages (from catboost<1.3,>=1.2->autogluon.tabular[all]==1.4.0->autogluon) (5.24.1)

Requirement already satisfied: six in /usr/local/lib/python3.12/dist-packages (from catboost<1.3,>=1.2->autogluon.tabular[all]==1.4.0->autogluon) (1.17.0)

Requirement already satisfied: datasets>=2.0.0 in /usr/local/lib/python3.12/dist-packages (from evaluate<0.5.0,>=0.4.0->autogluon.multimodal==1.4.0->autogluon) (4.0.0)

Requirement already satisfied: dill in /usr/local/lib/python3.12/dist-packages (from evaluate<0.5.0,>=0.4.0->autogluon.multimodal==1.4.0->autogluon) (0.3.8)

Requirement already satisfied: xxhash in /usr/local/lib/python3.12/dist-packages (from evaluate<0.5.0,>=0.4.0->autogluon.multimodal==1.4.0->autogluon) (3.5.0)

Requirement already satisfied: multiprocess in /usr/local/lib/python3.12/dist-packages (from evaluate<0.5.0,>=0.4.0->autogluon.multimodal==1.4.0->autogluon) (0.70.16)

Requirement already satisfied: pip in /usr/local/lib/python3.12/dist-packages (from fastai<2.9,>=2.3.1->autogluon.tabular[all]==1.4.0->autogluon) (25.2)

Requirement already satisfied: fastdownload<2,>=0.0.5 in /usr/local/lib/python3.12/dist-packages (from fastai<2.9,>=2.3.1->autogluon.tabular[all]==1.4.0->autogluon) (0.0.7)

Requirement already satisfied: fastcore<1.9,>=1.8.0 in /usr/local/lib/python3.12/dist-packages (from fastai<2.9,>=2.3.1->autogluon.tabular[all]==1.4.0->autogluon) (1.8.8)

Requirement already satisfied: fasttransform>=0.0.2 in /usr/local/lib/python3.12/dist-packages (from fastai<2.9,>=2.3.1->autogluon.tabular[all]==1.4.0->autogluon) (0.0.2)

Requirement already satisfied: fastprogress>=0.2.4 in /usr/local/lib/python3.12/dist-packages (from fastai<2.9,>=2.3.1->autogluon.tabular[all]==1.4.0->autogluon) (1.0.3)

Requirement already satisfied: plum-dispatch in /usr/local/lib/python3.12/dist-packages (from fastai<2.9,>=2.3.1->autogluon.tabular[all]==1.4.0->autogluon) (2.5.7)

Requirement already satisfied: cloudpickle in /usr/local/lib/python3.12/dist-packages (from fastai<2.9,>=2.3.1->autogluon.tabular[all]==1.4.0->autogluon) (3.1.1)

Requirement already satisfied: aiohttp!=4.0.0a0,!=4.0.0a1 in /usr/local/lib/python3.12/dist-packages (from fsspec[http]<=2025.3->autogluon.multimodal==1.4.0->autogluon) (3.12.15)

Requirement already satisfied: pydantic<3,>=1.7 in /usr/local/lib/python3.12/dist-packages (from gluonts<0.17,>=0.15.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (2.11.9)

Requirement already satisfied: toolz~=0.10 in /usr/local/lib/python3.12/dist-packages (from gluonts<0.17,>=0.15.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (0.12.1)

Requirement already satisfied: typing-extensions~=4.0 in /usr/local/lib/python3.12/dist-packages (from gluonts<0.17,>=0.15.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (4.15.0)

Requirement already satisfied: future in /usr/local/lib/python3.12/dist-packages (from hyperopt<0.2.8,>=0.2.7->autogluon.core[all]==1.4.0->autogluon) (1.0.0)

Requirement already satisfied: py4j in /usr/local/lib/python3.12/dist-packages (from hyperopt<0.2.8,>=0.2.7->autogluon.core[all]==1.4.0->autogluon) (0.10.9.7)

Requirement already satisfied: MarkupSafe>=2.0 in /usr/local/lib/python3.12/dist-packages (from jinja2<3.2,>=3.0.3->autogluon.multimodal==1.4.0->autogluon) (3.0.2)

Requirement already satisfied: attrs>=22.2.0 in /usr/local/lib/python3.12/dist-packages (from jsonschema<4.24,>=4.18->autogluon.multimodal==1.4.0->autogluon) (25.3.0)

Requirement already satisfied: jsonschema-specifications>=2023.03.6 in /usr/local/lib/python3.12/dist-packages (from jsonschema<4.24,>=4.18->autogluon.multimodal==1.4.0->autogluon) (2025.9.1)

Requirement already satisfied: referencing>=0.28.4 in /usr/local/lib/python3.12/dist-packages (from jsonschema<4.24,>=4.18->autogluon.multimodal==1.4.0->autogluon) (0.36.2)

Requirement already satisfied: rpds-py>=0.7.1 in /usr/local/lib/python3.12/dist-packages (from jsonschema<4.24,>=4.18->autogluon.multimodal==1.4.0->autogluon) (0.27.1)

Collecting lightning-utilities<2.0,>=0.10.0 (from lightning<2.8,>=2.2->autogluon.multimodal==1.4.0->autogluon)

Downloading lightning_utilities-0.15.2-py3-none-any.whl.metadata (5.7 kB)

Requirement already satisfied: setuptools in /usr/local/lib/python3.12/dist-packages (from lightning-utilities<2.0,>=0.10.0->lightning<2.8,>=2.2->autogluon.multimodal==1.4.0->autogluon) (80.9.0)

Requirement already satisfied: contourpy>=1.0.1 in /usr/local/lib/python3.12/dist-packages (from matplotlib<3.11,>=3.7.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (1.3.3)

Requirement already satisfied: cycler>=0.10 in /usr/local/lib/python3.12/dist-packages (from matplotlib<3.11,>=3.7.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (0.12.1)

Requirement already satisfied: fonttools>=4.22.0 in /usr/local/lib/python3.12/dist-packages (from matplotlib<3.11,>=3.7.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (4.60.0)

Requirement already satisfied: kiwisolver>=1.3.1 in /usr/local/lib/python3.12/dist-packages (from matplotlib<3.11,>=3.7.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (1.4.9)

Requirement already satisfied: pyparsing>=2.3.1 in /usr/local/lib/python3.12/dist-packages (from matplotlib<3.11,>=3.7.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (3.2.4)

Requirement already satisfied: numba in /usr/local/lib/python3.12/dist-packages (from mlforecast<0.15.0,>=0.14.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (0.60.0)

Collecting optuna (from mlforecast<0.15.0,>=0.14.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading optuna-4.5.0-py3-none-any.whl.metadata (17 kB)

Collecting window-ops (from mlforecast<0.15.0,>=0.14.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading window_ops-0.0.15-py3-none-any.whl.metadata (6.8 kB)

Requirement already satisfied: gdown>=4.0.0 in /usr/local/lib/python3.12/dist-packages (from nlpaug<1.2.0,>=1.1.10->autogluon.multimodal==1.4.0->autogluon) (5.2.0)

Requirement already satisfied: click in /usr/local/lib/python3.12/dist-packages (from nltk<3.10,>=3.4.5->autogluon.multimodal==1.4.0->autogluon) (8.2.1)

Requirement already satisfied: regex>=2021.8.3 in /usr/local/lib/python3.12/dist-packages (from nltk<3.10,>=3.4.5->autogluon.multimodal==1.4.0->autogluon) (2024.11.6)

Requirement already satisfied: antlr4-python3-runtime==4.9.* in /usr/local/lib/python3.12/dist-packages (from omegaconf<2.4.0,>=2.1.1->autogluon.multimodal==1.4.0->autogluon) (4.9.3)

Collecting colorama (from openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon)

Downloading https://download.pytorch.org/whl/colorama-0.4.6-py2.py3-none-any.whl (25 kB)

Collecting model-index (from openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon)

Downloading model_index-0.1.11-py3-none-any.whl.metadata (3.9 kB)

Collecting opendatalab (from openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon)

Downloading opendatalab-0.0.10-py3-none-any.whl.metadata (6.4 kB)

Requirement already satisfied: rich in /usr/local/lib/python3.12/dist-packages (from openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon) (13.9.4)

Requirement already satisfied: tabulate in /usr/local/lib/python3.12/dist-packages (from openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon) (0.9.0)

Requirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.12/dist-packages (from pandas<2.4.0,>=2.0.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (2025.2)

Requirement already satisfied: tzdata>=2022.7 in /usr/local/lib/python3.12/dist-packages (from pandas<2.4.0,>=2.0.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (2025.2)

Requirement already satisfied: annotated-types>=0.6.0 in /usr/local/lib/python3.12/dist-packages (from pydantic<3,>=1.7->gluonts<0.17,>=0.15.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (0.7.0)

Requirement already satisfied: pydantic-core==2.33.2 in /usr/local/lib/python3.12/dist-packages (from pydantic<3,>=1.7->gluonts<0.17,>=0.15.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (2.33.2)

Requirement already satisfied: typing-inspection>=0.4.0 in /usr/local/lib/python3.12/dist-packages (from pydantic<3,>=1.7->gluonts<0.17,>=0.15.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (0.4.1)

Requirement already satisfied: filelock in /usr/local/lib/python3.12/dist-packages (from ray<2.45,>=2.10.0->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (3.19.1)

Requirement already satisfied: msgpack<2.0.0,>=1.0.0 in /usr/local/lib/python3.12/dist-packages (from ray<2.45,>=2.10.0->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (1.1.1)

Requirement already satisfied: protobuf!=3.19.5,>=3.15.3 in /usr/local/lib/python3.12/dist-packages (from ray<2.45,>=2.10.0->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (5.29.5)

Requirement already satisfied: aiosignal in /usr/local/lib/python3.12/dist-packages (from ray<2.45,>=2.10.0->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (1.4.0)

Requirement already satisfied: frozenlist in /usr/local/lib/python3.12/dist-packages (from ray<2.45,>=2.10.0->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (1.7.0)

Collecting aiohttp_cors (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading aiohttp_cors-0.8.1-py3-none-any.whl.metadata (20 kB)

Collecting colorful (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading colorful-0.5.7-py2.py3-none-any.whl.metadata (16 kB)

Collecting py-spy>=0.4.0 (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading py_spy-0.4.1-py2.py3-none-manylinux_2_5_x86_64.manylinux1_x86_64.whl.metadata (510 bytes)

Requirement already satisfied: grpcio>=1.42.0 in /usr/local/lib/python3.12/dist-packages (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (1.75.0)

Collecting opencensus (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading opencensus-0.11.4-py2.py3-none-any.whl.metadata (12 kB)

Requirement already satisfied: prometheus_client>=0.7.1 in /usr/local/lib/python3.12/dist-packages (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (0.22.1)

Requirement already satisfied: smart_open in /usr/local/lib/python3.12/dist-packages (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (7.3.1)

Collecting virtualenv!=20.21.1,>=20.0.24 (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading virtualenv-20.34.0-py3-none-any.whl.metadata (4.6 kB)

Collecting tensorboardX>=1.9 (from ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading tensorboardx-2.6.4-py3-none-any.whl.metadata (6.2 kB)

Requirement already satisfied: charset_normalizer<4,>=2 in /usr/local/lib/python3.12/dist-packages (from requests->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (3.4.3)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.12/dist-packages (from requests->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (3.10)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.12/dist-packages (from requests->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (2025.8.3)

Requirement already satisfied: imageio!=2.35.0,>=2.33 in /usr/local/lib/python3.12/dist-packages (from scikit-image<0.26.0,>=0.19.1->autogluon.multimodal==1.4.0->autogluon) (2.37.0)

Requirement already satisfied: tifffile>=2022.8.12 in /usr/local/lib/python3.12/dist-packages (from scikit-image<0.26.0,>=0.19.1->autogluon.multimodal==1.4.0->autogluon) (2025.9.9)

Requirement already satisfied: lazy-loader>=0.4 in /usr/local/lib/python3.12/dist-packages (from scikit-image<0.26.0,>=0.19.1->autogluon.multimodal==1.4.0->autogluon) (0.4)

Requirement already satisfied: threadpoolctl>=3.1.0 in /usr/local/lib/python3.12/dist-packages (from scikit-learn<1.8.0,>=1.4.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon) (3.6.0)

Requirement already satisfied: spacy-legacy<3.1.0,>=3.0.11 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (3.0.12)

Requirement already satisfied: spacy-loggers<2.0.0,>=1.0.0 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (1.0.5)

Requirement already satisfied: murmurhash<1.1.0,>=0.28.0 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (1.0.13)

Requirement already satisfied: cymem<2.1.0,>=2.0.2 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (2.0.11)

Requirement already satisfied: preshed<3.1.0,>=3.0.2 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (3.0.10)

Requirement already satisfied: thinc<8.4.0,>=8.3.4 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (8.3.6)

Requirement already satisfied: wasabi<1.2.0,>=0.9.1 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (1.1.3)

Requirement already satisfied: srsly<3.0.0,>=2.4.3 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (2.5.1)

Requirement already satisfied: catalogue<2.1.0,>=2.0.6 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (2.0.10)

Requirement already satisfied: weasel<0.5.0,>=0.1.0 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (0.4.1)

Requirement already satisfied: typer<1.0.0,>=0.3.0 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (0.17.4)

Requirement already satisfied: langcodes<4.0.0,>=3.2.0 in /usr/local/lib/python3.12/dist-packages (from spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (3.5.0)

Requirement already satisfied: language-data>=1.2 in /usr/local/lib/python3.12/dist-packages (from langcodes<4.0.0,>=3.2.0->spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (1.3.0)

Requirement already satisfied: statsmodels>=0.13.2 in /usr/local/lib/python3.12/dist-packages (from statsforecast<2.0.2,>=1.7.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (0.14.5)

Requirement already satisfied: absl-py>=0.4 in /usr/local/lib/python3.12/dist-packages (from tensorboard<3,>=2.9->autogluon.multimodal==1.4.0->autogluon) (1.4.0)

Requirement already satisfied: markdown>=2.6.8 in /usr/local/lib/python3.12/dist-packages (from tensorboard<3,>=2.9->autogluon.multimodal==1.4.0->autogluon) (3.9)

Requirement already satisfied: tensorboard-data-server<0.8.0,>=0.7.0 in /usr/local/lib/python3.12/dist-packages (from tensorboard<3,>=2.9->autogluon.multimodal==1.4.0->autogluon) (0.7.2)

Requirement already satisfied: werkzeug>=1.0.1 in /usr/local/lib/python3.12/dist-packages (from tensorboard<3,>=2.9->autogluon.multimodal==1.4.0->autogluon) (3.1.3)

Requirement already satisfied: blis<1.4.0,>=1.3.0 in /usr/local/lib/python3.12/dist-packages (from thinc<8.4.0,>=8.3.4->spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (1.3.0)

Requirement already satisfied: confection<1.0.0,>=0.0.1 in /usr/local/lib/python3.12/dist-packages (from thinc<8.4.0,>=8.3.4->spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (0.1.5)

Requirement already satisfied: sympy>=1.13.3 in /usr/local/lib/python3.12/dist-packages (from torch<2.8,>=2.2->autogluon.multimodal==1.4.0->autogluon) (1.13.3)

Collecting tokenizers<0.22,>=0.21 (from transformers<4.50,>=4.38.0->transformers[sentencepiece]<4.50,>=4.38.0->autogluon.multimodal==1.4.0->autogluon)

Downloading tokenizers-0.21.4-cp39-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (6.7 kB)

Requirement already satisfied: hf-xet<2.0.0,>=1.1.3 in /usr/local/lib/python3.12/dist-packages (from huggingface-hub[torch]->autogluon.tabular[all]==1.4.0->autogluon) (1.1.10)

Requirement already satisfied: sentencepiece!=0.1.92,>=0.1.91 in /usr/local/lib/python3.12/dist-packages (from transformers[sentencepiece]<4.50,>=4.38.0->autogluon.multimodal==1.4.0->autogluon) (0.2.1)

Requirement already satisfied: shellingham>=1.3.0 in /usr/local/lib/python3.12/dist-packages (from typer<1.0.0,>=0.3.0->spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (1.5.4)

Requirement already satisfied: cloudpathlib<1.0.0,>=0.7.0 in /usr/local/lib/python3.12/dist-packages (from weasel<0.5.0,>=0.1.0->spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (0.22.0)

Requirement already satisfied: wrapt in /usr/local/lib/python3.12/dist-packages (from smart_open->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (1.17.3)

Requirement already satisfied: nvidia-nccl-cu12 in /usr/local/lib/python3.12/dist-packages (from xgboost<3.1,>=2.0->autogluon.tabular[all]==1.4.0->autogluon) (2.27.3)

Requirement already satisfied: aiohappyeyeballs>=2.5.0 in /usr/local/lib/python3.12/dist-packages (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]<=2025.3->autogluon.multimodal==1.4.0->autogluon) (2.6.1)

Requirement already satisfied: multidict<7.0,>=4.5 in /usr/local/lib/python3.12/dist-packages (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]<=2025.3->autogluon.multimodal==1.4.0->autogluon) (6.6.4)

Requirement already satisfied: propcache>=0.2.0 in /usr/local/lib/python3.12/dist-packages (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]<=2025.3->autogluon.multimodal==1.4.0->autogluon) (0.3.2)

Requirement already satisfied: yarl<2.0,>=1.17.0 in /usr/local/lib/python3.12/dist-packages (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]<=2025.3->autogluon.multimodal==1.4.0->autogluon) (1.20.1)

Collecting triad>=0.9.7 (from fugue>=0.9.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading triad-0.9.8-py3-none-any.whl.metadata (6.3 kB)

Collecting adagio>=0.2.4 (from fugue>=0.9.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading adagio-0.2.6-py3-none-any.whl.metadata (1.8 kB)

Requirement already satisfied: beautifulsoup4 in /usr/local/lib/python3.12/dist-packages (from gdown>=4.0.0->nlpaug<1.2.0,>=1.1.10->autogluon.multimodal==1.4.0->autogluon) (4.13.5)

Requirement already satisfied: marisa-trie>=1.1.0 in /usr/local/lib/python3.12/dist-packages (from language-data>=1.2->langcodes<4.0.0,>=3.2.0->spacy<3.9->autogluon.tabular[all]==1.4.0->autogluon) (1.3.1)

Requirement already satisfied: llvmlite<0.44,>=0.43.0dev0 in /usr/local/lib/python3.12/dist-packages (from numba->mlforecast<0.15.0,>=0.14.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (0.43.0)

Requirement already satisfied: markdown-it-py>=2.2.0 in /usr/local/lib/python3.12/dist-packages (from rich->openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon) (4.0.0)

Requirement already satisfied: pygments<3.0.0,>=2.13.0 in /usr/local/lib/python3.12/dist-packages (from rich->openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon) (2.19.2)

Requirement already satisfied: mdurl~=0.1 in /usr/local/lib/python3.12/dist-packages (from markdown-it-py>=2.2.0->rich->openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon) (0.1.2)

Requirement already satisfied: patsy>=0.5.6 in /usr/local/lib/python3.12/dist-packages (from statsmodels>=0.13.2->statsforecast<2.0.2,>=1.7.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (1.0.1)

Requirement already satisfied: mpmath<1.4,>=1.1.0 in /usr/local/lib/python3.12/dist-packages (from sympy>=1.13.3->torch<2.8,>=2.2->autogluon.multimodal==1.4.0->autogluon) (1.3.0)

Collecting fs (from triad>=0.9.7->fugue>=0.9.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading fs-2.4.16-py2.py3-none-any.whl.metadata (6.3 kB)

Collecting distlib<1,>=0.3.7 (from virtualenv!=20.21.1,>=20.0.24->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading distlib-0.4.0-py2.py3-none-any.whl.metadata (5.2 kB)

Requirement already satisfied: platformdirs<5,>=3.9.1 in /usr/local/lib/python3.12/dist-packages (from virtualenv!=20.21.1,>=20.0.24->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (4.4.0)

Requirement already satisfied: soupsieve>1.2 in /usr/local/lib/python3.12/dist-packages (from beautifulsoup4->gdown>=4.0.0->nlpaug<1.2.0,>=1.1.10->autogluon.multimodal==1.4.0->autogluon) (2.8)

Requirement already satisfied: frozendict in /usr/local/lib/python3.12/dist-packages (from einx->autogluon.tabular[all]==1.4.0->autogluon) (2.4.6)

Collecting appdirs~=1.4.3 (from fs->triad>=0.9.7->fugue>=0.9.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading appdirs-1.4.4-py2.py3-none-any.whl.metadata (9.0 kB)

Collecting ordered-set (from model-index->openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon)

Downloading ordered_set-4.1.0-py3-none-any.whl.metadata (5.3 kB)

Collecting opencensus-context>=0.1.3 (from opencensus->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading opencensus_context-0.1.3-py2.py3-none-any.whl.metadata (3.3 kB)

Requirement already satisfied: google-api-core<3.0.0,>=1.0.0 in /usr/local/lib/python3.12/dist-packages (from opencensus->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (2.25.1)

Requirement already satisfied: googleapis-common-protos<2.0.0,>=1.56.2 in /usr/local/lib/python3.12/dist-packages (from google-api-core<3.0.0,>=1.0.0->opencensus->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (1.70.0)

Requirement already satisfied: proto-plus<2.0.0,>=1.22.3 in /usr/local/lib/python3.12/dist-packages (from google-api-core<3.0.0,>=1.0.0->opencensus->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (1.26.1)

Requirement already satisfied: google-auth<3.0.0,>=2.14.1 in /usr/local/lib/python3.12/dist-packages (from google-api-core<3.0.0,>=1.0.0->opencensus->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (2.38.0)

Requirement already satisfied: cachetools<6.0,>=2.0.0 in /usr/local/lib/python3.12/dist-packages (from google-auth<3.0.0,>=2.14.1->google-api-core<3.0.0,>=1.0.0->opencensus->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (5.5.2)

Requirement already satisfied: pyasn1-modules>=0.2.1 in /usr/local/lib/python3.12/dist-packages (from google-auth<3.0.0,>=2.14.1->google-api-core<3.0.0,>=1.0.0->opencensus->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (0.4.2)

Requirement already satisfied: rsa<5,>=3.1.4 in /usr/local/lib/python3.12/dist-packages (from google-auth<3.0.0,>=2.14.1->google-api-core<3.0.0,>=1.0.0->opencensus->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (4.9.1)

Requirement already satisfied: pyasn1>=0.1.3 in /usr/local/lib/python3.12/dist-packages (from rsa<5,>=3.1.4->google-auth<3.0.0,>=2.14.1->google-api-core<3.0.0,>=1.0.0->opencensus->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon) (0.6.1)

Collecting pycryptodome (from opendatalab->openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon)

Downloading pycryptodome-3.23.0-cp37-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (3.4 kB)

Collecting openxlab (from opendatalab->openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon)

Downloading openxlab-0.1.2-py3-none-any.whl.metadata (3.8 kB)

Collecting filelock (from ray<2.45,>=2.10.0->ray[default,tune]<2.45,>=2.10.0; extra == "all"->autogluon.core[all]==1.4.0->autogluon)

Downloading filelock-3.14.0-py3-none-any.whl.metadata (2.8 kB)

Collecting oss2~=2.17.0 (from openxlab->opendatalab->openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon)

Downloading oss2-2.17.0.tar.gz (259 kB)

Preparing metadata (setup.py) ... done

Collecting packaging>=20.0 (from accelerate<2.0,>=0.34.0->autogluon.multimodal==1.4.0->autogluon)

Downloading packaging-24.2-py3-none-any.whl.metadata (3.2 kB)

Collecting pytz>=2020.1 (from pandas<2.4.0,>=2.0.0->autogluon.core==1.4.0->autogluon.core[all]==1.4.0->autogluon)

Downloading pytz-2023.4-py2.py3-none-any.whl.metadata (22 kB)

INFO: pip is looking at multiple versions of openxlab to determine which version is compatible with other requirements. This could take a while.

Collecting openxlab (from opendatalab->openmim<0.4.0,>=0.3.7->autogluon.multimodal==1.4.0->autogluon)

Downloading openxlab-0.1.1-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.1.0-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.38-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.37-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.36-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.35-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.34-py3-none-any.whl.metadata (3.8 kB)

INFO: pip is still looking at multiple versions of openxlab to determine which version is compatible with other requirements. This could take a while.

Downloading openxlab-0.0.33-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.32-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.31-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.30-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.29-py3-none-any.whl.metadata (3.8 kB)

INFO: This is taking longer than usual. You might need to provide the dependency resolver with stricter constraints to reduce runtime. See https://pip.pypa.io/warnings/backtracking for guidance. If you want to abort this run, press Ctrl + C.

Downloading openxlab-0.0.28-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.27-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.26-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.25-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.24-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.23-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.22-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.21-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.20-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.19-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.18-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.17-py3-none-any.whl.metadata (3.7 kB)

Downloading openxlab-0.0.16-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.15-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.14-py3-none-any.whl.metadata (3.8 kB)

Downloading openxlab-0.0.13-py3-none-any.whl.metadata (4.5 kB)

Downloading openxlab-0.0.12-py3-none-any.whl.metadata (4.5 kB)

Downloading openxlab-0.0.11-py3-none-any.whl.metadata (4.3 kB)

Requirement already satisfied: alembic>=1.5.0 in /usr/local/lib/python3.12/dist-packages (from optuna->mlforecast<0.15.0,>=0.14.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (1.16.5)

Collecting colorlog (from optuna->mlforecast<0.15.0,>=0.14.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon)

Downloading colorlog-6.9.0-py3-none-any.whl.metadata (10 kB)

Requirement already satisfied: sqlalchemy>=1.4.2 in /usr/local/lib/python3.12/dist-packages (from optuna->mlforecast<0.15.0,>=0.14.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (2.0.43)

Requirement already satisfied: Mako in /usr/local/lib/python3.12/dist-packages (from alembic>=1.5.0->optuna->mlforecast<0.15.0,>=0.14.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (1.3.10)

Requirement already satisfied: greenlet>=1 in /usr/local/lib/python3.12/dist-packages (from sqlalchemy>=1.4.2->optuna->mlforecast<0.15.0,>=0.14.0->autogluon.timeseries==1.4.0->autogluon.timeseries[all]==1.4.0->autogluon) (3.2.4)

Requirement already satisfied: tenacity>=6.2.0 in /usr/local/lib/python3.12/dist-packages (from plotly->catboost<1.3,>=1.2->autogluon.tabular[all]==1.4.0->autogluon) (8.5.0)

Requirement already satisfied: beartype>=0.16.2 in /usr/local/lib/python3.12/dist-packages (from plum-dispatch->fastai<2.9,>=2.3.1->autogluon.tabular[all]==1.4.0->autogluon) (0.21.0)

Requirement already satisfied: PySocks!=1.5.7,>=1.5.6 in /usr/local/lib/python3.12/dist-packages (from requests[socks]->gdown>=4.0.0->nlpaug<1.2.0,>=1.1.10->autogluon.multimodal==1.4.0->autogluon) (1.7.1)

Downloading autogluon-1.4.0-py3-none-any.whl (9.8 kB)

Downloading autogluon.core-1.4.0-py3-none-any.whl (225 kB)

Downloading autogluon.common-1.4.0-py3-none-any.whl (70 kB)

Downloading autogluon.features-1.4.0-py3-none-any.whl (64 kB)

Downloading autogluon.multimodal-1.4.0-py3-none-any.whl (454 kB)

Downloading autogluon.tabular-1.4.0-py3-none-any.whl (487 kB)

Downloading autogluon.timeseries-1.4.0-py3-none-any.whl (189 kB)

Downloading boto3-1.40.44-py3-none-any.whl (139 kB)

Downloading botocore-1.40.44-py3-none-any.whl (14.1 MB)

Downloading catboost-1.2.8-cp312-cp312-manylinux2014_x86_64.whl (99.2 MB)

Downloading coreforecast-0.0.16-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (287 kB)

Downloading evaluate-0.4.6-py3-none-any.whl (84 kB)

Downloading gluonts-0.16.2-py3-none-any.whl (1.5 MB)

Downloading jmespath-1.0.1-py3-none-any.whl (20 kB)

Downloading jsonschema-4.23.0-py3-none-any.whl (88 kB)

Downloading lightning-2.5.5-py3-none-any.whl (828 kB)

Downloading lightning_utilities-0.15.2-py3-none-any.whl (29 kB)

Downloading mlforecast-0.14.0-py3-none-any.whl (71 kB)

Downloading nlpaug-1.1.11-py3-none-any.whl (410 kB)

Downloading openmim-0.3.9-py2.py3-none-any.whl (52 kB)

Downloading pdf2image-1.17.0-py3-none-any.whl (11 kB)

Downloading pytesseract-0.3.13-py3-none-any.whl (14 kB)

Downloading pytorch_metric_learning-2.8.1-py3-none-any.whl (125 kB)

Downloading ray-2.44.1-cp312-cp312-manylinux2014_x86_64.whl (68.1 MB)

Downloading s3transfer-0.14.0-py3-none-any.whl (85 kB)

Downloading statsforecast-2.0.1-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (353 kB)

Downloading timm-1.0.3-py3-none-any.whl (2.3 MB)

Downloading https://download.pytorch.org/whl/cpu/torch-2.7.1%2Bcpu-cp312-cp312-manylinux_2_28_x86_64.whl (175.8 MB)

Downloading torchmetrics-1.7.4-py3-none-any.whl (963 kB)

Downloading https://download.pytorch.org/whl/cpu/torchvision-0.22.1%2Bcpu-cp312-cp312-manylinux_2_28_x86_64.whl (2.0 MB)

Downloading transformers-4.49.0-py3-none-any.whl (10.0 MB)

Downloading tokenizers-0.21.4-cp39-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (3.1 MB)

Downloading utilsforecast-0.2.11-py3-none-any.whl (41 kB)

Downloading fugue-0.9.1-py3-none-any.whl (278 kB)

Downloading adagio-0.2.6-py3-none-any.whl (19 kB)

Downloading py_spy-0.4.1-py2.py3-none-manylinux_2_5_x86_64.manylinux1_x86_64.whl (2.8 MB)

Downloading tensorboardx-2.6.4-py3-none-any.whl (87 kB)

Downloading triad-0.9.8-py3-none-any.whl (62 kB)

Downloading virtualenv-20.34.0-py3-none-any.whl (6.0 MB)

Downloading distlib-0.4.0-py2.py3-none-any.whl (469 kB)

Downloading aiohttp_cors-0.8.1-py3-none-any.whl (25 kB)

Downloading colorful-0.5.7-py2.py3-none-any.whl (201 kB)

Downloading einx-0.3.0-py3-none-any.whl (102 kB)

Downloading fs-2.4.16-py2.py3-none-any.whl (135 kB)

Downloading appdirs-1.4.4-py2.py3-none-any.whl (9.6 kB)

Downloading loguru-0.7.3-py3-none-any.whl (61 kB)

Downloading model_index-0.1.11-py3-none-any.whl (34 kB)

Downloading opencensus-0.11.4-py2.py3-none-any.whl (128 kB)

Downloading opencensus_context-0.1.3-py2.py3-none-any.whl (5.1 kB)

Downloading opendatalab-0.0.10-py3-none-any.whl (29 kB)

Downloading openxlab-0.0.11-py3-none-any.whl (55 kB)

Downloading optuna-4.5.0-py3-none-any.whl (400 kB)

Downloading colorlog-6.9.0-py3-none-any.whl (11 kB)

Downloading ordered_set-4.1.0-py3-none-any.whl (7.6 kB)

Downloading pycryptodome-3.23.0-cp37-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (2.3 MB)

Downloading pytorch_lightning-2.5.5-py3-none-any.whl (832 kB)

Downloading window_ops-0.0.15-py3-none-any.whl (15 kB)

Building wheels for collected packages: nvidia-ml-py3, seqeval

DEPRECATION: Building 'nvidia-ml-py3' using the legacy setup.py bdist_wheel mechanism, which will be removed in a future version. pip 25.3 will enforce this behaviour change. A possible replacement is to use the standardized build interface by setting the `--use-pep517` option, (possibly combined with `--no-build-isolation`), or adding a `pyproject.toml` file to the source tree of 'nvidia-ml-py3'. Discussion can be found at https://github.com/pypa/pip/issues/6334

Building wheel for nvidia-ml-py3 (setup.py) ... done

Created wheel for nvidia-ml-py3: filename=nvidia_ml_py3-7.352.0-py3-none-any.whl size=19208 sha256=de5755c4b468469bed58dc95b15dc68aea5ad617a735248820dbb68909f12c9d

Stored in directory: /root/.cache/pip/wheels/6e/65/79/33dee66cba26e8204801916dfee7481bccfd22905ebb841fe5

DEPRECATION: Building 'seqeval' using the legacy setup.py bdist_wheel mechanism, which will be removed in a future version. pip 25.3 will enforce this behaviour change. A possible replacement is to use the standardized build interface by setting the `--use-pep517` option, (possibly combined with `--no-build-isolation`), or adding a `pyproject.toml` file to the source tree of 'seqeval'. Discussion can be found at https://github.com/pypa/pip/issues/6334

Building wheel for seqeval (setup.py) ... done

Created wheel for seqeval: filename=seqeval-1.2.2-py3-none-any.whl size=16250 sha256=be6bc73d1e1ae00cd3c1f9e187bde8f5b384d0242d13de91ace16b2d35e3a78d

Stored in directory: /root/.cache/pip/wheels/5f/b8/73/0b2c1a76b701a677653dd79ece07cfabd7457989dbfbdcd8d7

Successfully built nvidia-ml-py3 seqeval

Installing collected packages: py-spy, opencensus-context, nvidia-ml-py3, distlib, colorful, appdirs, virtualenv, tensorboardX, pytesseract, pycryptodome, pdf2image, ordered-set, openxlab, loguru, lightning-utilities, jmespath, fs, coreforecast, colorlog, colorama, window-ops, torch, model-index, einx, botocore, utilsforecast, triad, torchvision, torchmetrics, tokenizers, seqeval, s3transfer, pytorch-metric-learning, optuna, opendatalab, jsonschema, gluonts, catboost, aiohttp_cors, transformers, timm, ray, pytorch-lightning, openmim, opencensus, nlpaug, mlforecast, boto3, adagio, lightning, fugue, evaluate, autogluon.common, statsforecast, autogluon.features, autogluon.core, autogluon.tabular, autogluon.multimodal, autogluon.timeseries, autogluon

Attempting uninstall: torch

Found existing installation: torch 2.8.0+cu126

Uninstalling torch-2.8.0+cu126:

Successfully uninstalled torch-2.8.0+cu126

Attempting uninstall: torchvision

Found existing installation: torchvision 0.23.0+cu126

Uninstalling torchvision-0.23.0+cu126:

Successfully uninstalled torchvision-0.23.0+cu126

Attempting uninstall: tokenizers

Found existing installation: tokenizers 0.22.0

Uninstalling tokenizers-0.22.0:

Successfully uninstalled tokenizers-0.22.0

Attempting uninstall: jsonschema

Found existing installation: jsonschema 4.25.1

Uninstalling jsonschema-4.25.1:

Successfully uninstalled jsonschema-4.25.1

Attempting uninstall: transformers

Found existing installation: transformers 4.56.1

Uninstalling transformers-4.56.1:

Successfully uninstalled transformers-4.56.1

Attempting uninstall: timm

Found existing installation: timm 1.0.19

Uninstalling timm-1.0.19:

Successfully uninstalled timm-1.0.19

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

torchaudio 2.8.0+cu126 requires torch==2.8.0, but you have torch 2.7.1+cpu which is incompatible.

Successfully installed adagio-0.2.6 aiohttp_cors-0.8.1 appdirs-1.4.4 autogluon-1.4.0 autogluon.common-1.4.0 autogluon.core-1.4.0 autogluon.features-1.4.0 autogluon.multimodal-1.4.0 autogluon.tabular-1.4.0 autogluon.timeseries-1.4.0 boto3-1.40.44 botocore-1.40.44 catboost-1.2.8 colorama-0.4.6 colorful-0.5.7 colorlog-6.9.0 coreforecast-0.0.16 distlib-0.4.0 einx-0.3.0 evaluate-0.4.6 fs-2.4.16 fugue-0.9.1 gluonts-0.16.2 jmespath-1.0.1 jsonschema-4.23.0 lightning-2.5.5 lightning-utilities-0.15.2 loguru-0.7.3 mlforecast-0.14.0 model-index-0.1.11 nlpaug-1.1.11 nvidia-ml-py3-7.352.0 opencensus-0.11.4 opencensus-context-0.1.3 opendatalab-0.0.10 openmim-0.3.9 openxlab-0.0.11 optuna-4.5.0 ordered-set-4.1.0 pdf2image-1.17.0 py-spy-0.4.1 pycryptodome-3.23.0 pytesseract-0.3.13 pytorch-lightning-2.5.5 pytorch-metric-learning-2.8.1 ray-2.44.1 s3transfer-0.14.0 seqeval-1.2.2 statsforecast-2.0.1 tensorboardX-2.6.4 timm-1.0.3 tokenizers-0.21.4 torch-2.7.1+cpu torchmetrics-1.7.4 torchvision-0.22.1+cpu transformers-4.49.0 triad-0.9.8 utilsforecast-0.2.11 virtualenv-20.34.0 window-ops-0.0.15

CPU times: user 5.39 s, sys: 1.27 s, total: 6.67 s

Wall time: 2min 24s

from autogluon.tabular import TabularPredictor

import pandas as pd

from sklearn.model_selection import train_test_split

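# Read data. The separator ".@" is a regular expression (any one character followed by "@"),

# which is why the Python parsing engine is requested below.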

df = pd.read_csv('NLP_data/Sentences_AllAgree.txt', sep=".@", header=None, engine='python', encoding = "ISO-8859-1") # Finbert data

# df = pd.read_csv('NLP_data/Sentences_AllAgree.txt', sep=".@", header=None, engine='python', encoding = "utf-8") # Finbert data

# tmp = pd.read_csv('NLP_data/Sentences_75Agree.txt', sep=".@", header=None, engine='python')

# df = pd.concat([df,tmp])

# tmp = pd.read_csv('NLP_data/Sentences_66Agree.txt', sep=".@", header=None, engine='python')

# df = pd.concat([df,tmp])

# tmp = pd.read_csv('NLP_data/Sentences_50Agree.txt', sep=".@", header=None, engine='python')

# df = pd.concat([df,tmp])

df.columns = ["Text","Label"]

print(df.shape)

df.head()

(2264, 2)

| | Text | Label |

|---|---|---|

| 0 | According to Gran , the company has no plans t... | neutral |

| 1 | For the last quarter of 2010 , Componenta 's n... | positive |

| 2 | In the third quarter of 2010 , net sales incre... | positive |

| 3 | Operating profit rose to EUR 13.1 mn from EUR ... | positive |

| 4 | Operating profit totalled EUR 21.1 mn , up fro... | positive |

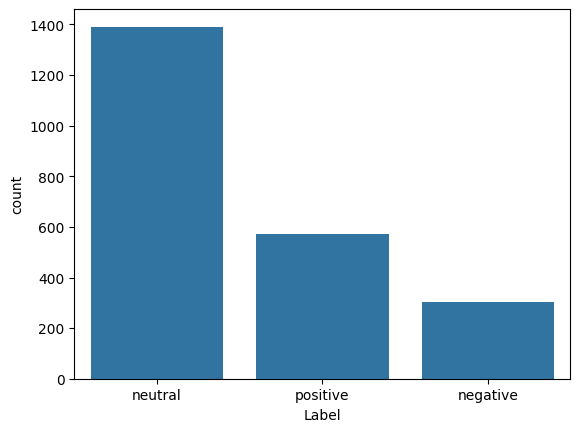

import seaborn as sns

import matplotlib.pyplot as plt

sns.countplot(x='Label', data=df)

plt.show()

27.2. Fit the model

The next few lines of code are all that is needed to train the model. The call is remarkable in its parsimony!

During preprocessing, AutoGluon vectorizes the text and adjusts the size of the vocabulary so that it uses the available memory efficiently.
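To make the vectorization step concrete, here is a minimal sketch of n-gram counting with a capped vocabulary, written with the standard library only. The training log for this run reports that AutoGluon fits a CountVectorizer inside its TextNgramFeatureGenerator; the code below is not that implementation. The function names, the `max_features` cap, and the toy sentences are all hypothetical and serve only to illustrate the idea.

```python
from collections import Counter

def ngrams(tokens, n_max=2):
    """Yield all word n-grams of length 1..n_max as space-joined strings."""
    for n in range(1, n_max + 1):
        for i in range(len(tokens) - n + 1):
            yield " ".join(tokens[i:i + n])

def fit_vocabulary(texts, max_features=5, n_max=2):
    """Keep only the max_features most frequent n-grams across the corpus."""
    counts = Counter()
    for text in texts:
        counts.update(ngrams(text.lower().split(), n_max))
    # Sort by descending count, then alphabetically, so ties break deterministically.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, _ in ranked[:max_features]]

def transform(texts, vocabulary, n_max=2):
    """Turn each text into a row of counts, one column per vocabulary term."""
    rows = []
    for text in texts:
        counts = Counter(ngrams(text.lower().split(), n_max))
        rows.append([counts[term] for term in vocabulary])
    return rows

# Hypothetical toy sentences, not taken from the dataset.
toy_texts = [
    "operating profit rose",
    "operating profit fell",
    "net sales rose",
]
vocab = fit_vocabulary(toy_texts, max_features=4)
features = transform(toy_texts, vocab)
print(vocab)
print(features)
```

A smaller cap gives fewer feature columns and therefore a smaller feature matrix, which is the memory trade-off mentioned above.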

!pip install "dask[dataframe]" --quiet

%%time

#TRAIN THE MODEL

train_data, test_data = train_test_split(df, test_size=0.3, random_state=42)

print("Train size =",train_data.shape," | Test size =",test_data.shape)

predictor = TabularPredictor(label='Label').fit(train_data=train_data) #, hyperparameters='multimodal')

# predictor = task.fit(train_data=train_data, label='Label')

performance = predictor.evaluate(train_data)  # in-sample performance, measured on the training data

No path specified. Models will be saved in: "AutogluonModels/ag-20251002_222432"

Verbosity: 2 (Standard Logging)

=================== System Info ===================

AutoGluon Version: 1.4.0

Python Version: 3.12.11

Operating System: Linux

Platform Machine: x86_64

Platform Version: #1 SMP PREEMPT_DYNAMIC Sat Sep 6 09:54:41 UTC 2025

CPU Count: 2

Memory Avail: 11.37 GB / 12.67 GB (89.7%)

Disk Space Avail: 67.81 GB / 112.64 GB (60.2%)

===================================================

No presets specified! To achieve strong results with AutoGluon, it is recommended to use the available presets. Defaulting to `'medium'`...

Recommended Presets (For more details refer to https://auto.gluon.ai/stable/tutorials/tabular/tabular-essentials.html#presets):

presets='extreme' : New in v1.4: Massively better than 'best' on datasets <30000 samples by using new models meta-learned on https://tabarena.ai: TabPFNv2, TabICL, Mitra, and TabM. Absolute best accuracy. Requires a GPU. Recommended 64 GB CPU memory and 32+ GB GPU memory.

presets='best' : Maximize accuracy. Recommended for most users. Use in competitions and benchmarks.

presets='high' : Strong accuracy with fast inference speed.

presets='good' : Good accuracy with very fast inference speed.

presets='medium' : Fast training time, ideal for initial prototyping.

Using hyperparameters preset: hyperparameters='default'

Train size = (1584, 2) | Test size = (680, 2)

Beginning AutoGluon training ...

AutoGluon will save models to "/content/drive/MyDrive/Books_Writings/NLPBook/AutogluonModels/ag-20251002_222432"

Train Data Rows: 1584

Train Data Columns: 1

Label Column: Label

AutoGluon infers your prediction problem is: 'multiclass' (because dtype of label-column == object).

3 unique label values: ['neutral', 'positive', 'negative']

If 'multiclass' is not the correct problem_type, please manually specify the problem_type parameter during Predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression', 'quantile'])

Problem Type: multiclass

Preprocessing data ...

Train Data Class Count: 3

Using Feature Generators to preprocess the data ...

Fitting AutoMLPipelineFeatureGenerator...

Available Memory: 11611.41 MB

Train Data (Original) Memory Usage: 0.26 MB (0.0% of available memory)

Inferring data type of each feature based on column values. Set feature_metadata_in to manually specify special dtypes of the features.

Stage 1 Generators:

Fitting AsTypeFeatureGenerator...

Stage 2 Generators:

Fitting FillNaFeatureGenerator...

Stage 3 Generators:

Fitting CategoryFeatureGenerator...

Fitting CategoryMemoryMinimizeFeatureGenerator...

Fitting TextSpecialFeatureGenerator...

Fitting BinnedFeatureGenerator...

Fitting DropDuplicatesFeatureGenerator...

Fitting TextNgramFeatureGenerator...

Fitting CountVectorizer for text features: ['Text']

CountVectorizer fit with vocabulary size = 186

Stage 4 Generators:

Fitting DropUniqueFeatureGenerator...

Stage 5 Generators:

Fitting DropDuplicatesFeatureGenerator...

Types of features in original data (raw dtype, special dtypes):

('object', ['text']) : 1 | ['Text']

Types of features in processed data (raw dtype, special dtypes):

('category', ['text_as_category']) : 1 | ['Text']

('int', ['binned', 'text_special']) : 20 | ['Text.char_count', 'Text.word_count', 'Text.capital_ratio', 'Text.lower_ratio', 'Text.digit_ratio', ...]

('int', ['text_ngram']) : 180 | ['__nlp__.000', '__nlp__.10', '__nlp__.11', '__nlp__.12', '__nlp__.20', ...]

6.2s = Fit runtime

1 features in original data used to generate 201 features in processed data.

Train Data (Processed) Memory Usage: 0.58 MB (0.0% of available memory)

Data preprocessing and feature engineering runtime = 6.24s ...

AutoGluon will gauge predictive performance using evaluation metric: 'accuracy'

To change this, specify the eval_metric parameter of Predictor()

Automatically generating train/validation split with holdout_frac=0.2, Train Rows: 1267, Val Rows: 317

User-specified model hyperparameters to be fit:

{

'NN_TORCH': [{}],

'GBM': [{'extra_trees': True, 'ag_args': {'name_suffix': 'XT'}}, {}, {'learning_rate': 0.03, 'num_leaves': 128, 'feature_fraction': 0.9, 'min_data_in_leaf': 3, 'ag_args': {'name_suffix': 'Large', 'priority': 0, 'hyperparameter_tune_kwargs': None}}],

'CAT': [{}],

'XGB': [{}],

'FASTAI': [{}],

'RF': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'XT': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

}

Fitting 11 L1 models, fit_strategy="sequential" ...

Fitting model: NeuralNetFastAI ...

Fitting with cpus=1, gpus=0, mem=0.0/11.2 GB

0.7445 = Validation score (accuracy)

11.55s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBMXT ...

Fitting with cpus=1, gpus=0, mem=0.0/10.8 GB

0.8801 = Validation score (accuracy)

11.55s = Training runtime

0.02s = Validation runtime

Fitting model: LightGBM ...

Fitting with cpus=1, gpus=0, mem=0.0/10.5 GB

0.8549 = Validation score (accuracy)

1.76s = Training runtime

0.01s = Validation runtime

Fitting model: RandomForestGini ...

Fitting with cpus=2, gpus=0

0.8612 = Validation score (accuracy)

2.47s = Training runtime

0.1s = Validation runtime

Fitting model: RandomForestEntr ...

Fitting with cpus=2, gpus=0

0.8549 = Validation score (accuracy)

1.18s = Training runtime

0.1s = Validation runtime

Fitting model: CatBoost ...

Fitting with cpus=1, gpus=0

0.858 = Validation score (accuracy)

5.72s = Training runtime

0.01s = Validation runtime

Fitting model: ExtraTreesGini ...

Fitting with cpus=2, gpus=0

0.8549 = Validation score (accuracy)

1.1s = Training runtime

0.1s = Validation runtime

Fitting model: ExtraTreesEntr ...

Fitting with cpus=2, gpus=0

0.8612 = Validation score (accuracy)

1.27s = Training runtime

0.1s = Validation runtime

Fitting model: XGBoost ...

Fitting with cpus=1, gpus=0

0.858 = Validation score (accuracy)

2.68s = Training runtime

0.02s = Validation runtime

Fitting model: NeuralNetTorch ...

Fitting with cpus=1, gpus=0, mem=0.0/10.3 GB

0.7413 = Validation score (accuracy)

9.39s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBMLarge ...

Fitting with cpus=1, gpus=0, mem=0.2/10.3 GB

0.8644 = Validation score (accuracy)

2.31s = Training runtime

0.01s = Validation runtime

Fitting model: WeightedEnsemble_L2 ...

Ensemble Weights: {'LightGBMXT': 1.0}

0.8801 = Validation score (accuracy)

0.1s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 59.05s ... Best model: WeightedEnsemble_L2 | Estimated inference throughput: 15697.3 rows/s (317 batch size)

TabularPredictor saved. To load, use: predictor = TabularPredictor.load("/content/drive/MyDrive/Books_Writings/NLPBook/AutogluonModels/ag-20251002_222432")

CPU times: user 45.7 s, sys: 2.2 s, total: 47.9 s

Wall time: 1min 1s

# TEST OUT-OF-SAMPLE

y_test = test_data['Label']

test_data_nolabel = test_data.drop(labels=['Label'],axis=1)

y_pred = predictor.predict(test_data_nolabel)

y_prob = predictor.predict_proba(test_data_nolabel)  # class probabilities, one column per label

perf = predictor.evaluate_predictions(y_true=y_test, y_pred=y_pred, auxiliary_metrics=True)

print(perf)

{'accuracy': 0.8720588235294118, 'balanced_accuracy': np.float64(0.7908290922121237), 'mcc': np.float64(0.7572923133729487)}
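The three numbers reported above can be read more easily with their definitions in hand. The sketch below computes accuracy, balanced accuracy (the unweighted mean of per-class recall), and the multiclass Matthews correlation coefficient from scratch, assuming the standard definitions of these metrics. The labels in the example are made up for illustration and are not the predictions from this notebook.

```python
from collections import Counter
from math import sqrt

def accuracy(y_true, y_pred):
    """Fraction of examples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def balanced_accuracy(y_true, y_pred):
    """Unweighted mean of per-class recall, so every class counts equally."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return sum(hits[c] / support[c] for c in support) / len(support)

def mcc(y_true, y_pred):
    """Multiclass Matthews correlation coefficient (standard formula)."""
    n = len(y_true)
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    labels = set(true_counts) | set(pred_counts)
    cov_tp = correct * n - sum(true_counts[k] * pred_counts[k] for k in labels)
    cov_tt = n * n - sum(v * v for v in true_counts.values())
    cov_pp = n * n - sum(v * v for v in pred_counts.values())
    denom = sqrt(cov_tt * cov_pp)
    return cov_tp / denom if denom else 0.0

# Hypothetical imbalanced example: the majority class is always right,
# the minority classes are partly or wholly missed.
toy_true = ["neutral"] * 6 + ["positive"] * 3 + ["negative"]
toy_pred = ["neutral"] * 6 + ["positive", "positive", "neutral"] + ["neutral"]

toy_acc = accuracy(toy_true, toy_pred)
toy_bal = balanced_accuracy(toy_true, toy_pred)
toy_mcc = mcc(toy_true, toy_pred)
print(toy_acc, toy_bal, toy_mcc)
```

In this toy case balanced accuracy falls well below plain accuracy because the errors sit in the smaller classes. The same pattern, accuracy above balanced accuracy, appears in the test results printed above, which would be consistent with the less frequent labels being harder to predict.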

predictor.leaderboard(test_data, silent=True)

| | model | score_test | score_val | eval_metric | pred_time_test | pred_time_val | fit_time | pred_time_test_marginal | pred_time_val_marginal | fit_time_marginal | stack_level | can_infer | fit_order |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | LightGBMXT | 0.872059 | 0.880126 | accuracy | 0.036902 | 0.019261 | 11.553508 | 0.036902 | 0.019261 | 11.553508 | 1 | True | 2 |

| 1 | WeightedEnsemble_L2 | 0.872059 | 0.880126 | accuracy | 0.042148 | 0.020195 | 11.650759 | 0.005246 | 0.000933 | 0.097251 | 2 | True | 12 |

| 2 | LightGBM | 0.867647 | 0.854890 | accuracy | 0.021719 | 0.011121 | 1.761025 | 0.021719 | 0.011121 | 1.761025 | 1 | True | 3 |

| 3 | XGBoost | 0.854412 | 0.858044 | accuracy | 0.077250 | 0.022649 | 2.678355 | 0.077250 | 0.022649 | 2.678355 | 1 | True | 9 |

| 4 | CatBoost | 0.841176 | 0.858044 | accuracy | 0.017586 | 0.010405 | 5.719173 | 0.017586 | 0.010405 | 5.719173 | 1 | True | 6 |

| 5 | ExtraTreesEntr | 0.839706 | 0.861199 | accuracy | 0.146512 | 0.097935 | 1.272938 | 0.146512 | 0.097935 | 1.272938 | 1 | True | 8 |

| 6 | RandomForestGini | 0.838235 | 0.861199 | accuracy | 0.146591 | 0.098120 | 2.466754 | 0.146591 | 0.098120 | 2.466754 | 1 | True | 4 |

| 7 | ExtraTreesGini | 0.833824 | 0.854890 | accuracy | 0.145274 | 0.099282 | 1.095345 | 0.145274 | 0.099282 | 1.095345 | 1 | True | 7 |

| 8 | LightGBMLarge | 0.830882 | 0.864353 | accuracy | 0.027166 | 0.010562 | 2.308693 | 0.027166 | 0.010562 | 2.308693 | 1 | True | 11 |

| 9 | RandomForestEntr | 0.827941 | 0.854890 | accuracy | 0.149742 | 0.097670 | 1.181473 | 0.149742 | 0.097670 | 1.181473 | 1 | True | 5 |

| 10 | NeuralNetTorch | 0.683824 | 0.741325 | accuracy | 0.023454 | 0.014523 | 9.388918 | 0.023454 | 0.014523 | 9.388918 | 1 | True | 10 |

| 11 | NeuralNetFastAI | 0.675000 | 0.744479 | accuracy | 0.025833 | 0.014433 | 11.552325 | 0.025833 | 0.014433 | 11.552325 | 1 | True | 1 |

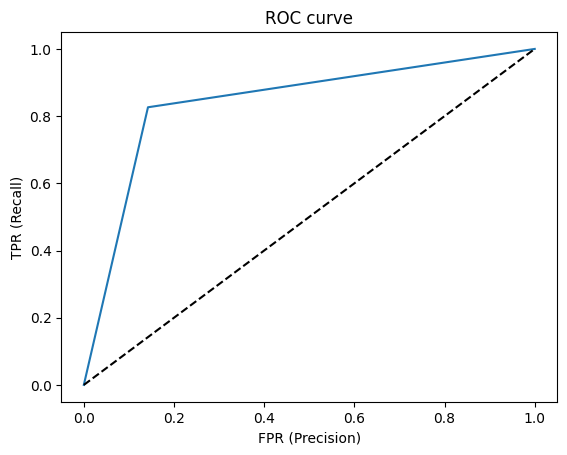
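In the leaderboard, WeightedEnsemble_L2 scores exactly the same as LightGBMXT, which is consistent with the ensemble weights `{'LightGBMXT': 1.0}` reported in the training log. The sketch below illustrates the general idea of a weighted ensemble as a weighted average of class probabilities. It is a simplified illustration under that assumption, not AutoGluon's actual implementation; the function name and the probability values are hypothetical.

```python
def weighted_ensemble(probas_by_model, weights):
    """Blend per-model class-probability rows using the given model weights.

    probas_by_model: {model_name: [[p_class0, p_class1, ...], ...]}
    weights:         {model_name: weight}; models without a weight are ignored.
    """
    total = sum(weights.values())
    first = probas_by_model[next(iter(weights))]
    n_rows, n_classes = len(first), len(first[0])
    blended = []
    for i in range(n_rows):
        row = [
            sum(w * probas_by_model[m][i][k] for m, w in weights.items()) / total
            for k in range(n_classes)
        ]
        blended.append(row)
    return blended


# Hypothetical probabilities for two rows and two classes
toy_probas = {
    "LightGBMXT": [[0.7, 0.3], [0.2, 0.8]],
    "XGBoost": [[0.4, 0.6], [0.1, 0.9]],
}

# All weight on one model: the ensemble simply reproduces that model
single = weighted_ensemble(toy_probas, {"LightGBMXT": 1.0})

# Equal weights: the ensemble averages the two models
equal = weighted_ensemble(toy_probas, {"LightGBMXT": 0.5, "XGBoost": 0.5})
print(single, equal)
```

With all the weight on a single base model, the ensemble's predictions (and hence its scores) coincide with that model's, which matches the identical `score_test` and `score_val` values in the first two leaderboard rows.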

27.3. Metrics#

https://en.wikipedia.org/wiki/Receiver_operating_characteristic

https://srdas.github.io/MLBook2/3_MachineLearningOverview.html

https://srdas.github.io/MLBook2/3_MachineLearningOverview.html#ROC-and-AUC
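As a small illustration of the ROC and AUC material linked above, the sketch below builds the ROC curve for a binary problem by sweeping a threshold over predicted scores and then integrates it with the trapezoid rule. The function names and toy data are hypothetical. In the notebook, the scores would be the positive-class column returned by `predictor.predict_proba(...)`.

```python
def roc_curve_points(y_true, scores):
    """Return (fpr, tpr) lists from sweeping a threshold over the scores.

    y_true holds 0/1 labels and scores are predicted probabilities of class 1.
    Assumes both classes are present in y_true.
    """
    pos = sum(y_true)
    neg = len(y_true) - pos
    # Visit examples from the highest score to the lowest
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        tp += label
        fp += 1 - label
        # Emit a point only after the last example of a group of tied scores
        last_of_tie = i == len(ranked) - 1 or ranked[i + 1][0] != score
        if last_of_tie:
            fpr.append(fp / neg)
            tpr.append(tp / pos)
    return fpr, tpr


def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve via the trapezoid rule."""
    return sum(
        (fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2
        for i in range(1, len(fpr))
    )


# Toy data (hypothetical): 3 positives, 3 negatives
toy_labels = [1, 1, 0, 1, 0, 0]
toy_scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
toy_fpr, toy_tpr = roc_curve_points(toy_labels, toy_scores)
toy_auc = auc_trapezoid(toy_fpr, toy_tpr)
print(toy_auc)
```

The AUC equals the fraction of (positive, negative) pairs in which the positive example receives the higher score; in the toy data, 8 of the 9 pairs are ordered correctly.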

27.4. Movie Reviews, one more time, with AG-Tabular#

train_data = pd.read_csv("NLP_data/movie_review_train.txt", sep = " ", header=None)

test_data = pd.read_csv("NLP_data/movie_review_test.txt", sep = " ", header=None)

train_data.columns = ['Label','Text']

test_data.columns = ['Label','Text']

print(train_data.shape, test_data.shape)

train_data.head()

(4001, 2) (1000, 2)

| | Label | Text |

|---|---|---|

| 0 | __label__0 | Homelessness (or Houselessness as George Carlin stated) has been an issue for years but never a plan to help those on the street that were once considered human who did everything from going to school, work, or vote for the matter. Most people think of the homeless as just a lost cause while worrying about things such as racism, the war on Iraq, pressuring kids to succeed, technology, the elections, inflation, or worrying if they'll be next to end up on the streets.<br /><br />But what if you were given a bet to live on the streets for a month without the luxuries you once had from a home,... |

| 1 | __label__1 | This film lacked something I couldn't put my finger on at first: charisma on the part of the leading actress. This inevitably translated to lack of chemistry when she shared the screen with her leading man. Even the romantic scenes came across as being merely the actors at play. It could very well have been the director who miscalculated what he needed from the actors. I just don't know.<br /><br />But could it have been the screenplay? Just exactly who was the chef in love with? He seemed more enamored of his culinary skills and restaurant, and ultimately of himself and his youthful explo... |

| 2 | __label__1 | \"It appears that many critics find the idea of a Woody Allen drama unpalatable.\" And for good reason: they are unbearably wooden and pretentious imitations of Bergman. And let's not kid ourselves: critics were mostly supportive of Allen's Bergman pretensions, Allen's whining accusations to the contrary notwithstanding. What I don't get is this: why was Allen generally applauded for his originality in imitating Bergman, but the contemporaneous Brian DePalma was excoriated for \"ripping off\" Hitchcock in his suspense/horror films? In Robin Wood's view, it's a strange form of cultural snob... |

| 3 | __label__0 | This isn't the comedic Robin Williams, nor is it the quirky/insane Robin Williams of recent thriller fame. This is a hybrid of the classic drama without over-dramatization, mixed with Robin's new love of the thriller. But this isn't a thriller, per se. This is more a mystery/suspense vehicle through which Williams attempts to locate a sick boy and his keeper.<br /><br />Also starring Sandra Oh and Rory Culkin, this Suspense Drama plays pretty much like a news report, until William's character gets close to achieving his goal.<br /><br />I must say that I was highly entertained, though this... |

| 4 | __label__1 | I don't know who to blame, the timid writers or the clueless director. It seemed to be one of those movies where so much was paid to the stars (Angie, Charlie, Denise, Rosanna and Jon) that there wasn't enough left to really make a movie. This could have been very entertaining, but there was a veil of timidity, even cowardice, that hung over each scene. Since it got an R rating anyway why was the ubiquitous bubble bath scene shot with a 70-year-old woman and not Angie Harmon? Why does Sheen sleepwalk through potentially hot relationships WITH TWO OF THE MOST BEAUTIFUL AND SEXY ACTRESSES in... |
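The preview shows fastText-style labels (`__label__0`, `__label__1`) and reviews that still contain HTML `<br />` tags. AutoGluon accepts the string labels as they are, so the following cleanup is optional. It is a minimal sketch with hypothetical helper names, assuming every label has the form `__label__<integer>`.

```python
import re

LABEL_PREFIX = "__label__"


def label_to_int(label):
    """Convert a fastText-style label such as '__label__1' to the integer 1."""
    if not label.startswith(LABEL_PREFIX):
        raise ValueError(f"unexpected label format: {label!r}")
    return int(label[len(LABEL_PREFIX):])


def strip_br(text):
    """Replace each run of <br /> tags (and surrounding whitespace) with one space."""
    return re.sub(r"(?:\s*<br\s*/?>\s*)+", " ", text).strip()


print(label_to_int("__label__0"), label_to_int("__label__1"))
print(strip_br("end up on the streets.<br /><br />But what if"))
```

On the DataFrame, these helpers could be applied with `train_data['Label'].map(label_to_int)` and `train_data['Text'].map(strip_br)`, and likewise for `test_data`. Integer 0/1 labels also make the choice of positive class explicit.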

%%time

#TRAIN THE MODEL

print("Train size =",train_data.shape," | Test size =",test_data.shape)

predictor = TabularPredictor(label='Label').fit(train_data=train_data) #, hyperparameters='multimodal')

performance = predictor.evaluate(train_data)  # in-sample score on the training data; not an estimate of out-of-sample accuracy

No path specified. Models will be saved in: "AutogluonModels/ag-20251002_222607"

Verbosity: 2 (Standard Logging)

=================== System Info ===================

AutoGluon Version: 1.4.0

Python Version: 3.12.11

Operating System: Linux

Platform Machine: x86_64

Platform Version: #1 SMP PREEMPT_DYNAMIC Sat Sep 6 09:54:41 UTC 2025

CPU Count: 2

Memory Avail: 9.97 GB / 12.67 GB (78.7%)

Disk Space Avail: 67.71 GB / 112.64 GB (60.1%)

===================================================

No presets specified! To achieve strong results with AutoGluon, it is recommended to use the available presets. Defaulting to `'medium'`...

Recommended Presets (For more details refer to https://auto.gluon.ai/stable/tutorials/tabular/tabular-essentials.html#presets):

presets='extreme' : New in v1.4: Massively better than 'best' on datasets <30000 samples by using new models meta-learned on https://tabarena.ai: TabPFNv2, TabICL, Mitra, and TabM. Absolute best accuracy. Requires a GPU. Recommended 64 GB CPU memory and 32+ GB GPU memory.

presets='best' : Maximize accuracy. Recommended for most users. Use in competitions and benchmarks.

presets='high' : Strong accuracy with fast inference speed.

presets='good' : Good accuracy with very fast inference speed.

presets='medium' : Fast training time, ideal for initial prototyping.

Using hyperparameters preset: hyperparameters='default'

Train size = (4001, 2) | Test size = (1000, 2)

Beginning AutoGluon training ...

AutoGluon will save models to "/content/drive/MyDrive/Books_Writings/NLPBook/AutogluonModels/ag-20251002_222607"

Train Data Rows: 4001

Train Data Columns: 1

Label Column: Label

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

2 unique label values: ['__label__0', '__label__1']

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during Predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression', 'quantile'])

Problem Type: binary

Preprocessing data ...

Selected class <--> label mapping: class 1 = __label__1, class 0 = __label__0

Note: For your binary classification, AutoGluon arbitrarily selected which label-value represents positive (__label__1) vs negative (__label__0) class.

To explicitly set the positive_class, either rename classes to 1 and 0, or specify positive_class in Predictor init.

Using Feature Generators to preprocess the data ...

Fitting AutoMLPipelineFeatureGenerator...

Available Memory: 10218.01 MB

Train Data (Original) Memory Usage: 5.22 MB (0.1% of available memory)

Inferring data type of each feature based on column values. Set feature_metadata_in to manually specify special dtypes of the features.

Stage 1 Generators:

Fitting AsTypeFeatureGenerator...

Stage 2 Generators:

Fitting FillNaFeatureGenerator...

Stage 3 Generators:

Fitting CategoryFeatureGenerator...

Fitting CategoryMemoryMinimizeFeatureGenerator...

Fitting TextSpecialFeatureGenerator...

Fitting BinnedFeatureGenerator...

Fitting DropDuplicatesFeatureGenerator...

Fitting TextNgramFeatureGenerator...

Fitting CountVectorizer for text features: ['Text']

CountVectorizer fit with vocabulary size = 5515

Stage 4 Generators:

Fitting DropUniqueFeatureGenerator...

Stage 5 Generators:

Fitting DropDuplicatesFeatureGenerator...

Types of features in original data (raw dtype, special dtypes):

('object', ['text']) : 1 | ['Text']

Types of features in processed data (raw dtype, special dtypes):

('category', ['text_as_category']) : 1 | ['Text']

('int', ['binned', 'text_special']) : 30 | ['Text.char_count', 'Text.word_count', 'Text.capital_ratio', 'Text.lower_ratio', 'Text.digit_ratio', ...]

('int', ['text_ngram']) : 5437 | ['__nlp__.000', '__nlp__.10', '__nlp__.10 10', '__nlp__.100', '__nlp__.11', ...]

39.9s = Fit runtime

1 features in original data used to generate 5468 features in processed data.

Train Data (Processed) Memory Usage: 41.61 MB (0.4% of available memory)

Data preprocessing and feature engineering runtime = 41.41s ...

AutoGluon will gauge predictive performance using evaluation metric: 'accuracy'

To change this, specify the eval_metric parameter of Predictor()

Automatically generating train/validation split with holdout_frac=0.12496875781054737, Train Rows: 3501, Val Rows: 500

User-specified model hyperparameters to be fit:

{

'NN_TORCH': [{}],

'GBM': [{'extra_trees': True, 'ag_args': {'name_suffix': 'XT'}}, {}, {'learning_rate': 0.03, 'num_leaves': 128, 'feature_fraction': 0.9, 'min_data_in_leaf': 3, 'ag_args': {'name_suffix': 'Large', 'priority': 0, 'hyperparameter_tune_kwargs': None}}],

'CAT': [{}],

'XGB': [{}],

'FASTAI': [{}],

'RF': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'XT': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

}

Fitting 11 L1 models, fit_strategy="sequential" ...

Fitting model: LightGBMXT ...

Fitting with cpus=1, gpus=0, mem=1.2/10.0 GB

0.88 = Validation score (accuracy)

13.57s = Training runtime

0.05s = Validation runtime

Fitting model: LightGBM ...

Fitting with cpus=1, gpus=0, mem=1.2/10.0 GB

0.868 = Validation score (accuracy)

15.27s = Training runtime

0.07s = Validation runtime

Fitting model: RandomForestGini ...

Fitting with cpus=2, gpus=0

0.856 = Validation score (accuracy)

16.56s = Training runtime

0.09s = Validation runtime

Fitting model: RandomForestEntr ...

Fitting with cpus=2, gpus=0

0.846 = Validation score (accuracy)

14.79s = Training runtime

0.11s = Validation runtime

Fitting model: CatBoost ...

Fitting with cpus=1, gpus=0, mem=5.3/10.0 GB

0.858 = Validation score (accuracy)

48.8s = Training runtime

0.19s = Validation runtime

Fitting model: ExtraTreesGini ...

Fitting with cpus=2, gpus=0

0.842 = Validation score (accuracy)

16.65s = Training runtime

0.12s = Validation runtime

Fitting model: ExtraTreesEntr ...

Fitting with cpus=2, gpus=0

0.858 = Validation score (accuracy)