35. Natural Language Generation (NLG)

NLP comprises NLU (natural language understanding) and NLG (natural language generation). NLU has been around for quite some time, whereas NLG has recently made huge strides with the creation of very large models. The largest of these models run to hundreds of billions, and in some cases trillions, of parameters!

NLG with Transformers

https://huggingface.co/tftransformers/gpt2-large

Sanjiv: I have adapted the notebook to run in our Colab accounts. You will also need the file ascii_bible.txt to run the notebook, placed in the NLP_data folder.

For the leaderboard of the latest large language models, see: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard. However, this covers only open LLMs; there are also several closed LLMs from OpenAI, Cohere, AI21, and Anthropic.

In this notebook we borrow code by Max Woolf to train a text-generating GPT-2 model using gpt-2-simple. (See the license in the next section.)

35.1. LICENSE

MIT License

Copyright (c) 2019 Max Woolf

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

35.2. Recap of Transformers and LLMs

This excellent video by Grant Sanderson (3Blue1Brown) visualizes transformers and succinctly explains the inner workings of transformers and LLMs: https://www.youtube.com/watch?v=KJtZARuO3JY
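The video walks through attention, which is the core operation of a transformer. As a companion, here is a minimal NumPy sketch of single-head causal self-attention. This is the masked variant used in GPT-style decoders such as GPT-2. It uses random toy weights and leaves out multiple heads, layer norm, residual connections, and the feed-forward block. The function name is our own and is not part of any library.

```python
import numpy as np

def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head causal self-attention for a sequence x of shape (T, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v            # queries, keys, values: (T, d_k)
    scores = q @ k.T / np.sqrt(q.shape[-1])        # (T, T) scaled dot products
    # Causal mask: token t may attend only to tokens 0..t, which is what lets a
    # GPT-style decoder generate text one token at a time, left to right.
    future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v, weights

rng = np.random.default_rng(0)
T, d_model, d_k = 4, 8, 8          # toy sizes; GPT-2 small uses d_model = 768 with 12 heads
x = rng.normal(size=(T, d_model))  # stand-in for 4 token embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, attn = causal_self_attention(x, w_q, w_k, w_v)
print(out.shape)          # (4, 8)
print(np.round(attn, 2))  # lower-triangular: no weight on future tokens
```

Each row of the printed attention matrix sums to 1. Every entry above the diagonal is 0, so no token attends to tokens that come after it.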

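from google.colab import drive
import os
drive.mount('/content/drive')        # your Drive appears under /content/drive/My Drive
os.chdir('drive/My Drive')           # relative to Colab's default working directory, /content
os.chdir('Books_Writings/NLPBook/')  # change to the folder in your Drive that contains NLP_data/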

Mounted at /content/drive

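%%capture
%matplotlib inline
# %pylab is deprecated; import the libraries it used to load explicitly
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd
import os
from IPython.display import Image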

35.3. gpt-2-simple

Here is the repository: minimaxir/gpt-2-simple (https://github.com/minimaxir/gpt-2-simple)

# %tensorflow_version 1.x   (this Colab magic is no longer supported; current gpt-2-simple runs on TensorFlow 2)
!pip install -q gpt-2-simple
import gpt_2_simple as gpt2
from datetime import datetime
from google.colab import files

Preparing metadata (setup.py) ... done
Building wheel for gpt-2-simple (setup.py) ... done

35.4. Check GPU

You can verify which GPU is active by running the cell below.

!nvidia-smi

Tue Nov 11 04:18:39 2025

(nvidia-smi table, summarized: NVIDIA-SMI and driver version 550.54.15, CUDA Version 12.4. GPU 0 is a Tesla T4 at Bus-Id 00000000:00:04.0, persistence mode Off, 43C, performance state P8, drawing 9W of a 70W cap, using 0MiB of 15360MiB memory at 0% utilization, compute mode Default. No running processes found.)
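You can also query nvidia-smi from Python, for example to stop a script early when no GPU is attached. Below is a minimal sketch that uses nvidia-smi's --query-gpu and --format options. The helper name query_gpus is hypothetical. On the runtime above it should report the Tesla T4. On a CPU-only runtime it returns None.

```python
import shutil
import subprocess

def query_gpus():
    """Return a list of 'name, total memory' strings, or None if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None  # no NVIDIA tooling on this machine (e.g. a CPU-only runtime)
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return None  # tooling present but no usable GPU/driver
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]

gpus = query_gpus()
print(gpus if gpus else "No GPU found; in Colab use Runtime -> Change runtime type.")
```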

35.5. Getting GPT-2

There are four sizes of GPT-2:

- 124M (default): the “small” model, 500MB on disk.
- 355M: the “medium” model, 1.5GB on disk.
- 774M: the “large” model. It cannot currently be finetuned with Colaboratory but can be used to generate text from the pretrained model (see later in the notebook).
- 1558M: the “extra large”, true model. It will not work if a K80/P4 GPU is attached to the notebook (like 774M, it cannot be finetuned).
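The on-disk sizes in the list follow almost directly from the parameter counts. Here is a rough check. It assumes each parameter is stored as a 4-byte float32 (an assumption about the checkpoint format) and ignores the small vocabulary and config files.

```python
# Assumption: parameters are stored as 32-bit floats (4 bytes each);
# small files such as vocab.bpe and encoder.json are ignored.
BYTES_PER_PARAM = 4

def checkpoint_size_gb(n_params, bytes_per_param=BYTES_PER_PARAM):
    """Approximate checkpoint size in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9

GPT2_SIZES = {"124M": 124e6, "355M": 355e6, "774M": 774e6, "1558M": 1558e6}
for name, n_params in GPT2_SIZES.items():
    print(f"{name:>5}: ~{checkpoint_size_gb(n_params):.2f} GB")
```

This prints about 0.50 GB and 1.42 GB for the two smaller models, which lines up with the 500MB and 1.5GB quoted above. The two larger models come to roughly 3.10 GB and 6.23 GB. That is part of why they are harder to finetune on a single Colab GPU.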

Larger models have more knowledge, but take longer to finetune and longer to generate text. Specify which base model to use in the code block below.

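The next cell downloads the model and saves it under models/<model_name>, relative to the current working directory. In the default Colab setup (working directory /content), this copy isn’t permanently saved in the Colaboratory VM, and you’ll have to redownload it if you want to retrain it at a later time. Because this notebook first changes into a Google Drive folder, the model is written to Drive instead.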

We use the smallest GPT-2 model to do a quick implementation.

%%time

gpt2.download_gpt2(model_name="124M")

Fetching checkpoint: 1.05Mit [00:00, 5.07Git/s]

Fetching encoder.json: 1.05Mit [00:01, 999kit/s]

Fetching hparams.json: 1.05Mit [00:00, 5.86Git/s]

Fetching model.ckpt.data-00000-of-00001: 498Mit [02:09, 3.86Mit/s]

Fetching model.ckpt.index: 1.05Mit [00:00, 4.26Git/s]

Fetching model.ckpt.meta: 1.05Mit [00:00, 1.26Mit/s]

Fetching vocab.bpe: 1.05Mit [00:00, 1.31Mit/s]

CPU times: user 1.09 s, sys: 468 ms, total: 1.55 s

Wall time: 2min 34s
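The download takes a couple of minutes (2min 34s above), so it can help to skip it when the files are already present. This applies, for example, when the working directory is on Google Drive, as it is in this notebook. Below is a sketch of a hypothetical helper that checks for the files listed in the download log. It assumes gpt-2-simple's default models/<model_name> layout.

```python
import os

# File names taken from the download log above (assumed to be the full set).
GPT2_MODEL_FILES = [
    "checkpoint", "encoder.json", "hparams.json",
    "model.ckpt.data-00000-of-00001", "model.ckpt.index",
    "model.ckpt.meta", "vocab.bpe",
]

def missing_model_files(model_name, models_dir="models"):
    """Return the expected GPT-2 files that are not present under models_dir/model_name."""
    model_path = os.path.join(models_dir, model_name)
    return [f for f in GPT2_MODEL_FILES
            if not os.path.isfile(os.path.join(model_path, f))]

# Usage in the notebook (assumes gpt_2_simple is imported as gpt2):
# if missing_model_files("124M"):
#     gpt2.download_gpt2(model_name="124M")
```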

I have provided several example texts on which to fine-tune; uncomment the one you want to use:

## EXAMPLE FILES (uncomment one)
file_name = "NLP_data/ascii_bible.txt"  # generates biblical text
# file_name = "NLP_data/canterbury_tales_chaucer.txt"  # generates poetry-like text
# file_name = "NLP_data/history_indian_philosophy.txt"  # generates plain prose
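Before finetuning, it is worth confirming that file_name points at the right file and that the file contains the text you expect. Below is a minimal sketch with two hypothetical helpers, describe_text and describe_text_file. Note that gpt-2-simple reports the dataset size in BPE tokens (1564935 tokens in the training log below). That figure will not match a whitespace word count.

```python
import os

def describe_text(text, n_preview_lines=5):
    """Basic statistics and a short preview of a training text."""
    lines = text.splitlines()
    return {
        "characters": len(text),
        "words": len(text.split()),   # whitespace-separated words, not BPE tokens
        "lines": len(lines),
        "preview": lines[:n_preview_lines],
    }

def describe_text_file(path, n_preview_lines=5):
    """Read a plain-text training file and describe it; fail early if the path is wrong."""
    if not os.path.isfile(path):
        raise FileNotFoundError(f"{path} not found; check that it is in the NLP_data folder")
    with open(path, encoding="utf-8", errors="replace") as f:
        return describe_text(f.read(), n_preview_lines)

# Usage (assumes file_name was set in the cell above):
# stats = describe_text_file(file_name)
# print(stats["characters"], stats["words"], stats["lines"])
# print("\n".join(stats["preview"]))
```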

35.6. Finetune GPT-2

The next cell starts the actual finetuning of GPT-2. It creates a persistent TensorFlow session that stores the training config, then runs the training for the specified number of steps. (To have the finetuning run indefinitely, set steps=-1.)

The model checkpoints are saved in checkpoint/run1 (relative to the working directory) by default. A checkpoint is saved every save_every steps (50 in the finetune cell below) and when the cell is stopped.

Colab may time out after about 4 hours of training; make sure you end training and save the results so you don’t lose them!

IMPORTANT NOTE: If you want to rerun this cell, restart the VM first (Runtime -> Restart Runtime). You will need to rerun imports but not recopy files.

Other optional-but-helpful parameters for gpt2.finetune:

- restore_from: Set to fresh to start training from the base GPT-2, or set to latest to restart training from an existing checkpoint.
- sample_every: Number of steps between printed example outputs.
- print_every: Number of steps between printed training progress updates.
- learning_rate: Learning rate for the training (default 1e-4; can lower to 1e-5 if you have <1MB of input data).
- run_name: Subfolder within checkpoint to save the model. This is useful if you want to work with multiple models (you will also need to specify run_name when loading the model).
- overwrite: Set to True if you want to continue finetuning an existing model (with restore_from='latest') without creating duplicate copies.
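It is easy to get the interaction between steps, print_every, sample_every and save_every wrong. Below is a simplified model of when each event fires. It assumes that events happen on multiples of each interval and that a final checkpoint is written at the last step. This is an approximation written for this notebook, not gpt-2-simple's actual training loop. It uses this run's settings (steps=150, print_every=10, sample_every=200, save_every=50).

```python
def training_schedule(steps, print_every, sample_every, save_every):
    """Approximate which steps print progress, generate samples, and save checkpoints.

    Simplified model (an assumption): events fire on multiples of each interval,
    and a final checkpoint is written at the last step. Requires steps > 0
    (steps=-1, i.e. train indefinitely, has no fixed schedule).
    """
    def multiples(every):
        return [s for s in range(1, steps + 1) if s % every == 0]

    saves = multiples(save_every)
    if steps not in saves:
        saves.append(steps)  # a checkpoint is also written when training ends
    return {"print": multiples(print_every),
            "sample": multiples(sample_every),
            "save": saves}

schedule = training_schedule(steps=150, print_every=10, sample_every=200, save_every=50)
print(schedule["save"])    # [50, 100, 150]
print(schedule["sample"])  # []  (sample_every is larger than steps)
```

This matches the log of the run: progress every 10 steps, checkpoints model-50, model-100 and model-150, and no samples printed during training.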

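%%time
sess = gpt2.start_tf_sess()
gpt2.finetune(sess,
              dataset=file_name,
              model_name='124M',
              steps=150,             # total training steps for this run
              restore_from='fresh',  # start from the base GPT-2 weights
              run_name='run1',       # checkpoints go to checkpoint/run1
              print_every=10,
              sample_every=200,      # larger than steps, so no samples are printed in this run
              save_every=50,
              # reuse=True
              )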

Loading checkpoint models/124M/model.ckpt

Loading dataset...

100%|██████████| 1/1 [00:05<00:00, 5.46s/it]

dataset has 1564935 tokens

Training...

[10 | 25.59] loss=2.20 avg=2.20

[20 | 46.66] loss=1.94 avg=2.07

[30 | 68.12] loss=1.75 avg=1.96

[40 | 89.96] loss=2.06 avg=1.99

[50 | 112.31] loss=1.95 avg=1.98

Saving checkpoint/run1/model-50

[60 | 142.42] loss=1.87 avg=1.96

[70 | 165.38] loss=1.88 avg=1.95

[80 | 188.83] loss=1.80 avg=1.93

[90 | 212.66] loss=1.88 avg=1.92

[100 | 236.39] loss=1.74 avg=1.90

Saving checkpoint/run1/model-100

WARNING:tensorflow:From /usr/local/lib/python3.12/dist-packages/tensorflow/python/training/saver.py:1068: remove_checkpoint (from tensorflow.python.checkpoint.checkpoint_management) is deprecated and will be removed in a future version.

Instructions for updating:

Use standard file APIs to delete files with this prefix.

[110 | 262.36] loss=1.99 avg=1.91

[120 | 285.99] loss=1.76 avg=1.90

[130 | 309.69] loss=1.69 avg=1.88

[140 | 333.27] loss=1.85 avg=1.88

[150 | 356.77] loss=1.70 avg=1.87

Saving checkpoint/run1/model-150

CPU times: user 3min 44s, sys: 34 s, total: 4min 18s

Wall time: 6min 21s
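The progress lines above follow the pattern [step | elapsed seconds] loss=... avg=.... To plot the learning curve, a small regular expression can pull these numbers out of the output. Below is a sketch that assumes the log format shown above. parse_training_log is a hypothetical helper, not part of gpt-2-simple.

```python
import re

# Matches progress lines such as "[10 | 25.59] loss=2.20 avg=2.20"
LOG_LINE = re.compile(r"\[(\d+)\s*\|\s*([\d.]+)\]\s*loss=([\d.]+)\s*avg=([\d.]+)")

def parse_training_log(log_text):
    """Return (step, elapsed_seconds, loss, avg_loss) tuples found in finetune output."""
    return [
        (int(m.group(1)), float(m.group(2)), float(m.group(3)), float(m.group(4)))
        for m in LOG_LINE.finditer(log_text)
    ]

# A few lines copied from the log above; other lines (e.g. "Saving ...") are ignored.
sample_log = """
[10 | 25.59] loss=2.20 avg=2.20
[20 | 46.66] loss=1.94 avg=2.07
Saving checkpoint/run1/model-50
[150 | 356.77] loss=1.70 avg=1.87
"""
records = parse_training_log(sample_log)
first, last = records[0], records[-1]
print(f"avg loss went from {first[3]} to {last[3]} over {last[0]} steps")
```

In the notebook you can paste the output into a string. Alternatively, replace %%time with %%capture captured in the training cell and pass captured.stdout; this hides the live output while training runs. The avg column is a smoothed running average, so it moves more steadily than the per-step loss. Plot it with plt.plot([r[0] for r in records], [r[3] for r in records]).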

35.7. Save the model

After the model is trained, you can copy the checkpoint folder to your own Google Drive. (Look for a folder called checkpoint.)

If you want to download it to your personal computer, it’s strongly recommended that you copy it to Google Drive first and then download it from there. The checkpoint folder is copied as a .tar archive; you can download it and unpack it locally.
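To unpack the archive on your own computer, you can run tar -xf on the command line or use Python's tarfile module. Below is a sketch that assumes the downloaded file is a plain tar archive. gpt-2-simple names it after the run folder, e.g. checkpoint_run1.tar, but check the actual name in your Drive. extract_checkpoint_archive is a hypothetical helper. The demo builds a tiny stand-in archive so that it runs anywhere.

```python
import os
import tarfile
import tempfile

def extract_checkpoint_archive(archive_path, dest_dir="."):
    """Unpack a checkpoint tar archive into dest_dir and list what is there afterwards."""
    if not tarfile.is_tarfile(archive_path):
        raise ValueError(f"{archive_path} is not a tar archive")
    # Use the safer 'data' extraction filter where this Python version supports it.
    kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
    with tarfile.open(archive_path) as tar:
        tar.extractall(path=dest_dir, **kwargs)  # only extract archives you trust
    return sorted(os.listdir(dest_dir))

# Demo with a stand-in archive containing checkpoint/run1/hparams.json
with tempfile.TemporaryDirectory() as tmp:
    run_dir = os.path.join(tmp, "checkpoint", "run1")
    os.makedirs(run_dir)
    with open(os.path.join(run_dir, "hparams.json"), "w") as f:
        f.write("{}")
    archive = os.path.join(tmp, "checkpoint_run1.tar")
    with tarfile.open(archive, "w") as tar:
        tar.add(os.path.join(tmp, "checkpoint"), arcname="checkpoint")
    out_dir = os.path.join(tmp, "restored")
    os.makedirs(out_dir)
    restored = extract_checkpoint_archive(archive, out_dir)
    print(restored)  # ['checkpoint']
```

Extracting into the folder where you run gpt-2-simple recreates checkpoint/run1. By default, that is where gpt2.load_gpt2(sess, run_name='run1') looks for the model.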

%%time

gpt2.copy_checkpoint_to_gdrive(run_name='run1')

CPU times: user 164 ms, sys: 1.01 s, total: 1.17 s

Wall time: 24.2 s

You’re done! Feel free to go to the Generate Text From The Trained Model section to generate text based on your retrained model.

35.8. Load a Trained Model Checkpoint

Running the next cell will copy the .tar checkpoint archive from your Google Drive into the Colaboratory VM.

gpt2.copy_checkpoint_from_gdrive(run_name='run1')

The next cell loads the retrained model checkpoint and the metadata needed to generate text.

IMPORTANT NOTE: If you want to rerun this cell, restart the VM first (Runtime -> Restart Runtime). You will need to rerun imports but not recopy files.

# Uncomment to load the checkpoint in a fresh session (e.g. after restarting the VM):
# sess = gpt2.start_tf_sess()
# gpt2.load_gpt2(sess, run_name='run1')

35.9. Generate Text From The Trained Model

After you’ve trained the model or loaded a retrained model from a checkpoint, you can generate text. gpt2.generate generates a single text from the loaded model.

%%time

gpt2.generate(sess, run_name='run1')

010:011 And Moses commanded the children of Israel, saying,

010:012 Behold, they shall look, and all the land and the

people of the land shall look, and all the people of the

land shall be astonished, and be afraid.

010:013 And Moses commanded the people of Israel to mount upon the mount

of the mountain; and the people of Israel were with him.

010:014 And the people were astonished at that day, that the sun was not

darkened, which was the hour of the night.

010:015 And the people of Israel were carried to the mount of the mount, and

they were found to their tents; and the people of Israel

came out of the mount, and said, The mount of the mount is

upon the mount of the mount.

010:016 And the people of Israel said, The mount of the mount is upon

the mount of the mount, and the people of Israel have come out of

the mount.

010:017 And the people of Israel were astonished at that day, that the

sun was not darkened, which was the hour of the night.

010:018 And the people of Israel came out of the mount, and said, The mount of the

mount is upon the mount of the mount.

010:019 And the people of Israel were carried to the mount of the mount. And

the children of Israel were with him.

010:020 And the people of Israel were greatly moved, and cried, All

this is the LORD's judgment:

010:021 And the LORD said, My servant Zedekiah the son of Manasseh,

who is my servant, and my servant Elias the son of Manasseh,

who is my servant, and I am them that I brought out of the

wilderness.

010:022 And Moses said to the children of Israel, Save the children of Israel,

that the house of Israel shall be taken away:

010:023 Take no children of Israel from the house of the LORD, save the children of

Israel.

010:024 And the LORD said unto Moses, Go and fetch the children of

Israel, and bring them as a stranger, and bring them out of the

wilderness.

010:025 And the LORD said unto Moses, Bring the children of Israel

out of the sight of the children of Israel, and bring them

in.

010:026 And Moses said unto the children of Israel, Go, fetch the children

of Israel, and bring them out of the sight of the children of

Israel.

010:027 And the LORD said, I will give them as a stranger, and they shall

be brought out of the sight of the children of Israel.

010:028 And the LORD said, Go, fetch the children of Israel, and bring

them out of the sight of the children of Israel.

010:029 And Moses said, Go, fetch the children of Israel, and bring

them out of the sight of the children of Israel.

010:030 And the LORD said unto Moses, Go, fetch the children of Israel,

and bring them out of the sight of the children of Israel, and

they shall be brought out of the sight of the children of

Israel.

010:031 And Moses said unto the children of Israel, Go, fetch the children

of Israel out of the sight of the children of Israel.

010:032 And the LORD said, I will give you as a stranger, and they shall

be brought out of the sight of the children of Israel.

010:033 And the LORD said, I will give you upon the mount of the mount a

hide,

CPU times: user 12.7 s, sys: 438 ms, total: 13.2 s

Wall time: 14.2 s

If you’re creating an API based on your model and need to pass the generated text elsewhere, you can do text = gpt2.generate(sess, return_as_list=True)[0]

You can also pass in a prefix to the generate function to force the text to start with a given character sequence and generate text from there (good if you add an indicator when the text starts).

To generate several texts at once, specify nsamples. Passing a batch_size generates multiple samples in parallel, which can give a large speedup (in Colaboratory, set a maximum of 20 for batch_size).

Other optional-but-helpful parameters for gpt2.generate and friends:

- length: Number of tokens to generate (default 1023, the maximum).
- temperature: The higher the temperature, the crazier the text (default 0.7; recommended to keep between 0.7 and 1.0).
- top_k: Limits the generated guesses to the top k guesses (default 0, which disables the behavior; if the generated output is super crazy, you may want to set top_k=40).
- top_p: Nucleus sampling: limits the generated guesses to the smallest set of tokens whose cumulative probability reaches top_p (gets good results on a dataset with top_p=0.9).
- truncate: Truncates the generated text at the first occurrence of a given sequence, excluding that sequence (e.g. if truncate='<|endoftext|>', the returned text will include everything before the first <|endoftext|>). It may be useful to combine this with a smaller length if the input texts are short.
- include_prefix: If using truncate and include_prefix=False, the specified prefix will not be included in the returned text.
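To make temperature, top_k and top_p concrete, here is a NumPy sketch of how each one reshapes the probability distribution over the next token. It is a simplified illustration written for this section, not gpt-2-simple's TensorFlow code. The function name and the toy logits are hypothetical.

```python
import numpy as np

def sample_next_token(logits, temperature=0.7, top_k=0, top_p=1.0, rng=None):
    """Pick a token id from raw logits using temperature, top-k and nucleus (top-p) filtering."""
    rng = np.random.default_rng() if rng is None else rng
    logits = np.asarray(logits, dtype=np.float64) / temperature  # <1 sharpens, >1 flattens

    if top_k > 0:  # keep only the k highest-scoring tokens (0 disables, as described above)
        kth_best = np.sort(logits)[-top_k]
        logits = np.where(logits < kth_best, -np.inf, logits)

    probs = np.exp(logits - logits.max())   # softmax; filtered tokens get probability 0
    probs /= probs.sum()

    if top_p < 1.0:  # nucleus: smallest set of tokens whose cumulative probability >= top_p
        order = np.argsort(probs)[::-1]
        cumulative = np.cumsum(probs[order])
        n_keep = np.searchsorted(cumulative, top_p) + 1
        keep = order[:n_keep]
        filtered = np.zeros_like(probs)
        filtered[keep] = probs[keep]
        probs = filtered / filtered.sum()

    return int(rng.choice(len(probs), p=probs)), probs

rng = np.random.default_rng(42)
toy_logits = [2.0, 1.0, 0.1, -1.0]  # hypothetical scores for a 4-token vocabulary
for settings in [{"temperature": 0.7}, {"temperature": 1.5}, {"top_k": 2}, {"top_p": 0.9}]:
    _, probs = sample_next_token(toy_logits, rng=rng, **settings)
    print(settings, np.round(probs, 3))
```

Raising the temperature spreads probability onto unlikely tokens, which is where the "crazier" text comes from. top_k and top_p both cut off the long tail, but top_p adapts the cut to how peaked the distribution is.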

%%time
# Choose the prefix you want and then let it rip!
gpt2.generate(sess,
              length=250,
              temperature=0.7,
              prefix="Destiny is",
              nsamples=5,
              batch_size=5
              )

Destiny is the punishment of the Gentiles, and the judgment of the dead, and the kingdom of God.

008:019 For the Lord Jesus was the Son of man, and the redemption of the

world; and the redeeming of the world is the kingdom of God in

heaven.

008:020 For nothing is more glorious than the Lord Jesus, even the glory of

the Lord Jesus, that he is the Son, and the Saviour, and the

Son of God, which is in heaven, and in the earth, and in

heaven, and in the sea, and in the land, and in the depths thereof, and

in the sea and in the land, and in the sea, and in the land, and in the

land, and in the sea, and in the land: and he was the

God of the children of Israel, and the God of Israel, and the God of

====================

Destiny is near;

021:018 And thou shalt not be afraid, neither shall any man go down

unto the pit, neither shall any man go down unto the pit.

021:019 And it shall come to pass, that the LORD thy God shall

put an end to all Israel, and to all the heathen, and to all

the people of the land which is in the land of Egypt, and to all

the people of the earth, as the LORD thy God hath said: so shall

Israel be utterly destroyed.

021:020 And when the LORD thy God had taken away all the heathen, and

all the heathen that dwelt in the land of Egypt, and had

taken away all the heathen that dwelt in the land of

Egypt, and had taken away all the heathen, they shall

all be turned into beasts, and with them shall dwell

====================

Destiny is not the same as the plague,

and evil.

018:013 And they say, What is written in the law, that if the Lord

should establish the kingdom of heaven, he should

destroy the kingdoms of the Egyptians, and the Egyptians, and

the Egyptians, and the Egyptians, and the Egyptians, and the Egyptians, and

the Egyptians, and the Egyptians, and the Egyptians, and the

Egyptians, and the Egyptians, and the Egyptians, and the

Egyptians, and the Egyptians, and the Egyptians, and the Egyptians, and

the Egyptians, and the Egyptians, and the Egyptians, and the

Egyptians, and the Egyptians, and the Egyptians, and the

Egyptians, and the Egyptians, and the Egyptians, and the

Egyptians, and the Egyptians, and the Egyptians, and the

====================

Destiny is greater than the glory of the LORD.

012:003 And he saw the king of Babylon, and the king of Assyria,

and the king of Babylon, and all the men of the land of Assyria,

012:004 And all the sons of the Assyrians, and all the men of Syria,

and all the men of the land of Assyria, when they came to the

land of their fathers: for they had not left their

fathers, but the LORD had given them a great land over which he

had given them to dwell.

012:005 And all the Assyrians, and all the men of the land of Assyria, rode

with the men of the Assyrians, and went forth into the wilderness thereof,

and took great horses, and went and stood upon the high places of

Assyria.

012:006 And the king of Assyria, and all the men of

====================

Destiny is an abomination.

016:013 And the LORD spake unto Moses,

016:014 And Moses answered the word of the LORD, saying,

016:015 As for the LORD, he hath called me, and I call him

the father of Israel. And the children of Israel went

out, and took the children of David, and their seed, and

all their possession.

016:016 And the LORD said unto Moses, Tell me, when the children of

Israel shall be able to go unto the land of Egypt,

and to see the land of Egypt, how they shall go?

016:017 And Moses said, What shall I say unto my children?

016:017 They have not seen the land of Egypt, or the land of

Egypt, but they have heard the voice of the LORD, saying,

Thou shalt not go into the land of Egypt, neither shalt thou

====================

CPU times: user 6.59 s, sys: 176 ms, total: 6.76 s

Wall time: 7.43 s

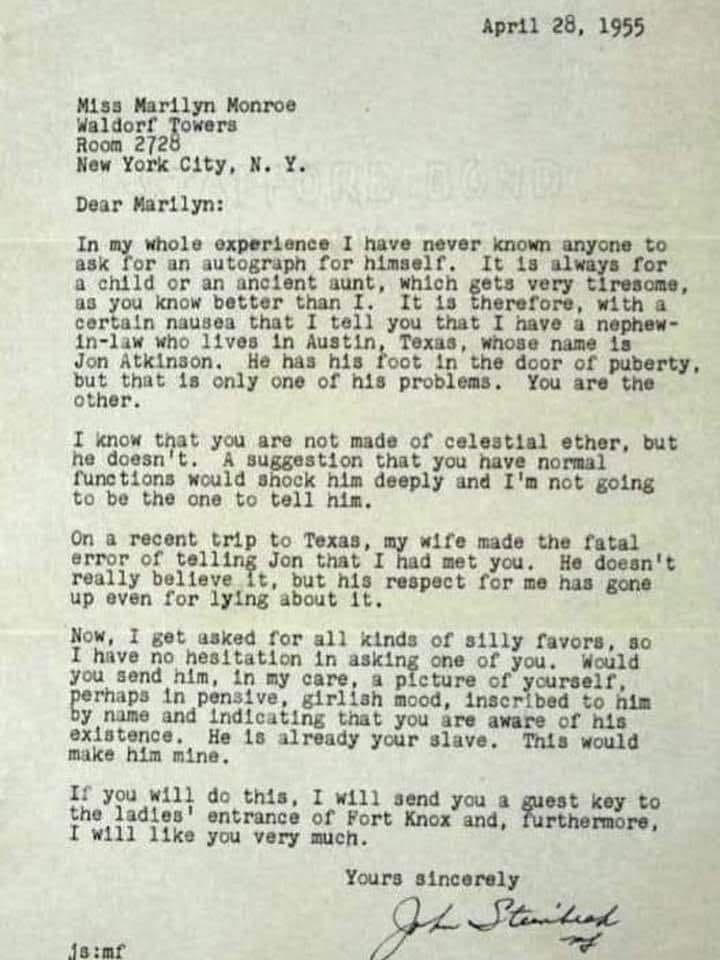

But will AI truly learn to write well? Probably. Look at the letter by John Steinbeck shown above, and ask: can an AI write like this?

In 2022, we saw AIs write remarkably well-informed text. Models such as Google’s LaMDA (https://blog.google/technology/ai/lamda/) are astonishingly literate. The paper is here: https://arxiv.org/abs/2201.08239

You can try a conversational system of this kind using the interface at https://beta.character.ai.

35.10. Large Language Models

Training large language models (LLMs) is extremely expensive. Training a generative model such as GPT-3 (https://en.wikipedia.org/wiki/GPT-3) is estimated to have cost $12M.

The pre-training dataset for LaMDA consists of 2.97B documents, 1.12B dialogs, and 13.39B dialog utterances, for a total of 1.56T words. LaMDA was pre-trained on 1024 TPU-v3 chips for a total of about 57.7 days, with 256K tokens per batch. The carbon footprint of this pre-training is approximately equivalent to 22 passengers taking a round trip between San Francisco and New York (1.2 tCO2e per passenger).
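These LaMDA figures are easier to grasp as totals. Here is a quick back-of-the-envelope calculation that uses only the numbers quoted above.

```python
# Figures quoted above for LaMDA pre-training.
tpu_chips = 1024
training_days = 57.7
chip_days = tpu_chips * training_days
print(f"{chip_days:,.0f} TPU-v3 chip-days, or about {chip_days * 24:,.0f} chip-hours")

# The flight comparison: 22 passengers x 1.2 tCO2e per San Francisco-New York round trip.
passengers = 22
tco2e_per_passenger = 1.2
print(f"Implied footprint: about {passengers * tco2e_per_passenger:.1f} tCO2e")
```

This gives about 59,085 TPU-v3 chip-days (roughly 1.4 million chip-hours) and about 26.4 tCO2e for the 22-passenger comparison.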

BLOOM (176B parameters) is another large language model: https://bigscience.huggingface.co/blog/bloom. As stated by the site: “With its 176 billion parameters, BLOOM is able to generate text in 46 natural languages and 13 programming languages. For almost all of them, such as Spanish, French and Arabic, BLOOM will be the first language model with over 100B parameters ever created. This is the culmination of a year of work involving over 1000 researchers from 70+ countries and 250+ institutions, leading to a final run of 117 days (March 11 - July 6) training the BLOOM model on the Jean Zay supercomputer in the south of Paris, France thanks to a compute grant worth an estimated €3M from French research agencies CNRS and GENCI.” BLOOM is an example of open, community-driven LLM development, in contrast to models built by large tech companies.

BLOOM also advocates Responsible AI via its new license, RAIL: https://bigscience.huggingface.co/blog/the-bigscience-rail-license. This connects to the discussion of ML Explainability, one aspect of Responsible AI.

Stable Diffusion is an open-source text-to-image model: https://stability.ai/blog/stable-diffusion-public-release. Here is an impressive video on video and image generation: https://www.youtube.com/watch?v=iv-5mZ_9CPY

Both Stable Diffusion and BLOOM can be deployed through Amazon SageMaker JumpStart: https://aws.amazon.com/about-aws/whats-new/2022/11/sagemaker-jumpstart-stable-diffusion-bloom-models/

A collection of links to LLMs on Github: https://gist.github.com/rain-1/eebd5e5eb2784feecf450324e3341c8d

Five Years of GPTs: https://finbarr.ca/five-years-of-gpt-progress/

35.11. Using BLOOM

This is an excellent source for example code: https://amazon.awsapps.com/workdocs/index.html#/document/0beeca78eaca3b53f2a3beb37b3d515848ccb8965c9241c846bf44b37b21203a

35.12. Building LLMs from scratch

If you want a deeper understanding of LLMs, this 3-hour presentation by Sebastian Raschka is a nice resource. See the Substack post: https://magazine.sebastianraschka.com/p/building-llms-from-the-ground-up

35.13. Thinking Machines AI

Recently, a team of former OpenAI researchers founded Thinking Machines Lab, which aims to democratize fine-tuning for NLG. See: https://thinkingmachines.ai