21. Document Similarity

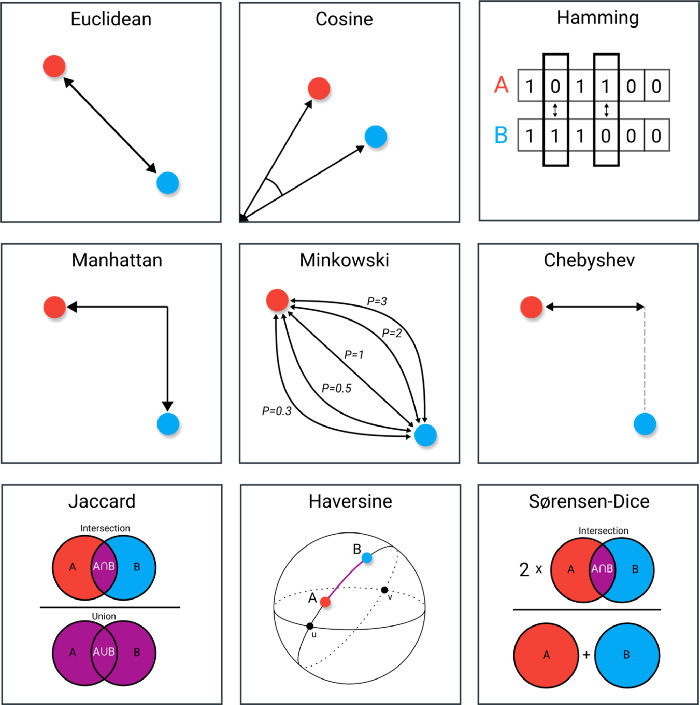

There are several measures of similarity between documents. Some, but not all, exploit the vector representations of documents.

from google.colab import drive

drive.mount('/content/drive') # Add My Drive/<>

import os

os.chdir('drive/My Drive')

os.chdir('Books_Writings/NLPBook/')

Mounted at /content/drive

%%capture

%pylab inline

import pandas as pd

import os

from IPython.display import Image

# %load_ext rpy2.ipython

21.1. Cosine Similarity in the Text Domain

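In this segment we look at some popular text-similarity functions that are used in practice. One of the first things we often want to do is find similar texts or sentences (web search is one application). Since documents are vectors in the term-document matrix (TDM), we can look for the closest vectors by computing the distance, or the angle, between them. The cosine similarity of two document vectors \(A\) and \(B\) is

\[
\cos(\theta) = \frac{A \cdot B}{||A|| \; ||B||}
\]

where \(||A|| = \sqrt{A \cdot A}\), the square root of the dot product of \(A\) with itself, is the norm (length) of \(A\). This gives the cosine of the angle \(\theta\) between the two vectors. It is 0 for orthogonal vectors (documents with no words in common) and 1 for vectors pointing in the same direction, such as identical documents. Because word counts are never negative, the cosine similarity of two count vectors always lies between 0 and 1.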

For a collection of distance measures, see: https://towardsdatascience.com/9-distance-measures-in-data-science-918109d069fa

# COSINE SIMILARITY

import numpy as np

A = np.array([0,3,4,1,7,0,1])

B = np.array([0,4,3,0,6,1,1])

# Dot product divided by the product of the two norms

cos = A.dot(B) / (np.sqrt(A.dot(A)) * np.sqrt(B.dot(B)))

print('Cosine similarity = ',cos)

# Using sklearn: returns the 2 x 2 matrix of pairwise cosine similarities

from sklearn.metrics.pairwise import cosine_similarity

cosine_similarity([A, B], dense_output=True)

Cosine similarity = 0.9682727993019339

array([[1.       , 0.9682728],
       [0.9682728, 1.       ]])
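The vectors A and B above were typed in by hand. As a minimal sketch of where such vectors come from, the function below builds word-count vectors for two raw texts over their joint vocabulary (in effect, a two-document TDM) and applies the same formula. It assumes lowercasing plus whitespace splitting as the tokenizer; the name `cosine_from_text` is hypothetical, and returning 0.0 for an empty text is a convention chosen here, not something from the text.

```python
import math
from collections import Counter


def cosine_from_text(doc1, doc2):
    """Cosine similarity of two texts from raw word counts (hypothetical helper)."""
    # Assumption: lowercasing + whitespace splitting stands in for a real tokenizer.
    counts1 = Counter(doc1.lower().split())
    counts2 = Counter(doc2.lower().split())

    # The joint vocabulary plays the role of the TDM rows; each text is one column.
    vocab = sorted(set(counts1) | set(counts2))
    a = [counts1[w] for w in vocab]
    b = [counts2[w] for w in vocab]

    # math.fsum sidesteps the built-in sum(), which %pylab may replace with NumPy's.
    dot = math.fsum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(math.fsum(x * x for x in a))
    norm_b = math.sqrt(math.fsum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention for an empty text (an assumption)
    return dot / (norm_a * norm_b)


print(cosine_from_text("the cat sat on the mat", "the cat sat on the mat"))
print(cosine_from_text("the cat sat on the mat", "the dog sat on the log"))
print(cosine_from_text("apple banana", "cherry date"))
```

The first call gives 1 (up to rounding) and the last gives 0, since those texts share no words. For the middle pair, the dot product is 6 and each norm is \(\sqrt{8}\), so the similarity is about 0.75.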

21.2. Minimum Edit Distance

The minimum edit distance (MED) is the minimum number of single-element edits (insertions, deletions, or substitutions) needed to transform one string into another. The strings could be words, sentences, or even whole documents, compared character by character or word by word. This is also known as the Levenshtein distance.

For example, to convert Apple into Amazon, we substitute p->m, p->a, l->z, and e->o, and then insert n: 5 simple operations in all.

Properties:

It is zero if and only if the two strings are identical.

It is at least the difference in the lengths of the two strings.

It is at most the length of the longer string.

It satisfies the triangle inequality: the Levenshtein distance between two strings is no greater than the sum of their Levenshtein distances from a third string.
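The exact Levenshtein distance is usually computed by dynamic programming. The distance between the first i items of one sequence and the first j items of the other is the cheapest of three neighboring subproblems: delete, insert, or substitute. Below is a minimal sketch of that recurrence, keeping one row of the table at a time. The name `levenshtein` is ours and does not come from the source. It works on strings or on lists of word tokens, and it calls `builtins.min` (as the code in this section does) because `%pylab` replaces the built-in `min`.

```python
import builtins  # %pylab shadows the built-in min(), so call it explicitly


def levenshtein(s, t):
    """Minimum number of insertions, deletions and substitutions turning s into t.

    s and t can be strings (character level) or lists of tokens (word level).
    """
    # prev[j] = distance between the first i-1 items of s and the first j items of t
    prev = list(range(len(t) + 1))       # row 0: build t[:j] from nothing with j inserts
    for i in range(1, len(s) + 1):
        curr = [i] + [0] * len(t)        # column 0: delete all i items of s[:i]
        for j in range(1, len(t) + 1):
            cost = 0 if s[i - 1] == t[j - 1] else 1
            curr[j] = builtins.min(
                prev[j] + 1,          # delete s[i-1]
                curr[j - 1] + 1,      # insert t[j-1]
                prev[j - 1] + cost,   # substitute s[i-1] -> t[j-1] (free if equal)
            )
        prev = curr
    return prev[-1]


print(levenshtein("Apple", "Amazon"))    # 5, as in the worked example
print(levenshtein("Amazon", "Amazing"))  # 2
print(levenshtein("abc", "xabc"))        # 1: a single insertion at the front
```

By contrast, the position-by-position `min_edit_distance` function that follows returns 4 for ("abc", "xabc"). One insertion at the front misaligns every later position, which is why that function can overstate the distance for longer texts.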

See the Lazy Prices paper: https://hbswk.hbs.edu/item/lazy-prices, which uses MED for document similarity. Get the published paper through the library for free: https://onlinelibrary.wiley.com/doi/epdf/10.1111/jofi.12885

(Adapted from kristinauko/challenge_100)

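import builtins  # %pylab replaces the built-in min() with NumPy's version

# Position-by-position approximation of the edit distance: the difference in
# length plus the number of mismatched positions in the overlapping part.
# This describes a valid edit script (substitute the mismatches, then insert or
# delete the leftover items), so it is an upper bound on the Levenshtein
# distance. It can overstate that distance when an insertion or deletion shifts
# all later positions. Works on strings or on lists of tokens.

def min_edit_distance(string1, string2):

    if len(string1) > len(string2):

        difference = len(string1) - len(string2)  # extra items to delete

    elif len(string2) > len(string1):

        difference = len(string2) - len(string1)  # extra items to insert

    else:

        difference = 0

    for i in range(builtins.min(len(string1), len(string2))):

        if string1[i] != string2[i]:

            difference += 1  # one substitution per mismatched position

    return difference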

print(min_edit_distance("Amazon", "Apple"))

print(min_edit_distance("Amazon", "Amazing"))

5

2

21.3. Simple Similarity

(Example: Used in the Lazy Prices paper.)

This measure compares two documents word by word or character by character. It takes an old document \(D_1\) and a new document \(D_2\), counts the words added, deleted, and changed, and normalizes this count by the total number of words in the two documents.

It is a simple side-by-side comparison method, much like “Track Changes” in Microsoft Word or the diff command in a Unix/Linux terminal.
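The ndiff-based code below counts a changed word as one deletion plus one addition. If you want additions, deletions, and changes reported separately, as the description above lists them, one option is `difflib.SequenceMatcher.get_opcodes()`. It groups the differences into `insert`, `delete`, and `replace` blocks. This is a sketch under our own conventions. `diff_counts` and `simple_dissimilarity` are hypothetical helpers. Within an unequal-length `replace` block, we pair words one-to-one and count any surplus words as additions or deletions. That pairing rule is our choice, not a rule from the Lazy Prices paper.

```python
from difflib import SequenceMatcher


def diff_counts(old_tokens, new_tokens):
    """Count word-level additions, deletions and changes (hypothetical helper)."""
    counts = {"added": 0, "deleted": 0, "changed": 0}
    matcher = SequenceMatcher(a=old_tokens, b=new_tokens, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        n_old, n_new = i2 - i1, j2 - j1
        if tag == "insert":
            counts["added"] += n_new
        elif tag == "delete":
            counts["deleted"] += n_old
        elif tag == "replace":
            # Our convention: pair words one-to-one; any surplus is an addition or deletion.
            paired = n_old if n_old < n_new else n_new
            counts["changed"] += paired
            counts["deleted"] += n_old - paired
            counts["added"] += n_new - paired
    return counts


def simple_dissimilarity(old_tokens, new_tokens):
    """Edits over total words, counting each change as one deletion plus one addition."""
    c = diff_counts(old_tokens, new_tokens)
    n_plus = c["added"] + c["changed"]
    n_minus = c["deleted"] + c["changed"]
    total = len(old_tokens) + len(new_tokens)
    return (n_plus + n_minus) / total if total else 0.0


old = "the cat sat on the mat".split()
new = "the cat lay on a mat".split()
print(diff_counts(old, new))  # {'added': 0, 'deleted': 0, 'changed': 2}
print(simple_dissimilarity(old, new))
```

Here "sat" becomes "lay" and "the" becomes "a", so the ratio is (2 + 2) / (6 + 6), or one third.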

First we look at MED at the word level and then consider Simple Similarity.

import os

import nltk

nltk.download('punkt')

nltk.download('punkt_tab')

import difflib # https://docs.python.org/3/library/difflib.html

from nltk.tokenize import word_tokenize

D1 = "Some areas around the world that were devastated by the coronavirus in the spring — and are now tightening rules to head off a second wave — are facing resistance from residents who are exhausted, confused and frustrated."

print(D1, "\n")

D1 = word_tokenize(D1)

D2 = "Some parts of the world devastated by the terrible coronavirus in the winter — have now tightened rules to head off a second wave but are facing resistance from residents who are exhausted, bewildered and angry."

print(D2, "\n")

D2 = word_tokenize(D2)

print("Length D1: ",len(D1[:5]),D1[:5])

print("Length D2: ",len(D2[:5]),D2[:5])

print("MED =",min_edit_distance(D1[:5],D2[:5]))

print("Length D1: ",len(D1),D1)

print("Length D2: ",len(D2),D2)

print("MED =",min_edit_distance(D1,D2))

[nltk_data] Downloading package punkt to /root/nltk_data...

[nltk_data] Unzipping tokenizers/punkt.zip.

[nltk_data] Downloading package punkt_tab to /root/nltk_data...

[nltk_data] Unzipping tokenizers/punkt_tab.zip.

Some areas around the world that were devastated by the coronavirus in the spring — and are now tightening rules to head off a second wave — are facing resistance from residents who are exhausted, confused and frustrated.

Some parts of the world devastated by the terrible coronavirus in the winter — have now tightened rules to head off a second wave but are facing resistance from residents who are exhausted, bewildered and angry.

Length D1: 5 ['Some', 'areas', 'around', 'the', 'world']

Length D2: 5 ['Some', 'parts', 'of', 'the', 'world']

MED = 2

Length D1: 40 ['Some', 'areas', 'around', 'the', 'world', 'that', 'were', 'devastated', 'by', 'the', 'coronavirus', 'in', 'the', 'spring', '—', 'and', 'are', 'now', 'tightening', 'rules', 'to', 'head', 'off', 'a', 'second', 'wave', '—', 'are', 'facing', 'resistance', 'from', 'residents', 'who', 'are', 'exhausted', ',', 'confused', 'and', 'frustrated', '.']

Length D2: 38 ['Some', 'parts', 'of', 'the', 'world', 'devastated', 'by', 'the', 'terrible', 'coronavirus', 'in', 'the', 'winter', '—', 'have', 'now', 'tightened', 'rules', 'to', 'head', 'off', 'a', 'second', 'wave', 'but', 'are', 'facing', 'resistance', 'from', 'residents', 'who', 'are', 'exhausted', ',', 'bewildered', 'and', 'angry', '.']

MED = 37

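# ndiff prefixes tokens only in D1 with '- ', tokens only in D2 with '+ ',
# and shared tokens with '  ' (it may also emit '? ' hint lines, which are not counted)

res = list(difflib.ndiff(D1,D2))

print("DIFFs =",res)

nplus = len([j for j in res if j.startswith('+')])  # additions

nminus = len([j for j in res if j.startswith('-')])  # deletions; a changed word counts once in each

print("SIMPSIM =",nplus,nminus,(nplus+nminus)/(len(D1)+len(D2)))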

DIFFs = [' Some', '- areas', '- around', '+ parts', '+ of', ' the', ' world', '- that', '- were', ' devastated', ' by', ' the', '+ terrible', ' coronavirus', ' in', ' the', '- spring', '+ winter', ' —', '+ have', '- and', '- are', ' now', '- tightening', '+ tightened', ' rules', ' to', ' head', ' off', ' a', ' second', ' wave', '- —', '+ but', ' are', ' facing', ' resistance', ' from', ' residents', ' who', ' are', ' exhausted', ' ,', '- confused', '+ bewildered', ' and', '- frustrated', '+ angry', ' .']

SIMPSIM = 9 11 0.2564102564102564
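Plugging the counts printed above into the ratio computed by the code gives

\[
\text{SimpSim} = \frac{n_{+} + n_{-}}{|D_1| + |D_2|} = \frac{9 + 11}{40 + 38} = \frac{20}{78} \approx 0.2564
\]

where \(n_{+}\) and \(n_{-}\) are the numbers of “+” and “-” entries in the diff and \(|D_i|\) is the number of tokens in document \(i\). A changed word (for example “- spring” and “+ winter”) contributes to both counts. The ratio is therefore 0 for identical documents and 1 for documents with no tokens in common, so it grows with the amount of change. One way to turn it into a similarity score is to use \(1 - \text{SimpSim}\). This is our suggestion and not necessarily the paper's definition.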

21.4. Sentence Similarity via Language Model Representation

We can determine sentence similarity based on raw text using set-based similarity methods, as we will see later in this notebook.

Computing similarity, however, is fundamentally a mathematical operation: it requires text to be quantified as vectors, matrices, or tensors. We saw an example of this in the cosine similarity computation above, which used simple word-count vectors.

There are other ways of transforming sentences into fixed-length vectors on which we can compute cosine similarity. These are known as “embeddings”: we convert the text of a sentence into a numeric vector of dimension \(n\), which can be thought of as an embedding of that sentence into \(n\)-dimensional space.

Two popular approaches are traditional word embeddings, such as word2vec, and embeddings from BERT models. Word2vec creates word embeddings, and there is a corresponding package for sentence embeddings, sent2vec.
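To see how word embeddings can yield a fixed-length sentence vector, here is a minimal sketch of one simple approach: average the vectors of the words in a sentence (mean pooling), then compare sentences with cosine similarity. The three-dimensional `toy_vectors` are made up for illustration; real word2vec vectors typically have a few hundred dimensions. This is not necessarily how sent2vec builds its vectors.

```python
import numpy as np

# Hypothetical 3-dimensional word vectors, invented for illustration only.
toy_vectors = {
    "cats": np.array([1.0, 0.0, 0.0]),
    "dogs": np.array([0.9, 0.1, 0.0]),
    "purr": np.array([0.0, 1.0, 0.0]),
    "bark": np.array([0.0, 0.9, 0.1]),
    "stocks": np.array([0.0, 0.0, 1.0]),
    "fell": np.array([0.1, 0.0, 0.9]),
}


def sentence_vector(tokens, vectors, dim=3):
    """Average the vectors of known tokens; unknown words are skipped."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return np.zeros(dim)  # no known words: fall back to the zero vector
    return np.mean(known, axis=0)


def cosine(u, v):
    """Cosine similarity; 0.0 if either vector is all zeros (our convention)."""
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    return float(u @ v / denom) if denom else 0.0


s1 = sentence_vector("cats purr".split(), toy_vectors)
s2 = sentence_vector("dogs bark".split(), toy_vectors)
s3 = sentence_vector("stocks fell".split(), toy_vectors)
print(s1.shape)  # (3,): the same length whatever the sentence length
print(cosine(s1, s2), cosine(s1, s3))
```

The two animal sentences come out far more similar to each other than to the stock-market sentence. Averaging ignores word order, though: "dogs bite men" and "men bite dogs" get the same vector. Contextual models such as BERT do not have this limitation.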

%%time

!pip install sent2vec

Collecting sent2vec

Downloading sent2vec-0.3.0-py3-none-any.whl.metadata (5.8 kB)

Requirement already satisfied: transformers in /usr/local/lib/python3.12/dist-packages (from sent2vec) (4.56.2)

Requirement already satisfied: torch in /usr/local/lib/python3.12/dist-packages (from sent2vec) (2.8.0+cu126)

Requirement already satisfied: numpy in /usr/local/lib/python3.12/dist-packages (from sent2vec) (2.0.2)

Collecting gensim (from sent2vec)

Downloading gensim-4.3.3-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (8.1 kB)

Requirement already satisfied: spacy in /usr/local/lib/python3.12/dist-packages (from sent2vec) (3.8.7)

Requirement already satisfied: pytest in /usr/local/lib/python3.12/dist-packages (from sent2vec) (8.4.2)

Collecting numpy (from sent2vec)

Downloading numpy-1.26.4-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (61 kB)

Collecting scipy<1.14.0,>=1.7.0 (from gensim->sent2vec)

Downloading scipy-1.13.1-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (60 kB)

Requirement already satisfied: smart-open>=1.8.1 in /usr/local/lib/python3.12/dist-packages (from gensim->sent2vec) (7.3.1)

Requirement already satisfied: iniconfig>=1 in /usr/local/lib/python3.12/dist-packages (from pytest->sent2vec) (2.1.0)

Requirement already satisfied: packaging>=20 in /usr/local/lib/python3.12/dist-packages (from pytest->sent2vec) (25.0)

Requirement already satisfied: pluggy<2,>=1.5 in /usr/local/lib/python3.12/dist-packages (from pytest->sent2vec) (1.6.0)

Requirement already satisfied: pygments>=2.7.2 in /usr/local/lib/python3.12/dist-packages (from pytest->sent2vec) (2.19.2)

Requirement already satisfied: spacy-legacy<3.1.0,>=3.0.11 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (3.0.12)

Requirement already satisfied: spacy-loggers<2.0.0,>=1.0.0 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (1.0.5)

Requirement already satisfied: murmurhash<1.1.0,>=0.28.0 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (1.0.13)

Requirement already satisfied: cymem<2.1.0,>=2.0.2 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (2.0.11)

Requirement already satisfied: preshed<3.1.0,>=3.0.2 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (3.0.10)

Requirement already satisfied: thinc<8.4.0,>=8.3.4 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (8.3.6)

Requirement already satisfied: wasabi<1.2.0,>=0.9.1 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (1.1.3)

Requirement already satisfied: srsly<3.0.0,>=2.4.3 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (2.5.1)

Requirement already satisfied: catalogue<2.1.0,>=2.0.6 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (2.0.10)

Requirement already satisfied: weasel<0.5.0,>=0.1.0 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (0.4.1)

Requirement already satisfied: typer<1.0.0,>=0.3.0 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (0.19.2)

Requirement already satisfied: tqdm<5.0.0,>=4.38.0 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (4.67.1)

Requirement already satisfied: requests<3.0.0,>=2.13.0 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (2.32.4)

Requirement already satisfied: pydantic!=1.8,!=1.8.1,<3.0.0,>=1.7.4 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (2.11.9)

Requirement already satisfied: jinja2 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (3.1.6)

Requirement already satisfied: setuptools in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (75.2.0)

Requirement already satisfied: langcodes<4.0.0,>=3.2.0 in /usr/local/lib/python3.12/dist-packages (from spacy->sent2vec) (3.5.0)

Requirement already satisfied: filelock in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (3.19.1)

Requirement already satisfied: typing-extensions>=4.10.0 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (4.15.0)

Requirement already satisfied: sympy>=1.13.3 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (1.13.3)

Requirement already satisfied: networkx in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (3.5)

Requirement already satisfied: fsspec in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (2025.3.0)

Requirement already satisfied: nvidia-cuda-nvrtc-cu12==12.6.77 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (12.6.77)

Requirement already satisfied: nvidia-cuda-runtime-cu12==12.6.77 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (12.6.77)

Requirement already satisfied: nvidia-cuda-cupti-cu12==12.6.80 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (12.6.80)

Requirement already satisfied: nvidia-cudnn-cu12==9.10.2.21 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (9.10.2.21)

Requirement already satisfied: nvidia-cublas-cu12==12.6.4.1 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (12.6.4.1)

Requirement already satisfied: nvidia-cufft-cu12==11.3.0.4 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (11.3.0.4)

Requirement already satisfied: nvidia-curand-cu12==10.3.7.77 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (10.3.7.77)

Requirement already satisfied: nvidia-cusolver-cu12==11.7.1.2 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (11.7.1.2)

Requirement already satisfied: nvidia-cusparse-cu12==12.5.4.2 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (12.5.4.2)

Requirement already satisfied: nvidia-cusparselt-cu12==0.7.1 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (0.7.1)

Requirement already satisfied: nvidia-nccl-cu12==2.27.3 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (2.27.3)

Requirement already satisfied: nvidia-nvtx-cu12==12.6.77 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (12.6.77)

Requirement already satisfied: nvidia-nvjitlink-cu12==12.6.85 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (12.6.85)

Requirement already satisfied: nvidia-cufile-cu12==1.11.1.6 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (1.11.1.6)

Requirement already satisfied: triton==3.4.0 in /usr/local/lib/python3.12/dist-packages (from torch->sent2vec) (3.4.0)

Requirement already satisfied: huggingface-hub<1.0,>=0.34.0 in /usr/local/lib/python3.12/dist-packages (from transformers->sent2vec) (0.35.3)

Requirement already satisfied: pyyaml>=5.1 in /usr/local/lib/python3.12/dist-packages (from transformers->sent2vec) (6.0.3)

Requirement already satisfied: regex!=2019.12.17 in /usr/local/lib/python3.12/dist-packages (from transformers->sent2vec) (2024.11.6)

Requirement already satisfied: tokenizers<=0.23.0,>=0.22.0 in /usr/local/lib/python3.12/dist-packages (from transformers->sent2vec) (0.22.1)

Requirement already satisfied: safetensors>=0.4.3 in /usr/local/lib/python3.12/dist-packages (from transformers->sent2vec) (0.6.2)

Requirement already satisfied: hf-xet<2.0.0,>=1.1.3 in /usr/local/lib/python3.12/dist-packages (from huggingface-hub<1.0,>=0.34.0->transformers->sent2vec) (1.1.10)

Requirement already satisfied: language-data>=1.2 in /usr/local/lib/python3.12/dist-packages (from langcodes<4.0.0,>=3.2.0->spacy->sent2vec) (1.3.0)

Requirement already satisfied: annotated-types>=0.6.0 in /usr/local/lib/python3.12/dist-packages (from pydantic!=1.8,!=1.8.1,<3.0.0,>=1.7.4->spacy->sent2vec) (0.7.0)

Requirement already satisfied: pydantic-core==2.33.2 in /usr/local/lib/python3.12/dist-packages (from pydantic!=1.8,!=1.8.1,<3.0.0,>=1.7.4->spacy->sent2vec) (2.33.2)

Requirement already satisfied: typing-inspection>=0.4.0 in /usr/local/lib/python3.12/dist-packages (from pydantic!=1.8,!=1.8.1,<3.0.0,>=1.7.4->spacy->sent2vec) (0.4.2)

Requirement already satisfied: charset_normalizer<4,>=2 in /usr/local/lib/python3.12/dist-packages (from requests<3.0.0,>=2.13.0->spacy->sent2vec) (3.4.3)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.12/dist-packages (from requests<3.0.0,>=2.13.0->spacy->sent2vec) (3.10)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.12/dist-packages (from requests<3.0.0,>=2.13.0->spacy->sent2vec) (2.5.0)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.12/dist-packages (from requests<3.0.0,>=2.13.0->spacy->sent2vec) (2025.8.3)

Requirement already satisfied: wrapt in /usr/local/lib/python3.12/dist-packages (from smart-open>=1.8.1->gensim->sent2vec) (1.17.3)

Requirement already satisfied: mpmath<1.4,>=1.1.0 in /usr/local/lib/python3.12/dist-packages (from sympy>=1.13.3->torch->sent2vec) (1.3.0)

Requirement already satisfied: blis<1.4.0,>=1.3.0 in /usr/local/lib/python3.12/dist-packages (from thinc<8.4.0,>=8.3.4->spacy->sent2vec) (1.3.0)

Requirement already satisfied: confection<1.0.0,>=0.0.1 in /usr/local/lib/python3.12/dist-packages (from thinc<8.4.0,>=8.3.4->spacy->sent2vec) (0.1.5)

INFO: pip is looking at multiple versions of thinc to determine which version is compatible with other requirements. This could take a while.

Collecting thinc<8.4.0,>=8.3.4 (from spacy->sent2vec)

Downloading thinc-8.3.4-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (15 kB)

Collecting blis<1.3.0,>=1.2.0 (from thinc<8.4.0,>=8.3.4->spacy->sent2vec)

Downloading blis-1.2.1-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (7.4 kB)

Requirement already satisfied: click>=8.0.0 in /usr/local/lib/python3.12/dist-packages (from typer<1.0.0,>=0.3.0->spacy->sent2vec) (8.3.0)

Requirement already satisfied: shellingham>=1.3.0 in /usr/local/lib/python3.12/dist-packages (from typer<1.0.0,>=0.3.0->spacy->sent2vec) (1.5.4)

Requirement already satisfied: rich>=10.11.0 in /usr/local/lib/python3.12/dist-packages (from typer<1.0.0,>=0.3.0->spacy->sent2vec) (13.9.4)

Requirement already satisfied: cloudpathlib<1.0.0,>=0.7.0 in /usr/local/lib/python3.12/dist-packages (from weasel<0.5.0,>=0.1.0->spacy->sent2vec) (0.22.0)

Requirement already satisfied: MarkupSafe>=2.0 in /usr/local/lib/python3.12/dist-packages (from jinja2->spacy->sent2vec) (3.0.3)

Requirement already satisfied: marisa-trie>=1.1.0 in /usr/local/lib/python3.12/dist-packages (from language-data>=1.2->langcodes<4.0.0,>=3.2.0->spacy->sent2vec) (1.3.1)

Requirement already satisfied: markdown-it-py>=2.2.0 in /usr/local/lib/python3.12/dist-packages (from rich>=10.11.0->typer<1.0.0,>=0.3.0->spacy->sent2vec) (4.0.0)

Requirement already satisfied: mdurl~=0.1 in /usr/local/lib/python3.12/dist-packages (from markdown-it-py>=2.2.0->rich>=10.11.0->typer<1.0.0,>=0.3.0->spacy->sent2vec) (0.1.2)

Downloading sent2vec-0.3.0-py3-none-any.whl (8.1 kB)

Downloading gensim-4.3.3-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (26.6 MB)

Downloading numpy-1.26.4-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (18.0 MB)

Downloading scipy-1.13.1-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (38.2 MB)

Downloading thinc-8.3.4-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (3.7 MB)

Downloading blis-1.2.1-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (11.6 MB)

Installing collected packages: numpy, scipy, blis, gensim, thinc, sent2vec

Attempting uninstall: numpy

Found existing installation: numpy 2.0.2

Uninstalling numpy-2.0.2:

Successfully uninstalled numpy-2.0.2

Attempting uninstall: scipy

Found existing installation: scipy 1.16.2

Uninstalling scipy-1.16.2:

Successfully uninstalled scipy-1.16.2

Attempting uninstall: blis

Found existing installation: blis 1.3.0

Uninstalling blis-1.3.0:

Successfully uninstalled blis-1.3.0

Attempting uninstall: thinc

Found existing installation: thinc 8.3.6

Uninstalling thinc-8.3.6:

Successfully uninstalled thinc-8.3.6

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

opencv-python-headless 4.12.0.88 requires numpy<2.3.0,>=2; python_version >= "3.9", but you have numpy 1.26.4 which is incompatible.

opencv-contrib-python 4.12.0.88 requires numpy<2.3.0,>=2; python_version >= "3.9", but you have numpy 1.26.4 which is incompatible.

opencv-python 4.12.0.88 requires numpy<2.3.0,>=2; python_version >= "3.9", but you have numpy 1.26.4 which is incompatible.

tsfresh 0.21.1 requires scipy>=1.14.0; python_version >= "3.10", but you have scipy 1.13.1 which is incompatible.

Successfully installed blis-1.2.1 gensim-4.3.3 numpy-1.26.4 scipy-1.13.1 sent2vec-0.3.0 thinc-8.3.4

CPU times: user 2.45 s, sys: 977 ms, total: 3.42 s

Wall time: 27.5 s

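%%time

# Note: the install above replaced NumPy 2.0.2 with 1.26.4. A "numpy.dtype size
# changed" error when importing gensim (as in the traceback below) signals a
# binary mismatch between gensim's compiled code and the NumPy loaded in the
# session; restarting the runtime after installing is the usual remedy.

from scipy import spatial # for cosine distance

from sent2vec.vectorizer import Vectorizer # uses DistilBERT

sentences = [

"There are several approaches to learn NLP.",

"BERT is an amazing NLP language model.",

"We can use embedding, encoding, or vectorizing to represent language.",

]

vectorizer = Vectorizer()

vectorizer.run(sentences)  # encode all sentences

vectors_bert = vectorizer.vectors  # one vector per sentence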

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<timed exec> in <module>

/usr/local/lib/python3.12/dist-packages/sent2vec/vectorizer.py in <module>

1 import numpy as np

2 import os

----> 3 import gensim

4 import torch

5 import transformers as ppb

/usr/local/lib/python3.12/dist-packages/gensim/__init__.py in <module>

9 import logging

10

---> 11 from gensim import parsing, corpora, matutils, interfaces, models, similarities, utils # noqa:F401

12

13

/usr/local/lib/python3.12/dist-packages/gensim/corpora/__init__.py in <module>

4

5 # bring corpus classes directly into package namespace, to save some typing

----> 6 from .indexedcorpus import IndexedCorpus # noqa:F401 must appear before the other classes

7

8 from .mmcorpus import MmCorpus # noqa:F401

/usr/local/lib/python3.12/dist-packages/gensim/corpora/indexedcorpus.py in <module>

12 import numpy

13

---> 14 from gensim import interfaces, utils

15

16 logger = logging.getLogger(__name__)

/usr/local/lib/python3.12/dist-packages/gensim/interfaces.py in <module>

17 import logging

18

---> 19 from gensim import utils, matutils

20

21

/usr/local/lib/python3.12/dist-packages/gensim/matutils.py in <module>

1032 try:

1033 # try to load fast, cythonized code if possible

-> 1034 from gensim._matutils import logsumexp, mean_absolute_difference, dirichlet_expectation

1035

1036 except ImportError:

/usr/local/lib/python3.12/dist-packages/gensim/_matutils.pyx in init gensim._matutils()

ValueError: numpy.dtype size changed, may indicate binary incompatibility. Expected 96 from C header, got 88 from PyObject

print(len(vectors_bert))

print([len(v) for v in vectors_bert])

print(vectors_bert[0])

3

[768, 768, 768]

[-2.26823017e-01 -1.47761062e-01 -5.47579350e-03 -3.04903507e-01

-3.22078824e-01 -1.62703261e-01 2.03426387e-02 1.00655407e-01

3.73837091e-02 -5.03204226e-01 -1.22439943e-01 1.63336441e-01

1.29842563e-02 6.99332803e-02 3.26597206e-02 3.87874156e-01

-7.58709095e-04 2.40361437e-01 -1.91940039e-01 -2.15191051e-01

-1.55010313e-01 -5.68637326e-02 1.55610666e-01 -8.65276679e-02

8.88356240e-04 -6.96205720e-02 7.45741278e-02 9.62956548e-02

2.06479847e-01 -6.76913932e-02 -9.84049365e-02 4.25805241e-01

-1.33869514e-01 -2.16157615e-01 1.26786023e-01 6.81602731e-02

3.96859676e-01 1.13230199e-01 4.18778628e-01 3.79229076e-02

-1.06499501e-01 9.64720249e-02 1.49633139e-01 9.64184999e-02

-4.75690663e-02 -1.01827666e-01 -2.63574839e+00 1.65038556e-02

-4.71193939e-01 -4.67932016e-01 -1.86669126e-01 2.94502199e-01

9.48905423e-02 5.40360272e-01 8.56129639e-03 3.07039082e-01

-3.35935831e-01 1.45308673e-01 1.37860700e-01 2.19708443e-01

9.55504403e-02 2.88474023e-01 -2.76178569e-02 1.22285582e-01

-1.54011808e-02 4.51129556e-01 -3.07397217e-01 3.09489220e-01

-6.12712681e-01 3.57676268e-01 -4.09798980e-01 9.58273485e-02

-4.27542105e-02 6.80846125e-02 -4.93309386e-02 -1.35744914e-01

8.74879509e-02 -4.53176647e-02 -8.67362320e-02 4.83227819e-02

-2.39696696e-01 2.68901557e-01 1.44882098e-01 -2.74839494e-02

4.40118283e-01 1.44843102e-01 -3.82807180e-02 1.65082321e-01

1.57738671e-01 6.64534450e-01 1.04841538e-01 1.51146334e-02

1.54527113e-01 1.23651788e-01 3.48817110e-01 -1.46993965e-01

1.78795561e-01 -2.29342327e-01 2.08396047e-01 1.40462806e-02

2.47902088e-02 -2.89021656e-02 2.28638738e-01 -4.83231872e-01

9.73528549e-02 -2.97256082e-01 -2.70515680e-01 -3.18353891e-01

1.86815802e-02 -1.85961711e+00 3.24971452e-02 4.31109756e-01

-1.12260215e-01 -4.44193512e-01 -6.53545931e-02 1.65485218e-01

1.52908880e-02 1.44769832e-01 1.12764919e-02 9.00352746e-02

-2.54508257e-01 5.55104673e-01 9.34276581e-02 -8.16307291e-02

-1.62391484e-01 2.10521832e-01 -2.70959735e-02 -1.32175967e-01

3.04886624e-02 5.38688302e-02 2.32332170e-01 3.40421468e-01

7.09613189e-02 3.05516291e-02 -2.02954888e-01 9.00338888e-02

7.54131228e-02 1.69310719e-01 -1.35370523e-01 -3.94758061e-02

-7.01085031e-01 -7.98658058e-02 -2.02029872e+00 1.42055452e-01

5.93047261e-01 2.78570235e-01 -2.84663469e-01 -1.04280643e-01

-1.78184956e-02 2.62182266e-01 1.08751990e-01 1.69573590e-01

-2.42274344e-01 2.09384114e-01 -2.54915744e-01 1.46993056e-01

-2.84852803e-01 -6.58724532e-02 4.17336375e-02 -3.39802578e-02

-2.99072564e-02 -2.72333652e-01 -2.44185835e-01 -1.45454198e-01

6.80722073e-02 -6.28367215e-02 1.87413648e-01 4.31166917e-01

-9.28756818e-02 7.98323750e-02 -7.21464455e-02 -3.13811183e-01

4.81017411e-01 -3.74601781e-01 7.63321221e-02 -2.36082092e-01

2.44253520e-02 1.86722621e-01 3.05069685e-01 -9.52055957e-03

-5.19484401e-01 5.97312450e-01 9.03005302e-02 -2.70555228e-01

-3.72936837e-02 3.00552528e-02 3.06580573e-01 -2.97621489e-01

2.67895669e-01 1.53339922e-01 -2.45803222e-01 1.38785630e-01

-1.04599997e-01 -3.80814411e-02 4.00410950e-01 2.81501234e-01

-2.53713191e-01 -3.70403826e-01 -2.40856618e-01 8.23369622e-02

-3.41215819e-01 -5.75691909e-02 -2.47280106e-01 2.29414240e-01

-6.45461604e-02 2.77542782e+00 7.87422135e-02 6.51319250e-02

3.58989060e-01 -5.59832491e-02 -1.66168377e-01 -4.70280685e-02

2.30873842e-02 -9.23751891e-02 4.42410290e-01 -1.90682895e-02

8.18718150e-02 -3.38529311e-02 1.95147097e-01 -3.29268932e-01

4.47742134e-01 -8.79669636e-02 -1.23695031e-01 3.34862649e-01

3.18276286e-02 4.75249469e-01 1.01494335e-01 1.13809295e-01

3.55070263e-01 -9.70506310e-01 4.10026401e-01 -1.81388915e-01

1.39484063e-01 1.08907364e-01 -4.16180521e-01 -1.28986806e-01

-1.75764728e-02 -1.66842714e-01 -9.73673686e-02 -2.82542109e-01

-1.34674609e-01 2.98626333e-01 2.20364947e-02 -3.81718623e-03

-8.97697732e-03 -9.32683349e-02 9.84866694e-02 -1.68176562e-01

-3.15172039e-02 -1.59646809e-01 -1.52193168e-02 4.04709242e-02

-3.61425355e-02 -1.31888270e-01 1.67583734e-01 8.35261540e-04

5.98185286e-02 -1.61335263e-02 -9.42047760e-02 -5.22759967e-02

-2.59322912e-01 9.23770890e-02 -1.29276499e-01 -7.61138350e-02

-2.41602033e-01 -3.14744972e-02 1.70970142e-01 -6.24634102e-02

4.74545091e-01 5.71488142e-02 -2.35630255e-02 -4.70198303e-01

-4.78065848e-01 -2.38320994e+00 -3.54137689e-01 -4.42415655e-01

3.41201693e-01 1.85879290e-01 -1.78949878e-01 -1.04123041e-01

6.56973338e-03 2.35111758e-01 -2.28391722e-01 5.97111508e-02

1.18563853e-01 1.37852291e-02 1.22526130e-02 -4.46589947e-01

3.63903701e-01 -2.11648986e-01 -3.83351803e-01 4.42999482e-01

-2.32672885e-01 -2.04120621e-01 2.22281173e-01 -1.05530396e-01

1.07551523e-01 2.92489417e-02 1.13889396e-01 -1.97316438e-01

-5.32778144e-01 -3.67643148e-01 1.02619745e-01 7.28893206e-02

-3.82938504e-01 1.43517435e-01 -1.88074231e-01 -2.54700422e-01

-3.98399901e+00 -3.31057161e-02 -1.75209492e-01 -1.98840961e-01

2.07303286e-01 -1.42577738e-01 4.55047280e-01 -3.05551291e-01

-1.09201908e-01 2.31051948e-02 -2.01247171e-01 -6.35872111e-02

-1.75411120e-01 2.05243900e-01 2.35942021e-01 -8.39132890e-02

4.21901613e-01 -1.20110355e-01 2.83799171e-01 1.86568454e-01

5.46215363e-02 -3.98122594e-02 -1.71857551e-01 2.64151752e-01

-4.07825746e-02 1.82962388e-01 -4.98848975e-01 -9.00024250e-02

-2.47254074e-01 -2.04888105e-01 6.73137307e-02 -2.38001540e-01

9.14094597e-02 -1.27731666e-01 -2.33812213e-01 -1.88372925e-01

1.50251715e-02 1.55119196e-01 2.81799048e-01 -1.37119934e-01

-1.55513790e-02 8.95956039e-01 4.80378680e-02 -2.36534685e-01

3.70134979e-01 -1.08685970e-01 8.78231227e-02 5.76908654e-03

9.70422029e-02 2.22099394e-01 -3.29074785e-02 4.45820726e-02

8.98213804e-01 -1.36731744e-01 1.96854562e-01 -2.10877657e-01

4.15728301e-01 -1.61334887e-01 9.10422653e-02 6.02832474e-02

6.58661366e-01 -1.18021749e-01 2.02928744e-02 -3.84146482e-01

1.21805161e-01 -3.66641164e-01 2.82424539e-02 -4.59806830e-01

8.48373771e-02 1.24456137e-01 -3.41930181e-01 2.62192219e-01

1.23951033e-01 -1.01404166e+00 1.03130788e-02 -1.57883435e-01

-1.36062577e-01 -5.24381548e-02 -1.39156029e-01 2.58363217e-01

-6.03006661e-01 -1.20169461e-01 -1.55057758e-01 2.22922191e-01

-1.90432161e-01 -8.43296871e-02 -2.60037422e-01 9.81554240e-02

-3.54547948e-01 2.12953895e-01 1.69279113e-01 2.76174873e-01

2.70218581e-01 -4.04903889e-02 -7.58495331e-02 1.63042068e-01

3.41014504e-01 -5.94523370e-01 3.76277328e-01 -1.96680382e-01

3.21530104e-01 -4.81156021e-01 -3.89512300e-01 -2.12872382e-02

-3.84428315e-02 -7.48455375e-02 -3.85747045e-01 4.24151510e-01

5.94641268e-01 2.89940089e-01 -2.93446273e-01 7.36966059e-02

-4.81509641e-02 3.17279458e-01 8.83386195e-01 -1.00194663e-01

-2.42691115e-01 2.86531985e-01 1.96036294e-01 1.96277797e-01

1.39873743e-01 2.53659904e-01 -1.00874960e-01 7.90259391e-02

-1.52343124e-01 1.35166362e-01 2.70832866e-01 1.70779619e-02

-5.24571776e-01 -1.35290742e-01 1.90432757e-01 -6.25958815e-02

-3.78610879e-01 -4.04048771e-01 1.78893097e-02 4.40552691e-03

-3.03421140e-01 3.85396272e-01 2.09550917e-01 -1.32109776e-01

5.72810590e-01 1.69442609e-01 -1.57633021e-01 3.68085146e-01

-1.61640674e-01 3.53503823e-01 9.26730409e-02 -1.75020695e-02

-1.45956250e-02 4.52185005e-01 3.42324466e-01 -2.39099920e-01

1.47445083e-01 -2.87156314e-01 3.38443249e-01 5.95076531e-02

-1.68009490e-01 -1.34095088e-01 -2.19338417e-01 -2.03131884e-02

2.33578831e-01 1.15399845e-01 -1.22840607e+00 8.61015916e-02

-8.51946697e-02 -4.09756005e-01 8.54763761e-02 2.47371584e-01

8.46841186e-03 1.68238088e-01 2.28288144e-01 -6.96231350e-02

-2.22524643e-01 -1.44618571e-01 2.54606962e-01 9.70552042e-02

1.80657357e-01 6.86261430e-02 -3.89966398e-01 -2.39925519e-01

1.33364081e-01 -1.57093287e-01 2.40891024e-01 3.66401404e-01

9.45899263e-02 -3.02364472e-02 4.55497764e-02 -1.39668226e-01

-3.04720253e-01 1.66179419e-01 8.82003605e-02 -5.61152063e-02

5.43554388e-02 -4.90639240e-01 -4.13035572e-01 -1.38074696e-01

1.56396136e-01 4.64149594e-01 1.70700416e-01 -9.03190747e-02

2.79066652e-01 4.67687309e-01 -2.94482261e-01 1.26683652e-01

2.23465696e-01 -1.84697304e-02 2.21003503e-01 1.84812963e-01

-1.52590930e-01 -2.38419652e-01 -1.07181305e-02 -3.53877753e-01

1.08581953e-01 -3.72363478e-01 -9.94319990e-02 -2.40276661e-02

9.63701531e-02 4.83764708e-02 1.90859772e-02 -2.72734761e-01

4.81234975e-02 -2.59204149e-01 2.47689441e-01 -2.68151194e-01

-9.17727500e-02 7.36614829e-03 -2.74078012e-01 -7.68317401e-01

2.27967110e-02 5.07310927e-02 -9.68993902e-02 8.88455212e-02

1.62850842e-01 -1.55871719e-01 -3.30328673e-01 -5.76968566e-02

-2.97000498e-01 1.97899029e-01 1.06270269e-01 4.95866574e-02

1.24977656e-01 -1.96507685e-02 -2.91426089e-02 -1.37163237e-01

-5.36235869e-02 1.82125837e-01 1.35074705e-01 7.21001402e-02

-1.22615770e-01 -5.00245988e-02 2.78049350e-01 -8.52020681e-02

-2.09889635e-01 -1.62394375e-01 6.63297251e-02 4.49191839e-01

2.85629958e-01 -2.08596587e-02 1.98431149e-01 8.64213035e-02

4.15109098e-02 -5.96219264e-02 -7.87838176e-02 4.27091330e-01

-1.32452641e-02 8.53687003e-02 -1.59388527e-01 9.41388682e-03

2.81176537e-01 -6.67755678e-02 -2.46442690e-01 -2.62191802e-01

8.32653642e-02 1.94023654e-01 1.12548389e-01 -9.87180844e-02

1.76222637e-01 9.66103226e-02 -1.36115640e-01 -3.46074551e-01

1.38782227e+00 2.03133702e-01 2.40363255e-01 3.85643728e-02

4.76367623e-02 1.16032772e-01 4.41755466e-02 -5.64423986e-02

5.75231798e-02 1.63326502e-01 -3.78666461e-01 9.04994830e-02

-1.62141621e-01 1.56018093e-01 -3.47767025e-02 2.13334203e-01

-1.52753927e-02 1.81620777e-01 -6.32040858e-01 -6.77393079e-02

-1.94472238e-01 1.60786077e-01 2.00159848e-01 -1.73232593e-02

2.36870900e-01 1.55469730e-01 2.21356407e-01 -1.27177775e-01

-5.76464534e-02 1.46301091e-01 -6.02893531e-02 3.16140771e-01

2.25121856e-01 3.15511882e-01 9.25692618e-02 -4.37557660e-02

-6.53960332e-02 -3.37835431e-01 2.14453533e-01 1.41064122e-01

1.13495298e-01 -2.54960090e-01 5.42946219e-01 -9.20450613e-02

-2.47639120e-01 4.24886972e-01 9.30148065e-02 -4.39312398e-01

1.16518833e-01 3.63954604e-01 2.37775847e-01 1.66261449e-01

2.30428968e-02 1.06118873e-01 -1.82369158e-01 7.47296885e-02

2.28815824e-01 -1.59212843e-01 1.85413333e-03 2.63744116e-01

1.10013232e-01 2.89614946e-01 1.13922089e-01 -7.32004941e-02

5.40841892e-02 9.53734666e-02 -2.02629372e-01 1.84097737e-01

-2.07332745e-01 9.76036936e-02 3.10970515e-01 2.56136566e-01

2.83115298e-01 5.35130024e-01 1.77970886e-01 1.26921013e-01

3.67209375e-01 3.10058407e-02 -6.08112514e-02 -1.49223578e+00

1.25000387e-01 4.66605812e-01 4.19445097e-01 -2.30416767e-02

8.34298730e-02 1.13320455e-01 9.34180617e-02 -1.10260911e-01

-2.30419174e-01 3.09943646e-01 2.58616835e-01 5.59240699e-01

1.24192327e-01 1.86672479e-01 -7.64031485e-02 8.33642781e-02

-1.91049017e-02 7.19259754e-02 -3.68612826e-01 -4.45300072e-01

4.76997159e-02 2.17042938e-01 -1.94208205e-01 -6.85525417e-01

1.70823276e-01 1.05218336e-01 -3.09406579e-01 1.89641789e-01

1.08483568e-01 1.58739224e-01 1.90208137e-01 -1.48205450e-02

2.32073575e-01 -1.26893371e-02 -2.12258428e-01 2.05483109e-01

-2.30197251e-01 1.14911929e-01 2.72436351e-01 -2.92711318e-01

3.47318023e-01 1.91550016e-01 1.88708723e-01 -1.58242002e-01

-2.16264933e-01 4.39530641e-01 -2.06607491e-01 5.17994702e-01

-1.87912479e-01 -2.55198538e-01 5.25640607e-01 1.04928881e-01

7.48544261e-02 7.62657821e-02 -1.23278424e-01 -1.04919553e-01

1.06906749e-01 -1.96471512e-01 -1.48452818e-01 -2.74912924e-01

-9.62016657e-02 -5.34620769e-02 6.07956313e-02 2.66585231e-01

-8.13766420e-02 -3.81158255e-02 2.12972328e-01 -1.70428425e-01

-2.70167202e-01 3.96722518e-02 7.84713998e-02 8.11150447e-02

-7.18506426e-02 2.20162809e-01 -4.09821011e-02 2.03185603e-02

4.95767385e-01 3.72703299e-02 2.63583720e-01 1.74978692e-02

-7.91534316e-03 2.05668792e-01 -2.02743381e-01 5.45394659e-01

-5.06533623e+00 -1.16844989e-01 1.18520401e-01 -2.51036078e-01

-2.78635412e-01 -1.83215559e-01 -1.54419214e-01 -5.08450866e-01

-5.26199266e-02 -1.69289876e-02 1.18149951e-01 -3.47214900e-02

-6.04555756e-02 9.65920687e-02 3.54044825e-01 4.99681145e-01]
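The next cell turns SciPy's `spatial.distance.cosine` into a similarity by subtracting it from 1. That works because SciPy returns the cosine *distance*, not the similarity. The sketch below checks this convention on the vectors `A` and `B` from the cosine-similarity example at the start of the chapter. It assumes NumPy and SciPy are installed and compares SciPy's result with the explicit formula \(A \cdot B / (||A||\,||B||)\).

```python
import numpy as np
from scipy.spatial import distance

# The two count vectors from the cosine-similarity example earlier in this chapter
A = np.array([0, 3, 4, 1, 7, 0, 1])
B = np.array([0, 4, 3, 0, 6, 1, 1])

# SciPy returns the cosine *distance*, i.e. 1 - cosine similarity
cos_dist = distance.cosine(A, B)
sim_scipy = 1 - cos_dist

# The same quantity computed from the definition A.B / (||A|| ||B||)
sim_manual = A.dot(B) / (np.linalg.norm(A) * np.linalg.norm(B))

print(sim_scipy, sim_manual)  # both ≈ 0.9683
```

Both values agree with the 0.9682727993019339 printed earlier by the hand-written formula and by scikit-learn.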

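from scipy import spatial

print(sentences)

# spatial.distance.cosine returns a distance, so similarity = 1 - distance

sim_01 = 1 - spatial.distance.cosine(vectors_bert[0], vectors_bert[1]) # sentence 1 vs. sentence 2

print(sim_01)

sim_02 = 1 - spatial.distance.cosine(vectors_bert[0], vectors_bert[2]) # sentence 1 vs. sentence 3

print(sim_02)

sim_12 = 1 - spatial.distance.cosine(vectors_bert[1], vectors_bert[2]) # sentence 2 vs. sentence 3

print(sim_12)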

['There are several approaches to learn NLP.', 'BERT is an amazing NLP language model.', 'We can use embedding, encoding, or vectorizing to represent language.']

0.9422925349756553

0.8620276274855502

0.8503696792267201
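Computing similarities one pair at a time gets tedious beyond a handful of sentences. A common alternative is to scale every vector to unit length and take a single matrix product, which gives all pairwise cosine similarities at once. The sketch below assumes this approach. The random vectors are hypothetical stand-ins for `vectors_bert`, and `cosine_similarity_matrix` is a helper name introduced here for illustration only.

```python
import numpy as np

def cosine_similarity_matrix(vectors):
    """Pairwise cosine similarities between the rows of `vectors`."""
    X = np.asarray(vectors, dtype=float)
    # Scale each row to unit L2 norm; dot products of unit vectors are cosines
    X_unit = X / np.linalg.norm(X, axis=1, keepdims=True)
    return X_unit @ X_unit.T

# Hypothetical stand-ins for vectors_bert: 3 "sentences", 8 dimensions each
rng = np.random.default_rng(0)
vectors = rng.normal(size=(3, 8))

S = cosine_similarity_matrix(vectors)
print(np.round(S, 4))  # symmetric, with 1.0 on the diagonal

# Most similar pair of distinct sentences: hide the diagonal, then take the argmax
masked = np.where(np.eye(len(S), dtype=bool), -np.inf, S)
i, j = np.unravel_index(np.argmax(masked), S.shape)
print(f"most similar pair: sentences {i} and {j}, cosine = {S[i, j]:.4f}")
```

If the real `vectors_bert` replaces the random stand-ins, the off-diagonal entries should match the three values printed above. The argmax should then pick sentences 0 and 1 (0.9423). `sklearn.metrics.pairwise.cosine_similarity`, used earlier in the chapter, returns the same kind of matrix.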

To summarize, here is a graphic that depicts various distance measures.