1. Open Source AI

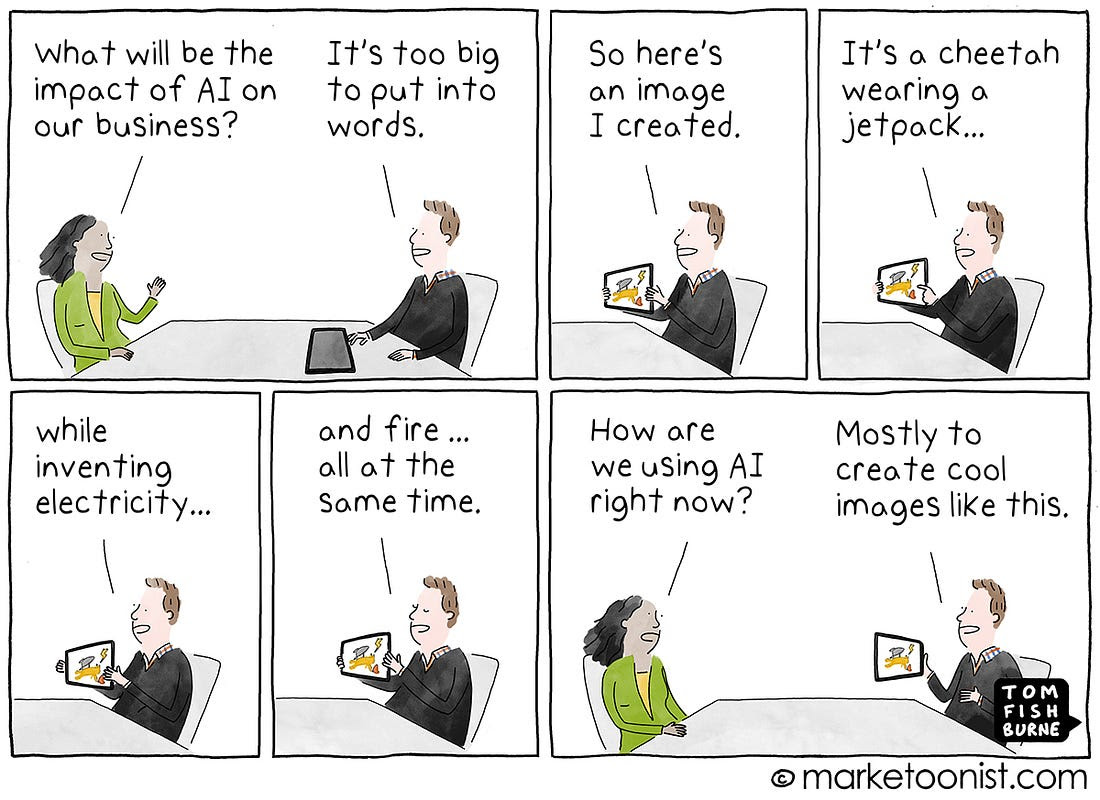

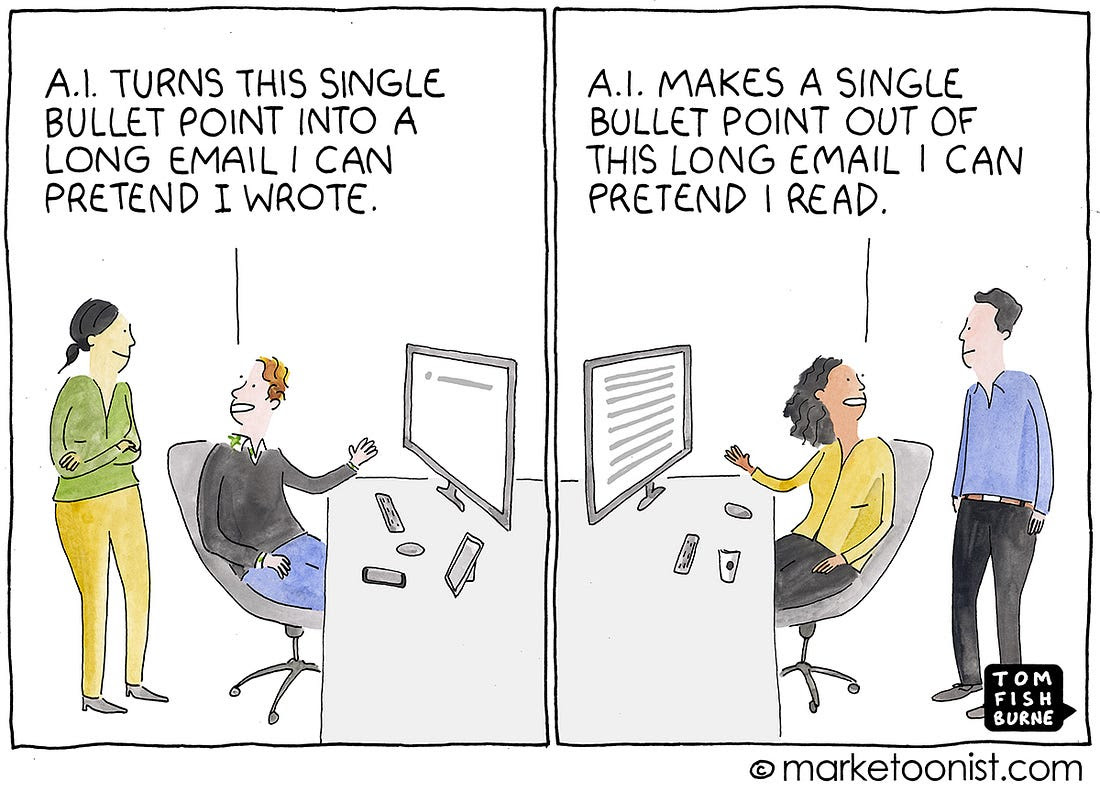

AI is a misnomer, as intelligence is difficult to define, even though many IQ tests claim to quantify it. Generative AI (GenAI) sidesteps this issue by taking machine intelligence as a given, and the debate has instead been reframed around AGI (artificial general intelligence).

The computer scientist François Chollet (creator of Keras, among other useful software) makes a useful distinction between skill and intelligence: skill is how well a task is performed, whereas intelligence is how quickly one learns a new task. By this definition, GenAI is skilled but not intelligent, as training the latest models requires many lifetimes' worth of data processing. Nevertheless, the technology is interesting, and in these lectures the hope is that we learn about the amazing human ideas behind these machine skills by delving into the mathematical and computer science theories underpinning AI.

```python
from google.colab import drive
drive.mount('/content/drive')  # Add My Drive/<>

import os
os.chdir('drive/My Drive')
os.chdir('Books_Writings/NLPBook/')
```

Mounted at /content/drive

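```python
%%capture
# %pylab is deprecated; enable inline plots and import the libraries explicitly instead
%matplotlib inline
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd
import os
from IPython.display import Image
```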

AI is everywhere, but we need to keep asking whether it is useful and how we can improve it. Hopefully, learning about it will help!

1.1. GenAI

Generative AI is in a phase of rapid growth, with concurrent industrialization and democratization. Whereas traditional ML was industrialized first and democratized later, ChatGPT reversed this order for GenAI.

We are now seeing two more broad developments:

Compound AI systems. Machine learning (ML) models started out as classifiers in standard ML, grew larger with the advent of deep neural networks, and eventually became large language models (LLMs). In the newest phase, a model is no longer a single monolithic neural network artifact but a collection of components, often multiple LLMs, tools, and agents chained together. These are called compound AI systems.
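To make the idea concrete, here is a toy compound system in Python: a keyword retriever (standing in for a tool), a generator, and a verifier chained together. Everything here is a hypothetical placeholder; in a real compound system, `fake_llm` and `fake_checker` would each be a call to a model (possibly different LLMs), and the retriever might be a vector search.

```python
import re
from typing import Callable, List, Set

DOCS = [
    "Project Jupyter builds tools for interactive computing.",
    "Ollama runs open models locally on a laptop.",
    "PyTorch is an open source deep learning framework.",
]


def tokens(text: str) -> Set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Component 1 (a tool): toy retriever ranking docs by words shared with the query."""
    q = tokens(query)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]


def fake_llm(prompt: str) -> str:
    """Component 2: hypothetical LLM stand-in that just echoes the first context line."""
    return prompt.splitlines()[1]


def fake_checker(question: str, answer: str) -> bool:
    """Component 3: hypothetical verifier; accepts answers sharing a word longer than 3 letters with the question."""
    content_words = {w for w in tokens(question) if len(w) > 3}
    return bool(content_words & tokens(answer))


def compound_answer(question: str, docs: List[str],
                    llm: Callable[[str], str] = fake_llm,
                    checker: Callable[[str, str], bool] = fake_checker) -> str:
    context = retrieve(question, docs)
    prompt = "Context:\n" + "\n".join(context) + f"\nQuestion: {question}"
    draft = llm(prompt)
    return draft if checker(question, draft) else "I don't know."


print(compound_answer("What does Ollama run?", DOCS))
print(compound_answer("What is the capital of France?", DOCS))
```

Because each component is just a function passed into `compound_answer`, any one of them can be swapped for a real model or tool without changing the others, which is the main appeal of the compound design.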

Closed versus Open Source AI. Huge models like ChatGPT, Claude, and Gemini usually come from well-funded large tech companies, which have the massive resources needed to train these models and the sophisticated infrastructure needed to deploy them for inference.

These closed models do not disclose their training procedures, data, or model weights. They are accessed mostly through APIs with per-token pricing.
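Per-token pricing makes cost a simple function of traffic. The sketch below assumes separate per-million-token prices for input (prompt) and output (generated) tokens, a common pricing structure; the prices and request sizes are hypothetical placeholders, not any provider's actual rates.

```python
def api_cost(input_tokens: int, output_tokens: int,
             usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Cost in USD of one request, given prices per million input/output tokens."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


# Hypothetical prices and traffic, for illustration only
per_request = api_cost(2_000, 500, usd_per_m_input=3.00, usd_per_m_output=15.00)
print(f"per request: ${per_request:.4f}")                          # per request: $0.0135
print(f"per day (10,000 requests): ${per_request * 10_000:,.2f}")  # per day (10,000 requests): $135.00
```

Since output tokens are often priced higher than input tokens, long generations tend to dominate the bill; the same arithmetic can be used to compare a hosted API against the per-token cost of serving an open model yourself.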

Open models disclose their weights but typically not their training procedures or training data, let alone the sources of that data. Still, open models such as Meta’s Llama are very popular because they can be fine-tuned, analyzed, and modified. Open models also tend to be smaller and require less compute while being nearly as accurate as, and in many cases faster than, large closed models.

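Open source models that disclose everything (weights, code, and training data) are few and far between. BLOOM is one example. The Mistral models, by contrast, are released with open weights (several under the permissive Apache 2.0 license) but without their training data, so they belong with the open-weight models above.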

The trade-offs between these approaches involve criteria such as cost, safety and misuse, and transparency. Nor is this taxonomy universally accepted by either the open source or the closed source community, as reflected in this thought piece.

According to Mark Zuckerberg in this essay, open source AI is the path forward: https://about.fb.com/news/2024/07/open-source-ai-is-the-path-forward/ (PDF). I asked an AI to summarize the article; the summary is shown below. Read the article itself; it is much better! Really.

He argues for the importance of open source AI in fostering innovation, security, and accessibility in technology.

He draws parallels between the development of operating systems, particularly Linux, and the current landscape of AI, suggesting that open source models, like Llama, are becoming competitive with closed models.

He emphasizes that open source AI can democratize access to technology, allowing startups, universities, and smaller entities to benefit from advancements, while also enhancing safety through transparency and scrutiny.

The article advocates for a collaborative approach among tech companies and governments to support open source AI as a means to drive economic growth and ensure a secure future, countering the threat posed by more resource-rich states.

Overall, it positions open source AI as a critical factor for maximizing the benefits of AI for society as a whole.

The benefits of open source AI for developers include:

Cost Efficiency: Developers can run inference on open source models like Llama 3.x at roughly 50% of the cost of using closed models.

Transparency: Open source models are more transparent, which allows developers to understand how the models work and make necessary modifications.

Data Protection: Developers can run models on their own infrastructure, which helps protect sensitive data that cannot be sent to closed models over cloud APIs.

Flexibility and Freedom: Open source enables developers to avoid being locked into a single cloud provider or model, allowing for easy movement between compatible toolchains.

Access to Leading Technology: Open source AI provides developers with access to advanced models and tools that can help them build better and more innovative applications.

Community Support: Open source projects often have a community of developers contributing to and improving the technology, providing additional resources and support.

Innovation: Open source AI promotes faster innovation and development, as many developers can collaborate and build on each other’s work.

Fine-tuning and Distillation: Developers can fine-tune and distill open source models to build better variants.

Overall, open source AI fosters an environment that encourages development, innovation, and the democratization of technology.

The benefits of open source AI for society as a whole include:

Increased Access: Open source AI ensures that more people around the world have access to AI technology, preventing power from being concentrated in a small number of companies.

Transparency and Safety: Open source systems are more transparent and can be widely scrutinized, which makes them potentially safer compared to closed models. This transparency allows for rigorous testing and assessment of AI systems, reducing the risk of unintentional harm.

Encouragement of Innovation: A decentralized and open innovation ecosystem fosters the development of startups, universities, and small businesses, providing them with opportunities to leverage advanced AI technologies.

Economic Growth: Open source AI has the potential to accelerate economic growth by increasing human productivity, creativity, and quality of life, as well as unlocking progress in fields like medical and scientific research.

Robust Defense Against Malicious Use: Widespread deployment of open source AI can help larger institutions and governments check the power of smaller bad actors who may use AI for intentional harm.

Flexibility and Control: Organizations can run models wherever they want, addressing concerns about data security and trust that come with closed models, as well as avoiding vendor lock-in.

Cost Efficiency: Open source AI models can be more affordable to run compared to closed models, making advanced technology accessible to a broader range of users and organizations.

Overall, open source AI represents a collaborative approach to harnessing technology for the greater good, promoting economic opportunity and security for everyone.

1.2. What is the Open Source ecosystem?

Definition: source code made freely available for anyone to use, study, modify, and redistribute, including in modified form (Stallman’s Four Freedoms). Anyone can inspect, modify, and enhance open source (OS) code.

Examples include PyTorch, TensorFlow, JAX, scikit-learn, XGBoost, AutoGluon, Jupyter, Ray, Spark, NumPy, pandas, Matplotlib, Altair, LangChain, and many others.

1.3. Tenets

Openness: publicly accessible

Transparency: promotes open collaboration

Collaboration: peer review and peer production

In active projects with many participants, this results in rapid, high-quality software evolution.

Self-interest plays a bigger role than altruism! Contrary to popular belief, contributors to open source projects are mostly interested in adding features they themselves want to use. This is a good thing: it brings in contributors who are also users, so the roadmaps of successful open source projects naturally work backwards from their customers.

1.4. Open Source Process

Example: Jupyter AI

Maintainers: a small group of gatekeepers who decide what code is accepted into the code base

Community: maintainers plus users who submit issues and pull requests.

Issues: requests to repair bugs, add new features, user interface suggestions, etc.

Pull requests: code submissions by the community that go through code review, modification, and then are merged into the code base.

Code Review: maintainers and others in the community review the code and assess it for approval, while suggesting improvements.

Merge: Once the code review is approved, one of the maintainers will merge the code.

Release: New versions of the code base are released from time to time at three levels: major, minor, and patch (e.g., in 2.16.0, the three numbers give the major, minor, and patch versions, respectively). A release candidate (RC) is a pre-release, similar to a beta, published for testing before the final release.
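The release scheme is easy to make concrete. Below is a minimal sketch that parses, orders, and bumps such versions. It assumes the plain `MAJOR.MINOR.PATCH` form plus an optional PEP 440-style `rcN` suffix, the convention Python packages use (the Semantic Versioning spec writes the same thing as `2.16.0-rc.1`). The `Version` class is a hypothetical helper; real projects would usually rely on `packaging.version.Version` instead.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple

# MAJOR.MINOR.PATCH with an optional PEP 440-style release-candidate suffix, e.g. "2.16.0rc1"
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)(?:rc(\d+))?$")


@dataclass(frozen=True)
class Version:
    major: int
    minor: int
    patch: int
    rc: Optional[int] = None  # None means a final (non-RC) release

    @classmethod
    def parse(cls, text: str) -> "Version":
        match = _VERSION_RE.match(text.strip())
        if match is None:
            raise ValueError(f"not a MAJOR.MINOR.PATCH[rcN] version: {text!r}")
        major, minor, patch, rc = match.groups()
        return cls(int(major), int(minor), int(patch), int(rc) if rc else None)

    def sort_key(self) -> Tuple[int, int, int, int, int]:
        # An RC sorts before the final release with the same number: 2.16.0rc1 < 2.16.0
        is_final = 1 if self.rc is None else 0
        return (self.major, self.minor, self.patch, is_final, self.rc or 0)

    def bump(self, level: str) -> "Version":
        # A major bump resets minor and patch; a minor bump resets patch
        if level == "major":
            return Version(self.major + 1, 0, 0)
        if level == "minor":
            return Version(self.major, self.minor + 1, 0)
        if level == "patch":
            return Version(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown release level: {level!r}")

    def __str__(self) -> str:
        base = f"{self.major}.{self.minor}.{self.patch}"
        return base if self.rc is None else f"{base}rc{self.rc}"


releases = ["2.16.0", "2.15.2", "2.16.0rc1", "3.0.0rc2"]
ordered = sorted((Version.parse(r) for r in releases), key=Version.sort_key)
print([str(v) for v in ordered])               # ['2.15.2', '2.16.0rc1', '2.16.0', '3.0.0rc2']
print(Version.parse("2.16.0").bump("minor"))  # 2.17.0
```

Note that plain string sorting would get this wrong (for example, "2.16.0" sorts before "2.16.0rc1" as text), which is why versions are compared field by field.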

1.5. How is Quality Control done?

“Many eyes promote high quality” (Massively Diverse Human Scrutiny)

Feature review by a wide community

Code reviews set a high bar

Automated testing: unit tests, integration tests

Coding standards and style guidelines

Gated commits

CI/CD (continuous integration and continuous delivery/deployment)
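To make “automated testing” concrete, here is a minimal unit test using Python’s built-in `unittest` module. The `slugify` function is a hypothetical piece of project code invented for illustration; in a real project, a CI service would typically run tests like these on every pull request before a maintainer merges it.

```python
import unittest


def slugify(title: str) -> str:
    """Hypothetical project function under test: turn a title into a URL slug."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in title.lower())
    return "-".join(cleaned.split())


class TestSlugify(unittest.TestCase):
    def test_spaces_become_hyphens(self):
        self.assertEqual(slugify("Open Source AI"), "open-source-ai")

    def test_punctuation_is_dropped(self):
        self.assertEqual(slugify("What is Project Jupyter?"), "what-is-project-jupyter")

    def test_empty_title(self):
        self.assertEqual(slugify(""), "")


# Run the suite explicitly so this works both as a script and in a notebook cell
suite = unittest.TestLoader().loadTestsFromTestCase(TestSlugify)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

With pytest, the same checks could be written as plain functions using `assert`; either way, a failing test blocks the merge until the contributor fixes it.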

1.6. Issues with Open Source Software

Fragmented licensing: multiple licenses within the same project complicate compliance for production systems.

Much OSS is deployed in the cloud, which runs closed software, and this limits access and usage.

The need to keep internal data private limits the use of cloud-based open source with external providers.

There is a shortage of people with the technical skills to integrate OSS into cloud systems.

Documentation is sometimes poor, and the software can have poor UX or be hard to use.

OSS maintainer teams are small, which slows the velocity of innovation.
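One practical response to fragmented licensing is to inventory the licenses declared by the packages in an environment. The sketch below uses only the standard library’s `importlib.metadata`; it reports *declared* metadata (the PEP 639 `License-Expression` field, `License ::` trove classifiers, or the free-text `License` field), which can be missing or imprecise, so it is a starting point for a compliance review rather than a substitute for one. The helper names are hypothetical.

```python
from collections import Counter
from importlib.metadata import distributions
from typing import Dict


def declared_license(meta) -> str:
    """Best-effort license label from a package's metadata."""
    # Newer packages may declare an SPDX expression (PEP 639)
    expression = str(meta.get("License-Expression") or "").strip()
    if expression:
        return expression
    # Otherwise prefer trove classifiers such as "License :: OSI Approved :: MIT License"
    classifiers = [c for c in (meta.get_all("Classifier") or []) if c.startswith("License ::")]
    if classifiers:
        return classifiers[0].split(" :: ")[-1]
    # Fall back to the free-text field; some packages paste the whole license text here
    text = str(meta.get("License") or "").strip()
    return text.splitlines()[0] if text else "UNKNOWN"


def license_inventory() -> Dict[str, str]:
    """Map each installed distribution's name to its declared license."""
    inventory = {}
    for dist in distributions():
        meta = dist.metadata
        if meta is None or not meta.get("Name"):
            continue  # skip broken or nameless installs
        inventory[meta["Name"]] = declared_license(meta)
    return inventory


counts = Counter(license_inventory().values())
for label, n in counts.most_common(10):
    print(f"{n:4d}  {label}")
```

Even a small environment usually shows several distinct licenses side by side, which is exactly the compliance burden described above.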

1.7. BDFL: Benevolent Dictator for Life

Many OS projects have a founder who runs the project autocratically, overseeing all contributions; this person is known as the BDFL. Linus Torvalds, the creator of Linux, is a good example. Such projects may eventually migrate to a different organizational structure, such as a committee of core maintainers or a foundation-led model.

A good BDFL usually adheres to the following tenets:

Be open and friendly

Ship rock-solid products

Be a leader in Open Source

Maintain a security first focus

Provide top-notch documentation

Remain independent

Respond promptly

Keep up with the world

Stay on the bleeding edge

Respect feedback


1.8. What is different about working in open source?

Open source software development (OSSD) is radically different from traditional software engineering (TSE). The community owns the code: “Once the code has been committed, it is no longer ‘your code’, it is ‘our code’, and we are all committed to making that code as good as possible. It’s one of the strengths of open source.”

Jason Weill gave a talk at WebDevCon 2023 in which he made the following observations comparing OSSD with TSE:

OSSD takes a long-term view, and effectiveness depends more on earning the community’s trust than it does in TSE

Many stakeholders, maybe a BDFL (OSSD) vs one entity, one hierarchy (TSE)

Consensus, voting, escalation (OSSD) vs single-threaded ownership (TSE)

Users and contributors (OSSD) vs customers (TSE)

Bottom-up: build, pull request, consensus (OSSD) vs top-down: business case, design doc, reviews (TSE)

Communicate publicly (OSSD) vs privately (TSE)

Freely available tooling (OSSD) vs custom tooling (TSE)

Shared source and package repositories (OSSD) vs proprietary code (TSE)

Less hacking! Everyone can see it (OSSD) vs hack and fix later (TSE)

No single operator responsible for bugs (OSSD) vs on-call rotations, pages, etc. (TSE)

1.9. A Brief History of OSS

“Free software is a matter of liberty, not price. To understand the concept, you should think of ‘free’ as in ‘free speech,’ not as in ‘free beer’.” – Richard Stallman, founder of the Free Software Foundation

Before software was licensed, source code was often distributed so that users could compile it on their own hardware and modify it if needed.

Timeline

1953: Remington Rand’s UNIVAC was released with software called A-2 (Arithmetic Language v2), distributed with its source code. IBM released code for their operating system 74.

1969: ARPANET makes it easy to distribute OSS. The same year, facing a US antitrust suit, IBM unbundles its hardware and software, which promotes OSS.

1973: Unix v4 source code (written in C) released by Dennis Ritchie at Bell Labs. Also SPICE was released.

1983: The GNU project (“GNU’s Not Unix”) is announced because AT&T had started charging for Unix. GNU releases the Emacs editor.

1985: Free Software Foundation (FSF) created to host the funding for GNU.

1986: MIT License for release of the X Window system.

1989: The GNU General Public License (GPL) is created, using copyright law to protect software users’ freedoms.

1991: Linux kernel released as OSS. Other notable developments of the era: BSD, FreeBSD, OpenBSD, and, last but not least, the older TeX (1978).

In the 1990s, the term “free” is increasingly replaced by “open source”; together, they give rise to FOSS (free and open source software).

1991: Python interpreter released to open source.

1993: The Debian project starts; the Debian Free Software Guidelines follow in 1997.

1994: Linux 1.0 is released as open source and monetized through add-on services.

1995: Apache HTTP Server

1996: Kool Desktop Environment (KDE); its non-profit foundation follows in 1997

1997: GNU Network Object Model Environment (GNOME); its non-profit foundation follows in 2000

1997: The Cathedral and the Bazaar is published by Eric Raymond – “Given enough eyeballs, all bugs are shallow.”

1998: Netscape Navigator source code released. This is when the term “open source” is coined in Los Altos by the Foresight Institute.

1998: Eric Raymond and Bruce Perens found the Open Source Initiative (OSI).

1999: Apache Software Foundation.

2000: Open Source Development Labs (OSDL) is established; in 2007 it merges with the Free Standards Group to form the Linux Foundation.

2000: OpenOffice code released.

2001: IPython released.

2003: Mozilla Foundation.

Git (2005) and GitHub (2008) operationalize the management of OSS.

Android OSS released in 2008.

2008: GitHub has 33K repositories; by 2018 it has >100 million repos! In 2021, there were >413 million contributions.

2008: The Bitcoin white paper introduces the blockchain (the open source Bitcoin client follows in 2009); Chromium code is released.

2009: Node.js

2014: The IPython Notebook transitions to Project Jupyter.

2016: PyTorch released to open source.

2022: The PyTorch Foundation is formed as part of the Linux Foundation. Today, 98% of enterprises use OSS.

References

History of open source software: https://www.btw.so/blog/history-of-open-source-software/

Gitcoin blog: https://www.gitcoin.co/blog/a-brief-history-of-open-source

A Brief History of Free, Open Source Software and Its Communities, Jesus M. Gonzalez-Barahona, Computer, 2021, https://dl.acm.org/doi/10.1109/MC.2020.3041887

FreeCodeCamp: https://www.freecodecamp.org/news/brief-history-of-open-source/

How I coined the term open source: https://opensource.com/article/18/2/coining-term-open-source-software

State of Open Source Report: https://www.openlogic.com/resources/state-of-open-source-report; Data: openlogic/2024-State-of-Open-Source-survey-dataset

Video on the PyTorch revolution: https://www.youtube.com/watch?v=EjgTv6aSeqk

1.10. What is Project Jupyter?

Jupyter is a foundational tool for AI/ML and is used by millions of users worldwide.

Jupyter is a multi-stakeholder open source project that builds software and standards for interactive computing in data science, scientific computing, and machine learning.

The Jupyter notebook enables users to create and share documents – Jupyter notebooks – that contain code, narrative text, equations, and visualizations.
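Under the hood, a notebook file (`.ipynb`) is a JSON document in the nbformat schema: a list of cells plus metadata. The minimal sketch below builds one by hand with the standard library; `make_notebook` is a hypothetical helper, and in practice the `nbformat` package (e.g., `nbformat.v4.new_notebook`) creates and validates these structures for you.

```python
import json
from typing import List, Tuple


def make_notebook(cells: List[Tuple[str, str]]) -> dict:
    """Build a minimal nbformat v4 notebook from (cell_type, source) pairs."""
    nb_cells = []
    for cell_type, source in cells:
        if cell_type == "markdown":
            nb_cells.append({"cell_type": "markdown", "metadata": {}, "source": source})
        elif cell_type == "code":
            nb_cells.append({
                "cell_type": "code",
                "execution_count": None,  # not run yet
                "metadata": {},
                "outputs": [],            # filled in by Jupyter when the cell runs
                "source": source,
            })
        else:
            raise ValueError(f"unsupported cell type: {cell_type!r}")
    return {
        "cells": nb_cells,
        "metadata": {"kernelspec": {"name": "python3", "display_name": "Python 3", "language": "python"}},
        "nbformat": 4,
        "nbformat_minor": 4,  # minor version 4 does not require per-cell "id" fields
    }


nb = make_notebook([
    ("markdown", "# Euler's identity\n$e^{i\\pi} + 1 = 0$"),
    ("code", "import cmath\nprint(cmath.exp(1j * cmath.pi) + 1)"),
])
text = json.dumps(nb, indent=1)  # save this string as, e.g., euler.ipynb to open it in Jupyter
print(text[:80])
```

Because the format is plain JSON, notebooks mixing narrative, equations, and code can be generated, diffed, and inspected by ordinary tools, which is part of what makes them easy to share.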

AWS is a Principal Corporate Sponsor of Jupyter and of its 501(c)(3) fiscal sponsor, NumFOCUS.

Several Jupyter contributors and leaders are employed by AWS to work full time on open source.

Jupyter has a layered ecosystem that 1) is founded in a diverse community of users and contributors who 2) establish open standards and protocols, by 3) building extensible software, which is 4) deployed in services and enables the authoring and sharing of content. These layers build on each other, are each irreplaceable, and drive innovation. Ref: https://ieeexplore.ieee.org/document/9387490

1.11. A Brief History of Jupyter

JuPyteR = Julia, Python, R (used by the scientific research community)

2001: Fernando Perez creates the original IPython terminal in grad school (a “thesis procrastination project”; script on GitHub).

2004: Brian Granger and Fernando discuss a web-based notebook interface for IPython at Santa Clara University (SCU).

2004: Fernando and Brian co-create the IPython Notebook.

2005: Min Ragan-Kelley, an undergrad at SCU, becomes the lead in developing IPython Parallel, which was deeply influential in the IPython kernel architecture that Fernando and Brian used to create the IPython Notebook.

2009: Brian creates traitlets, the typed-attribute and configuration system at the core of IPython.

2010: Brian creates PyZMQ, Python bindings for the ZeroMQ library, for lightweight and fast messaging.

2010: Brian and Fernando build the IPython kernel architecture together and move the project to GitHub.

2010: Min takes over the PyZMQ component from Brian.

2011: Brian writes the code for the actual IPython Notebook server and user interface (with ideas from a web-based mock-up by James Gao at Berkeley).

2011: First release of the IPython Notebook in December. By now, other people are contributing to the effort. Brian and Fernando set the vision, obtained funding, hired/mentored/managed interns and staff, designed and built the kernel architecture, the notebook interface, and cultivated and managed the community around the effort.

2014: Fernando, Brian and the other core IPython contributors co-found Project Jupyter and rebrand the IPython Notebook as the Jupyter Notebook.

2015: The Jupyter Steering Council is formed.

2015: JupyterHub is released, serving a preconfigured Jupyter server to multiple users.

2017: JupyterLab is released, providing an extensible environment for interactive and reproducible computing based on the Jupyter Notebook.

2022: Jupyter Scheduler is launched, enabling users to schedule notebook jobs.

2023: Jupyter AI launches, supporting chat and programming interfaces for interacting with a wide range of generative AI models.

2024: Project Jupyter is recognized by the White House for contributions to open source.

References

IPython/Jupyter history: (personal communication)

White House recognition: announcement (2024)

Beg et al. (2021), “Using Jupyter for Reproducible Scientific Workflows”, https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9325550

Jupyter: Thinking and Storytelling With Code and Data (Granger & Perez, IEEE-Xplore, 2021)

Project Jupyter, Computer Code that transformed science (BLCS, 2021)

Ten Computer Codes that transformed science, Nature 2021

Interview with Fernando Perez (Berkeley CDSS, 2021)

A brief history of Jupyter Notebooks (William Horton, EuroPython 2020)

Computational narratives of Jupyter as an engine for data science (Medium, 2015)

1.12. Jupyter Scheduler

Jupyter Scheduler is an open source Jupyter extension that enables users to run and schedule notebooks as jobs.

Configure parameters, instance types, conda environment, etc.

Run once or on a periodic schedule (cron)

Uses an immutable snapshot of the notebook

Download and view outputs (notebook, logs, etc.)

Delete jobs

Rerun jobs

Edit/clone job definitions
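Periodic jobs are described with cron expressions, whose five fields are minute, hour, day of month, month, and day of week. The sketch below is a hypothetical matcher written only to show how such an expression is read; it is not how Jupyter Scheduler evaluates schedules, and it supports just `*`, numbers, ranges (`1-5`), steps (`*/15`), and comma lists.

```python
from datetime import datetime

# Lowest legal value of each field: minute, hour, day of month, month, day of week
_LOWEST = [0, 0, 1, 1, 0]


def _field_matches(field: str, value: int, lowest: int) -> bool:
    """Check one cron field such as '*', '30', '1-5', '*/15', or '0,30'."""
    for part in field.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            # Steps count from the field's lowest value (minute 0, day of month 1, ...)
            if (value - lowest) % int(part[2:]) == 0:
                return True
        elif "-" in part:
            start, end = (int(x) for x in part.split("-"))
            if start <= value <= end:
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(expr: str, when: datetime) -> bool:
    """Return True if `when` falls on the 5-field cron schedule `expr`.

    Simplification: every field must match. Standard cron treats a restricted
    day of month and day of week as "either one", which this sketch ignores.
    """
    fields = expr.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {len(fields)}: {expr!r}")
    cron_dow = (when.weekday() + 1) % 7  # Python: Monday=0; cron: Sunday=0
    values = [when.minute, when.hour, when.day, when.month, cron_dow]
    return all(_field_matches(f, v, lo) for f, v, lo in zip(fields, values, _LOWEST))


# "0 9 * * 1-5" means 09:00 every weekday; July 1, 2024 was a Monday
print(cron_matches("0 9 * * 1-5", datetime(2024, 7, 1, 9, 0)))  # True
print(cron_matches("0 9 * * 1-5", datetime(2024, 7, 6, 9, 0)))  # False (Saturday)
```

A scheduler essentially asks this question once a minute for every job definition and launches the notebook snapshot when the answer is yes.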

Use cases:

Reporting

Lightweight way to move notebooks to production

Experimentation (run a single notebook many times for different parameters)

Scale out (run a notebook on different instance types, clusters, etc.)

Contributed by AWS and governed by Project Jupyter.

Documentation: https://jupyter-scheduler.readthedocs.io/en/latest/

GitHub: jupyter-server/jupyter-scheduler

1.13. Jupyter AI

Jupyter AI is an open source Jupyter extension that brings generative AI and large language models to Jupyter notebooks.

Create a conda environment:

```bash
conda create -n v2 python=3.12
```

Then activate it:

```bash
conda activate v2
```

Then do a full install:

```bash
pip install "jupyter-ai[all]" jupyterlab
```

Use cases:

AI playground

AI assistant

Contributed by AWS and governed by Project Jupyter

Documentation: https://jupyter-ai.readthedocs.io/en/latest/

GitHub: jupyterlab/jupyter-ai

Design Principles

Vendor neutral

Transparent and traceable

Collaborative

Human-centered

Configurable & extensible

%%ai magic commands

Experiment with dozens of language models directly from a notebook.

Turns notebooks into a generative AI playground!

Works anywhere Jupyter notebooks run (JupyterLab, Jupyter Notebook, Colab, VS Code, IPython, etc.)

Include code and outputs in your prompt

Render outputs as HTML, JSON, images, etc.

Chat UI in JupyterLab:

Chat-based AI assistant in JupyterLab.

Include code from notebooks/text files in your chat request.

Copy/paste/insert generated code back into notebooks.

Pick the chat and embedding model you want to use, including local models.

Chat UI is multi-user when JupyterLab’s real-time collaboration is enabled.

The `/learn` and `/ask` commands enable you to ask questions about your local files.

The `/generate` command enables you to generate an entire notebook from a prompt.

The `/export` command allows you to save your chat history.

1.14. Summary

Open source software provides significant value in AI/ML

Rapid innovation

Open collaboration

Best practices, open standards

There are many opportunities to participate in open source communities and contribute; for example, watch Professor Lorena Barba’s talk on jupyter-ai at PyCon24: https://www.youtube.com/watch?v=Q3jcpPIkdy8

Now is a great time to get involved and join us in the community!

```python
import this
```

```
The Zen of Python, by Tim Peters

Beautiful is better than ugly.
Explicit is better than implicit.
Simple is better than complex.
Complex is better than complicated.
Flat is better than nested.
Sparse is better than dense.
Readability counts.
Special cases aren't special enough to break the rules.
Although practicality beats purity.
Errors should never pass silently.
Unless explicitly silenced.
In the face of ambiguity, refuse the temptation to guess.
There should be one-- and preferably only one --obvious way to do it.
Although that way may not be obvious at first unless you're Dutch.
Now is better than never.
Although never is often better than *right* now.
If the implementation is hard to explain, it's a bad idea.
If the implementation is easy to explain, it may be a good idea.
Namespaces are one honking great idea -- let's do more of those!
```

1.15. Let’s get started!

Install the following:

1. VSCode: https://code.visualstudio.com
2. git, if you do not already have it. You can try running `git` in your terminal to check whether it’s already there: https://git-scm.com/book/en/v2/Getting-Started-Installing-Git
3. The conda ecosystem from Anaconda: https://www.anaconda.com (installation documentation: https://docs.anaconda.com/anaconda/install/)
4. JupyterLab: https://anaconda.org/conda-forge/jupyterlab
5. jupyter-ai as a contributor, by following the instructions here: https://jupyter-ai.readthedocs.io/en/latest/contributors/index.html. The steps are as follows.

Create a conda environment:

```bash
conda create -n jupyter-ai -c conda-forge python=3.11 nodejs=20
```

Activate the environment from the terminal:

```bash
conda activate jupyter-ai
```

Clone the jupyter-ai GitHub repository (jupyterlab/jupyter-ai) into any folder on your machine. In my case, I created a folder called GitHub, inside which I cloned the jupyter-ai repo:

```bash
git clone https://github.com/jupyterlab/jupyter-ai.git
```

You may also want to use GitHub Desktop for managing your Git repositories: apps/desktop

Change directory to the cloned folder:

```bash
cd jupyter-ai
```

Compile the jupyter-ai extension by running the following script from the repository root:

```bash
./scripts/dev-install.sh
```

Run the JupyterLab package manager (jlpm) to complete the install:

```bash
jlpm && jlpm build
```

Start JupyterLab:

```bash
jlpm dev
```

You can also start JupyterLab from any terminal in which the installed conda environment is active:

```bash
jupyter lab
```

Try jupyter-ai by following this blog: https://blog.jupyter.org/generative-ai-in-jupyter-3f7174824862

See this recent talk: https://engineering.gwu.edu/professor-lorena-barba-demonstrates-transformative-use-jupyter-ai-engineering-education

… as well as:

1.16. Using Ollama

Ollama enables running open models on your laptop for free!

This is easiest to run locally on your laptop. The following URLs are what you need.

GitHub: ollama/ollama

Install: https://ollama.com

Steps:

1. Install ollama (follow the instructions on the sites above).
2. From the command line, you can pull any model; for example, use mistral from https://mistral.ai:

   ```bash
   ollama pull mistral
   ```

3. Then, from the command line, you can try:

   ```bash
   ollama run mistral
   ```

   You can also run this directly and skip step 2.
4. To see installed models, run:

   ```bash
   ollama list
   ```

Let’s also look at code to run the model in Jupyter locally once you have started this up as above. You may want to do this to call the models programmatically, and not in chat mode from the command line.

Note: You cannot run this in Colab, only locally.

Of course, you can also use Ollama models from inside jupyter-ai, which is highly recommended.
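Before calling the model programmatically, it can help to check that the local Ollama server is up. This sketch assumes Ollama’s default address, `http://localhost:11434`, and uses only the standard library; `ollama_is_running` is a hypothetical helper, not part of the `ollama` package.

```python
import http.client
import urllib.request


def ollama_is_running(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if an HTTP server (assumed to be Ollama) answers at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # URLError, refused connections, and timeouts are all OSError subclasses
        return False


if not ollama_is_running():
    print("Ollama is not reachable; start it with `ollama serve` or the desktop app.")
```

This is also a quick way to see why the calls below fail in Colab: nothing is listening on that port there.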


```python
!pip install ollama
import ollama
```


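```python
import ollama

# Requires the Ollama server to be running locally with the mistral model pulled
response = ollama.chat(model='mistral', messages=[
    {
        'role': 'user',
        'content': 'What is the difference between a quark and a quirk?',
    },
])

print(response['message']['content'])
```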
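The call above sends a single message. `ollama.chat` does not remember earlier calls, so a multi-turn conversation resends the accumulated history each time. The helper below is a hypothetical sketch of that bookkeeping: by default it calls `ollama.chat` (which needs the local server running), and it accepts any function with the same call shape, which lets the stand-in `echo_chat` exercise it offline.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


def chat_turn(history: List[Message], user_text: str, model: str = "mistral",
              chat_fn: Optional[Callable] = None) -> str:
    """Send the history plus one new user message; record and return the reply."""
    if chat_fn is None:
        import ollama  # imported lazily so the helper works without the package installed
        chat_fn = ollama.chat
    messages = history + [{"role": "user", "content": user_text}]
    response = chat_fn(model=model, messages=messages)
    reply = response["message"]["content"]
    # Only commit the exchange to the history once the call has succeeded
    history[:] = messages + [{"role": "assistant", "content": reply}]
    return reply


def echo_chat(model: str, messages: List[Message]) -> dict:
    """Hypothetical offline stand-in with the same call shape as ollama.chat."""
    return {"message": {"role": "assistant", "content": f"({len(messages)} messages seen by {model})"}}


history: List[Message] = [{"role": "system", "content": "Answer in one sentence."}]
print(chat_turn(history, "What is a quark?", chat_fn=echo_chat))      # (2 messages seen by mistral)
print(chat_turn(history, "And what is a quirk?", chat_fn=echo_chat))  # (4 messages seen by mistral)
# With a running server, omit chat_fn to use the real model:
# chat_turn(history, "What is the difference between a quark and a quirk?")
```

Because the full history is resent on every turn, long conversations grow the prompt (and, for hosted APIs, the token bill) with each exchange.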